2 - Specifications, Technology & PureVideo

GeForce 9600 GT Architecture

An introduction to the GeForce 9600 GT graphics card

Rumors about the GeForce 9600 GT graphics card have been swirling ever since November 2007, so much so that it hardly needed an announcement from the company. If you browsed the web a little, you have probably already heard practically everything there is to know about the card. GeForce 9600 GT products are based on a GPU with 64 stream processors and come with 512MB of GDDR3 memory on a (yay, finally) 256-bit memory bus. I'm pretty excited that the mid-range is finally making the move to a 256-bit memory bus.

The core and memory speeds are 650 MHz and 900 MHz, respectively. For the real Gurus: the shader domain is clocked at 1625 MHz. The total memory bandwidth is 57.6 GB/s, with a texture fill rate of 20.8 billion texels per second.
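For those who like to check the math, here is a minimal sketch of where those two figures come from. Two inputs are assumptions that are not stated in this article: that GDDR3 moves data twice per memory clock, and that the GPU has 32 texture units. The function names are made up for illustration.

```python
# Back-of-the-envelope check of the quoted 9600 GT figures.
# Assumptions (not stated in the article): GDDR3 transfers data twice per
# memory clock, and the GPU has 32 texture units.

def memory_bandwidth_gbs(mem_clock_mhz, bus_width_bits, transfers_per_clock=2):
    """Peak memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return mem_clock_mhz * transfers_per_clock * bytes_per_transfer / 1000


def texture_fill_rate_gtexels(core_clock_mhz, texture_units):
    """Peak texture fill rate in billions of texels per second."""
    return core_clock_mhz * texture_units / 1000


print(f"256-bit bus: {memory_bandwidth_gbs(900, 256):.1f} GB/s")
print(f"128-bit bus: {memory_bandwidth_gbs(900, 128):.1f} GB/s")
print(f"Texture fill rate: {texture_fill_rate_gtexels(650, 32):.1f} GTexels/s")
```

At the same memory clock, a 128-bit bus would deliver exactly half the bandwidth, which is why the move to 256-bit matters so much for a mid-range card.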

The GPU, under the new codename 'D9M' (G94 for the rest of us), is a DirectX 10, OpenGL 2.1, Shader Model 4.0 product designed for PCI Express 2.0. NVIDIA claims it will deliver a performance boost of up to 90 per cent over the GeForce 8600 GTS, which we'll validate in our benchmark session. In the more extreme situations the product should nearly double the framerate compared to the 8600 series.

For the freaks and Gurus: the GPU is manufactured on TSMC's 65nm process node and accounts for half a billion transistors (505 million, to be precise). As stated, it has 64 unified shader processors, tied to 16 ROPs.

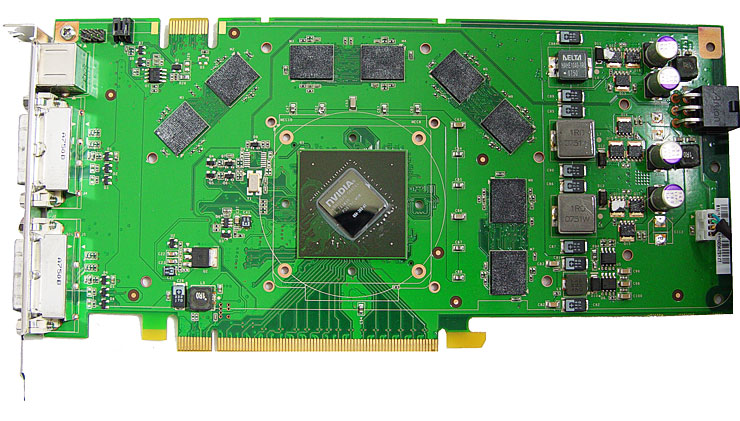

Let's strip her nekkid.

D9M-based cards can peak at 90 Watts of power consumption and will therefore require a power supply of at least 400W with 26A available on the 12V rail. The card also requires a 6-pin PCI-Express power connector, as it can draw more than the 75 Watts a PCIe slot delivers. That is new compared to previous mid-range cards such as the 8600 GT (the 8600 GTS being the exception).
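The power numbers can be sanity-checked with a quick sketch. It assumes the usual PCI Express limits of 75 W from the x16 slot and 75 W from a 6-pin connector; the helper names are hypothetical and only there for illustration.

```python
# Rough power-budget check for a card that peaks at 90 W.
# Assumption: a PCIe x16 slot and a 6-pin PCIe connector supply up to 75 W each.
PCIE_SLOT_W = 75
SIX_PIN_W = 75


def needs_aux_connector(peak_watts):
    """True when the card can draw more than the slot alone delivers."""
    return peak_watts > PCIE_SLOT_W


def board_power_budget(six_pin_connectors=1):
    """Maximum power available to the card from slot plus 6-pin connectors."""
    return PCIE_SLOT_W + six_pin_connectors * SIX_PIN_W


def rail_capacity_watts(amps, volts=12.0):
    """Power a supply rail can deliver at the given current."""
    return amps * volts


print(needs_aux_connector(90))      # 90 W peak exceeds the 75 W slot limit
print(board_power_budget(1))        # slot + one 6-pin connector
print(rail_capacity_watts(26))      # the recommended 26 A on the 12 V rail
```

The recommended 12V capacity is far above what the card itself draws, since the same rail also has to feed the CPU, drives and the rest of the system.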


The 9600 GT will launch at prices ranging from $169 to $189. Special edition (OC) versions will likely be more expensive. In Europe, expect an initial sales price of 169 EUR for this 512MB model. Make no mistake, today is all about the 512MB models. In time I do expect both 256MB and 512MB versions, with pricing tied to memory capacity.

So in a nutshell, that's the 9600 GT. It is the first mainstream NVIDIA graphics card with a 256-bit memory bus, and it will be an interesting competitor to AMD's Radeon HD 3850 and 3870.

The 9600 GT features two dual-link, HDCP-enabled DVI-I outputs. Both HDMI and DVI support HDCP (High-bandwidth Digital Content Protection), which will be a requirement for protected content. In the long run the card can support DisplayPort connectors as well, though this obviously depends on the board partner.

Next to bringing a pretty competitive product to the market, NVIDIA also introduced some new PureVideo features allowing several new functions. Let's have a look at these first.

New PureVideo Enhancements

PureVideo HD is a video engine built into the GPU of your graphics card (dedicated core logic). It allows for dedicated GPU-based video processing to accelerate, decode and enhance the image quality of low- and high-definition video in the following formats: H.264, VC-1, WMV/WMV-HD, and MPEG-2 (HD). Speaking more generically, your graphics card can be used to decode SD/HD material in two categories:

HD Acceleration

The more your graphics card can decode the better, as it lowers the number of CPU cycles your PC has to spend. We'll measure with the two most popular codecs used on both Blu-Ray and HD-DVD movies. VC-1 is without a doubt the most used format, followed by the hefty, but oh so sweet, H.264 format. We'll fire off a couple of movies and allow the graphics cards to decode the content; meanwhile, like a vicious minx, we'll be monitoring and recording the CPU load of the test PC.

HD Quality

Not only can the graphics card help offload the CPU, it can also improve (enhance) image quality, as it should. So besides checking out the performance of AMD's Avivo HD and NVIDIA's PureVideo HD video engines, we want to see how they affect image quality, i.e. how they post-process and enhance the picture of the movie.

Basically, in the entire GeForce Series 8 and obviously the new Series 9 range we see a 10-Bit display processing pipeline and also new post-processing options like:

- VC-1 & H.264 HD Spatial-Temporal De-Interlacing

- VC-1 & H.264 HD Inverse Telecine

- HD Noise Reduction

- HD Edge Enhancement

- **HD Dynamic Contrast Enhancement**

- **HD Dynamic Color Enhancement**

Today's newly added features in bold will be available for all GeForce series 8 & 9 products. With the GeForce 9600 GT comes the exact same VP2 decoding engine as found on the 8800 GT. You get low-CPU-load decoding and post-processed 1080p image quality options with HD-DVD and Blu-Ray, just like on the 8800 GT, thanks to the built-in Video Processor 2 engine. I quickly verified: the HQV-HD score is at its maximum of 100 points, so it's working like a charm.

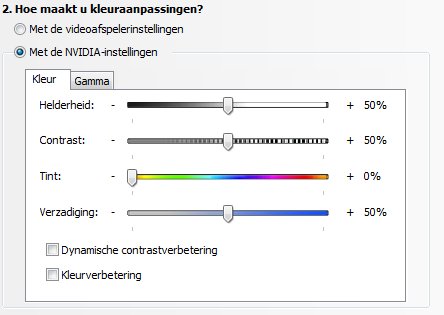

Now read the topic heading carefully, as with this new release we are mainly talking about enhancements. In the upcoming drivers you'll notice the addition of two new features: Dynamic Contrast Enhancement and Dynamic Color Enhancement.

Dynamic Contrast Enhancement does pretty much what the name says: it improves the contrast ratio in videos in real time, on the fly. It's a bit of a tricky thing to do, as there are certain situations where you do not want your contrast increased. Think for example of a scary thriller with a dark environment ... and all of a sudden your trees light up. With that in mind, the implementation has been done very delicately. It does work pretty well, but personally I'd rather tweak the contrast ratio myself and leave it at that.
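To make the idea a little more concrete, here is a toy sketch of a "delicate" contrast stretch. This is purely an illustration and not NVIDIA's algorithm, which isn't documented here. It assumes 8-bit luma values for one frame, stretches them around the frame's own average brightness so a dark scene stays dark, and caps the gain with a hypothetical `max_gain` parameter.

```python
# Toy illustration only: NOT NVIDIA's actual implementation.
# Assumption: a frame is represented as a list of 8-bit luma values (0-255).

def enhance_contrast(luma, max_gain=1.5):
    """Stretch luma values around the frame mean, with a capped gain."""
    lo, hi = min(luma), max(luma)
    if hi == lo:
        return list(luma)  # flat frame, nothing to stretch
    mean = sum(luma) / len(luma)
    # Full-range stretch would use 255 / (hi - lo); cap it to stay subtle.
    gain = min(255 / (hi - lo), max_gain)
    return [max(0, min(255, round(mean + (v - mean) * gain))) for v in luma]


dark_scene = [10, 20, 30]
print(enhance_contrast(dark_scene))  # [5, 20, 35]
```

Anchoring the stretch on the mean and capping the gain is one simple way to avoid the "trees lighting up" effect: the spread between dark and bright grows, but the overall brightness of the frame does not shift.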

To the right you can see a screenshot showing where the new options are located, and yes, sorry for the Dutch language.

** Here's an idea for NVIDIA's driver team: selectable languages in the ForceWare drivers.

The second feature is Dynamic Color Enhancement. It's pretty much a color tone enhancement feature that applies a slight color correction where it's needed. We'll show you that in a bit, as I quite like this feature; it makes certain aspects of a movie a little more vivid.

There is also a small new addition for Vista Aero enthusiasts. Previously, when you played back a movie using the graphics processor with software like PowerDVD, Vista Aero had to be shut down and the desktop reverted to the basic Vista theme. This has now been solved: window transparency, thumbnail previews etc. all work as intended.

Also new is a feature called Dual-Stream decode. It pretty much boils down to the fact that you can display two video streams simultaneously. That's pretty handy if you watch a Blu-Ray movie with a small director's commentary window on the lower part of your screen.

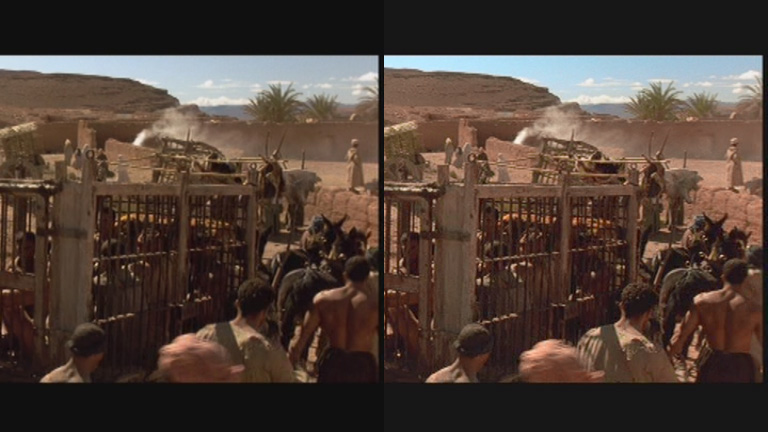

Let's split the frames in two and compare with all the interesting tweaks enabled. Two older features, edge enhancement and noise reduction, are obviously also at your disposal. To the left is the baseline (first) image, to the right the final result. Once we enable these as well and combine them with the dynamic contrast enhancement and color enhancement options, we see a distinct difference in image quality. Thanks to edge enhancement the frame is sharper.

Anyway, let's have a look at Inno3D's offering.