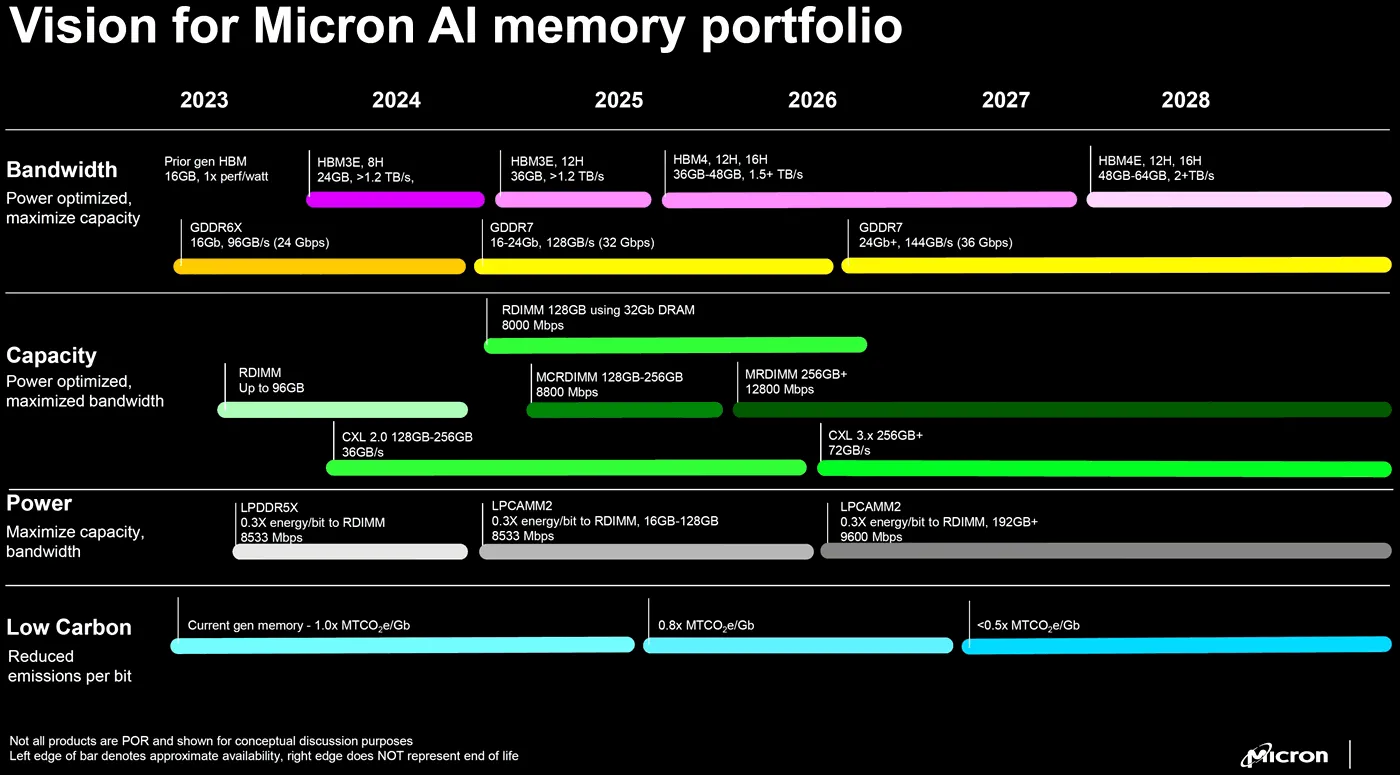

The first gaming graphics cards (GPUs) to use the new GDDR7 memory may stick with 16 Gbit (2 GB) memory chips, the same density found on today's GeForce RTX 40-series cards. The information comes from kopite7kimi, a leaker with a solid track record on NVIDIA GeForce products. Because each memory chip connects over a 32-bit interface, a card with a 256-bit memory bus carries eight of these chips, for a total of 16 GB of video memory. The current flagship, the RTX 4090, reaches 24 GB by pairing twelve of them with its 384-bit bus.

The leak also suggests that NVIDIA might not use standard GDDR7 across its entire lineup. As with previous generations, it could instead partner with a memory maker on a custom variant tuned for its graphics cards, much as NVIDIA and Micron Technology co-developed GDDR6X.

The first GDDR7 chips, expected in late 2024 or early 2025, could run at up to 32 Gbps per pin. A second wave could push this to 36 Gbps in late 2025 or 2026, mirroring how GDDR6 speeds climbed over its lifetime.
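The capacity figures above follow from simple arithmetic: the number of chips is the bus width divided by 32 bits, and total memory is that count times the per-chip density. The sketch below reproduces them, assuming one chip per 32-bit channel (no clamshell mode). It also converts the quoted per-pin speeds into theoretical peak bandwidth; the 256-bit bus used there is an illustrative assumption, not a confirmed configuration for any upcoming card.

```python
# Assumption: each GDDR6/GDDR6X/GDDR7 chip exposes a 32-bit interface,
# and each 32-bit channel holds exactly one chip (no clamshell mode).
BITS_PER_CHIP = 32


def chip_count(bus_width_bits: int) -> int:
    """Number of memory chips needed to populate the bus."""
    if bus_width_bits % BITS_PER_CHIP:
        raise ValueError("bus width must be a multiple of 32 bits")
    return bus_width_bits // BITS_PER_CHIP


def capacity_gb(bus_width_bits: int, chip_gbit: int) -> float:
    """Total video memory in GB: chips x density (Gbit) / 8 bits per byte."""
    return chip_count(bus_width_bits) * chip_gbit / 8


def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: data pins x per-pin rate / 8."""
    return bus_width_bits * gbps_per_pin / 8


print(capacity_gb(256, 16))         # 16.0: 8 chips x 2 GB
print(capacity_gb(384, 16))         # 24.0: 12 chips x 2 GB, as on the RTX 4090
print(peak_bandwidth_gbs(256, 32))  # 1024.0 GB/s at the first-wave 32 Gbps
print(peak_bandwidth_gbs(256, 36))  # 1152.0 GB/s at the second-wave 36 Gbps
```

Real-world bandwidth is lower than these theoretical peaks, and actual bus widths for GDDR7 cards are not part of the leak.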

GDDR7 is also significant because it supports "odd" chip densities such as 24 Gbit (3 GB), not just the usual powers of two like 2 GB or 4 GB. This flexibility lets memory capacity be matched more closely to a graphics chip's capabilities. For example, a 256-bit card built with 3 GB chips would have 24 GB, instead of having to double from 16 GB to a costly 32 GB.
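To show what the extra density option changes, the sketch below compares capacities on a few common bus widths using 2 GB chips, 3 GB chips, and 2 GB chips in clamshell mode (two chips sharing each 32-bit channel, the usual way to double memory). The bus widths are illustrative assumptions, not leaked specifications for any particular card.

```python
# Assumption: one chip per 32-bit channel, or two per channel in clamshell mode
# (each chip then runs a 16-bit interface, doubling the chip count).
BITS_PER_CHIP = 32


def capacity_gb(bus_width_bits: int, chip_gb: int, clamshell: bool = False) -> int:
    """Total video memory in GB for a given bus width and per-chip capacity."""
    chips = bus_width_bits // BITS_PER_CHIP
    if clamshell:
        chips *= 2  # two chips share each 32-bit channel
    return chips * chip_gb


# Illustrative bus widths only; not confirmed GDDR7 card configurations.
print(f"{'bus':>5} {'2 GB':>6} {'3 GB':>6} {'2 GB x2':>8}")
for bus in (128, 192, 256, 384):
    print(
        f"{bus:>5} {capacity_gb(bus, 2):>6} {capacity_gb(bus, 3):>6} "
        f"{capacity_gb(bus, 2, clamshell=True):>8}"
    )
```

With 3 GB chips, each bus width gains an intermediate step (for example 24 GB on a 256-bit bus) between the standard and clamshell configurations.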

Sources: kopite7kimi (Twitter), 3DCenter.org