The Lumenex Engine

Okay, which marketing bozo came up with that word? One of the things you'll notice in the new Series 8 products is that a number of pre-existing features have become much better, and I'm not only talking about the overall performance improvements and new DX10 features. Nope, NVIDIA also had a good look at image quality. Image quality is significantly improved on GeForce 8800 GPUs over the prior generation with what NVIDIA calls the Lumenex engine.

You will now have the option of 16x full-screen multisampled antialiasing quality at near 4x multisampled antialiasing performance on a single GPU, with the help of a new AA mode called Coverage Sampled Antialiasing (CSAA). We'll get into this later, but pretty much this is a math-based approach: the new CSAA mode computes and stores boolean coverage at 16 subsamples, and yes, this is the point where we lost you, right? We'll drop it.
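For the curious who didn't get lost: here is a minimal sketch of why more coverage samples give smoother edges. It is only an illustration under stated assumptions. The sample pattern (`sample_positions` and its `stride` parameter) is hypothetical and not NVIDIA's real layout, and the edge is a simple vertical line sweeping across one pixel. Each subsample only stores a boolean (covered or not), and the number of distinct coverage fractions is the number of shades an edge pixel can take.

```python
def sample_positions(n, stride):
    """n subsample positions in a unit pixel, each with a unique x and y.

    Hypothetical pattern for illustration; not NVIDIA's actual sample layout.
    """
    return [((i + 0.5) / n, ((i * stride) % n + 0.5) / n) for i in range(n)]


def coverage_mask(edge_x, positions):
    """One boolean per subsample: True if it lies left of a vertical edge."""
    return [x < edge_x for x, _y in positions]


def shades(positions, steps=1000):
    """Count distinct coverage fractions as the edge sweeps across the pixel."""
    fractions = set()
    for s in range(steps + 1):
        mask = coverage_mask(s / steps, positions)
        fractions.add(sum(mask) / len(mask))
    return len(fractions)


four = sample_positions(4, 3)      # stand-in for plain 4x multisampling
sixteen = sample_positions(16, 7)  # stand-in for 16 coverage samples

print(shades(four), shades(sixteen))  # prints "5 17"
```

With 4 samples an edge pixel can take 5 different shades, with 16 coverage samples it can take 17. Since a boolean per subsample is far cheaper to store than a full color and depth value, that is roughly how you end up with 16x-looking edges at close to 4x cost.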

So what you need to remember is that CSAA enhances application antialiasing modes with higher quality antialiasing. The new modes are called 8x, 8xQ, 16x, and 16xQ. The 8xQ and 16xQ modes provide first class antialiasing quality TBH.

If you pick up a GeForce 8800 GTS/GTX then please remember this: each new AA mode can be enabled from the NVIDIA driver control panel and requires the user to select an option called Enhance the Application Setting. Users must first turn on ANY antialiasing level within the game's control panel for the new AA modes to work, since they need the game to properly allocate and enable anti-aliased rendering surfaces.

If a game does not natively support antialiasing, a user can select an NVIDIA driver control panel option called Override Any Applications Setting, which allows any control panel AA settings to be used with the game. Also you need to know that in a number of cases (such as the edges of stencil shadow volumes) the new antialiasing modes cannot be enabled, and those portions of the scene will fall back to 4x multisampled mode. So there definitely is a bit of a tradeoff going on, as it is a "sometimes it works but sometimes it doesn't" kind of feature.

So I agree, a very confusing method. I would simply like to select in the driver which AA mode I prefer, something like "Force CSAA when applicable". Yes, something for NVIDIA to focus on. We'll test CSAA with a couple of games in our benchmarks.

But 16x quality at almost 4x performance, really good edges, really good performance, that obviously is always lovely.

One of the most heated issues with the previous generation products, as opposed to the competition, was the fact that NVIDIA graphics cards could not render AA+HDR at the same time. Well, that was not entirely true though, as it was possible with the help of shaders, as exactly four games have demonstrated. But it was a far from efficient method, a very far cry (Ed: please no more puns!) you might say.

So what if I were to say that now you can not only push 16xAA with a single G80 graphics card, but also do full 128-bit FP (floating point) HDR! To give you a clue: the previous architecture could not do HDR + AA, but technically it could do 64-bit HDR (just like the Radeons). So NVIDIA got a good wakeup call and noticed that a lot of people were buying ATI cards just so they could do HDR & AA the way it was intended. Now the G80 will do the same, but even better. Look at 128-bit wide HDR as a palette of brightness/color range that is just amazing. Obviously we'll see this in games as soon as they adopt it, and believe me they will. 128-bit precision (32-bit floating point values per component) permits almost real-life lighting and shadows. Dark objects can appear extremely dark, and bright objects can be exhaustingly bright, with visible details present at both extremes, in addition to completely smooth gradients in between.

As stated, HDR lighting effects can now be used together with multisampled antialiasing on GeForce 8 Series GPUs, along with the new angle-independent anisotropic filtering. The antialiasing can be used in conjunction with both FP16 (64-bit color) and FP32 (128-bit color) render targets.
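Where do those 64-bit and 128-bit numbers come from? It's simply four color components (red, green, blue, alpha) times 16 or 32 bits each. A small sketch with Python's standard `struct` module makes the arithmetic visible. The pixel values are made up for illustration, and this only shows the storage math, not how the GPU actually lays out a render target.

```python
import struct

# One RGBA pixel with a very bright red channel, as an HDR scene might hold.
# The values are made up for illustration.
pixel = (1000.0, 0.5, 0.001, 1.0)

fp16 = struct.pack("<4e", *pixel)  # four half-precision (16-bit) floats
fp32 = struct.pack("<4f", *pixel)  # four single-precision (32-bit) floats

bits_fp16 = len(fp16) * 8
bits_fp32 = len(fp32) * 8
print(bits_fp16, bits_fp32)  # prints "64 128"

# Precision differs too: read the stored values back and compare the errors.
back16 = struct.unpack("<4e", fp16)
back32 = struct.unpack("<4f", fp32)
err16 = abs(back16[2] - pixel[2])
err32 = abs(back32[2] - pixel[2])
```

The dim blue value of 0.001 comes back noticeably less accurate from the FP16 pixel than from the FP32 one, which is the "smooth gradients at both extremes" argument in a nutshell.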

Improved texture quality is something I MUST mention. We have all been complaining about shimmering effects and lesser filtering quality than the Radeons; that's a thing of the past. NVIDIA added raw horsepower for texture filtering, making it really darn good, and in fact claims it's even better than what is currently the most expensive team red product (X1950 XTX). Well... we can test that!

Allow me to show you. See, I have this little tool called D3D AF Tester which helps me judge image quality in terms of anisotropic filtering. So basically we knew that ATI has always been better at IQ compared to NVIDIA.

|

|

|

|

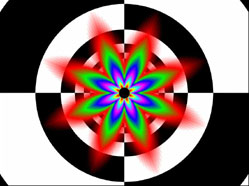

GeForce 7900 GTX 16xAF (HQ) |

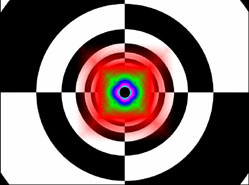

Radeon X1900 XTX 16xHQ AF |

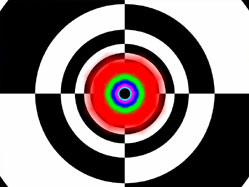

GeForce 8800 GTX 16xAF Default |

Now have a look at the images above and let it sink in. It would go too far to explain exactly what you are looking at, but the closer the colored shape in the middle is to a perfectly round circle, the better the image quality. A perfectly round circle is perfect IQ.

Impressive, huh? The AF patterns are just massively better compared to previous generation hardware. Look at that... that is default IQ; that's just really good...

What about Physics?

You can use the graphics card as a physics card with the help of what NVIDIA would like to market as the Quantum Effects physics engine.

Yes, the GeForce 8800 GPUs are capable of handling complete physics computations that free the CPU from physics calculations and allow the CPU to focus on AI and game management. The GPU is now a complete solution for both graphics and physics. We all know that physics acceleration really is the next hip thing in gaming. With the help of shaders you can already push a lot of debris around, but now you can also take a card to the next level.

As soon as titles start supporting it, the question will be where the physics gets done: on one of the cores of that new quad-core Intel CPU, on another dedicated graphics card that you add in as demonstrated by NVIDIA and ATI, on an Ageia card, or, in the case of the G80, in the GPU itself. I most definitely prefer this last alternative, as I think physics processing belongs in the GPU, as close as possible to the stuff that renders your games. Basically what happens is this: the Quantum Effects engine can utilize some of the unified shader processors to calculate the physics data with the help of a new API that NVIDIA has created, CUDA. CUDA is short for Compute Unified Device Architecture. Is anyone thinking GPGPU here? No? Well, I am. The thing is, NVIDIA is making it accessible to developers, as you can code physics in C (a common programming language).

A good example of GPGPU is ATI's recent development of calculating data over the GPU in the form of distributed computing. You run calculations on the GPU that such a dedicated processor was never intended for, but which it can handle. NVIDIA is now utilizing a similar method with CUDA, and kudos to them for developing it.

NVIDIA is making this API completely available to programmers through an SDK. Since it's coded in C it's widely supported by programmers, as it's a much preferred way of programming; basically any programmer can code in C. So to put it simply, game developers, or for that matter any developer, can code some pretty nifty functions in C. NVIDIA has a very nice demo of it called fluid in a box, which should be released pretty soon.
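To give an idea of why physics maps so nicely onto a pile of shader processors, here is a minimal sketch of a debris simulation. Mind you, this is plain Python standing in for the real thing, not CUDA and not NVIDIA's API; the names `Particle`, `step` and `simulate` and the bounce rule are assumptions made up for this example. The point is that each particle is updated without looking at any other particle, so every `step()` call could in principle be handed to a separate processor.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, acting on the y axis


@dataclass
class Particle:
    y: float   # height above the floor
    vy: float  # vertical velocity


def step(p, dt):
    """Advance one particle by dt seconds; needs no data from other particles."""
    vy = p.vy + GRAVITY * dt
    y = p.y + vy * dt
    if y < 0.0:  # hit the floor: bounce back up and lose half the speed
        y, vy = -y, -vy * 0.5
    return Particle(y, vy)


def simulate(particles, dt, steps):
    """Apply step() to every particle; on a GPU these calls could run in parallel."""
    for _ in range(steps):
        particles = [step(p, dt) for p in particles]
    return particles


# Five bits of debris dropped from 1 to 5 meters, simulated for one second.
debris = [Particle(y=float(h), vy=0.0) for h in range(1, 6)]
result = simulate(debris, dt=0.01, steps=100)
```

The list comprehension inside `simulate` is the part a GPU would spread over its shader processors, one particle per thread. Things get harder once particles have to collide with each other, which is exactly where real developer support will have to prove itself.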

Here's the catch: I'm not really sure how successful it'll be compared to a dedicated physics processing unit, as I believe that physics processing on a GPU that has to render the game as well might be a bit too much. But hey, time will tell, as yes, we first need developer support to get this going.

It's doable, yet I'm holding off on casting an opinion until we have seen some actual implementations in games.