
Overclocking & Tweaking

Before we dive into an extensive series of tests and benchmarks, we need to explain overclocking. With most videocards, a few easy tricks can boost overall performance a little. You can do this at two levels: tweaking, by enabling a registry or BIOS hack or even tampering with image quality, and overclocking, which by far gets you the best results.

What do we need?

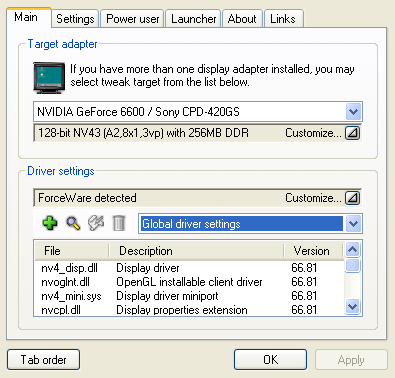

One of the best tools for overclocking NVIDIA and ATI videocards is our own RivaTuner, which you can download here. If you own an NVIDIA graphics card, NVIDIA actually offers very nice built-in overclocking options in the display driver properties. They are hidden, though, and you need to enable them with a small registry hack called CoolBits, which you can download right here (after downloading and unpacking, double-click the .reg file and confirm the import).

Where should we go?

Overclocking: by increasing the frequency of the videocard's memory and GPU, we make the card run more clock cycles, and thus more calculations, per second. It sounds hard, but it really can be done in a few minutes. I always recommend that novice users raise the core and memory clocks by no more than 5-10%. Example: if your card runs at 300 MHz, I suggest you don't go any higher than 330 MHz.
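To put the 5-10% rule into numbers, here is a small Python sketch that turns a stock clock into a conservative target range. The function name and its default percentages are our own illustration of the rule above, not part of RivaTuner or the NVIDIA driver.

```python
from typing import Tuple


def safe_overclock_range(
    stock_mhz: float, low_pct: float = 5.0, high_pct: float = 10.0
) -> Tuple[float, float]:
    """Return (lower, upper) target clocks for a conservative 5-10% overclock."""
    lower = stock_mhz * (1 + low_pct / 100)
    upper = stock_mhz * (1 + high_pct / 100)
    return lower, upper


if __name__ == "__main__":
    low, high = safe_overclock_range(300)
    print(f"Stock 300 MHz: aim for {low:.0f}-{high:.0f} MHz")
    # prints: Stock 300 MHz: aim for 315-330 MHz
```

Apply it separately to the core and to the memory clock, since each has its own stock value.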

More advanced users often push the frequency much higher. With the memory, once your 3D graphics start to show artifacts such as white dots ("snow"), you should go down 10 MHz and leave it there.

The core can behave somewhat differently. When you overclock it too hard, it will usually start to show artifacts or empty polygons, or it may even freeze. I recommend that you back down at least 15 MHz from the point where you first notice an artifact. Look carefully and observe well.
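The step-up-and-back-off routine described above can be sketched as a simple search. This is a minimal illustration under stated assumptions: `shows_artifacts` is a hypothetical callback standing in for you running a 3D test at a given clock and watching for snow, empty polygons or freezes, and the step size and search ceiling are values you pick yourself. Following the advice above, use a back-off of 10 MHz for memory and 15 MHz for the core.

```python
from typing import Callable


def find_stable_clock(
    stock_mhz: int,
    step_mhz: int,
    backoff_mhz: int,
    shows_artifacts: Callable[[int], bool],
    max_mhz: int,
) -> int:
    """Raise the clock step by step until artifacts appear, then back off.

    shows_artifacts is a hypothetical stand-in for running a 3D test at the
    given clock and checking for snow, empty polygons or a freeze.
    """
    clock = stock_mhz  # last clock that tested clean
    while clock + step_mhz <= max_mhz:
        candidate = clock + step_mhz
        if shows_artifacts(candidate):
            # Back off from the first clock that showed artifacts, but never
            # settle above the last clean clock or below stock.
            return max(stock_mhz, min(clock, candidate - backoff_mhz))
        clock = candidate
    # Hit the self-imposed ceiling without seeing any artifacts.
    return clock


if __name__ == "__main__":
    # Simulated card that starts showing artifacts above 345 MHz (core back-off 15 MHz).
    print(find_stable_clock(300, 10, 15, lambda mhz: mhz > 345, max_mhz=400))
    # prints: 335
```

The `min(clock, ...)` guard is a design choice of this sketch: if your step is larger than the back-off, simply subtracting 10 or 15 MHz could land on a clock you never tested, so it falls back to the last clock that ran clean.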

All in all, do it at your own risk. Overclocking your card too far, or running it constantly at its maximum limit, might damage it, and such damage is usually not covered by your warranty.

You benefit most from overclocking with a product that is limited or, as you may call it, "tuned down." We know that this graphics core is often limited by its clock frequency or by memory bandwidth, so by increasing the core and memory frequencies we should see higher performance. A simple trick to get some more bang for your buck.

The GeForce 6600 runs its core at 300 MHz by default. Its DDR memory runs at 2x275 MHz, thus 550 MHz effective. Overclocked, our sample was capable of running at 550 MHz on the core and 575 MHz on the memory.
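The clock arithmetic above is easy to check. This sketch, with helper names of our own choosing, doubles the DDR clock to get the effective rate and expresses each overclock as a percentage gain over stock.

```python
def ddr_effective_mhz(actual_mhz: float) -> float:
    """DDR memory transfers data on both clock edges, so double the clock."""
    return 2 * actual_mhz


def overclock_gain_pct(stock_mhz: float, overclocked_mhz: float) -> float:
    """Percentage increase of an overclocked frequency over its stock value."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100


if __name__ == "__main__":
    mem_stock = ddr_effective_mhz(275)  # 550 MHz effective
    core_gain = overclock_gain_pct(300, 550)
    mem_gain = overclock_gain_pct(mem_stock, 575)
    print(f"Core: +{core_gain:.1f}%  Memory: +{mem_gain:.1f}%")
    # prints: Core: +83.3%  Memory: +4.5%
```

The gap between those two percentages is exactly what makes the core result so striking and the memory result comparatively modest.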

The high core overclock is something we observe with almost all 6600 cards; it's just amazing. But to use that high core clock efficiently you need more memory bandwidth, and memory-wise the overclock is a bit disappointing. Overall, though, a fantastic overclock. Take a good look at the numbers in the benchmarks, as you'll see a very nice difference when we enable the overclock.
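Why does the small memory overclock hold back the big core gain? A rough way to see it is to compute peak memory bandwidth and divide it by the core clock. The sketch below assumes a 128-bit memory interface for the GeForce 6600; that bus width is not stated in this article, so check your own card's specifications before relying on the absolute figures. Whatever the bus width, the overclocked core gets noticeably fewer bytes of bandwidth per clock cycle than at stock speeds.

```python
# Assumption: a 128-bit memory interface. This article does not state the
# bus width, so replace this with your own card's specification.
BUS_WIDTH_BITS = 128


def bandwidth_gb_s(effective_mhz: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


def bytes_per_core_clock(
    effective_mem_mhz: float, core_mhz: float, bus_width_bits: int = BUS_WIDTH_BITS
) -> float:
    """Bytes of peak memory bandwidth available per core clock cycle."""
    return bandwidth_gb_s(effective_mem_mhz, bus_width_bits) * 1e9 / (core_mhz * 1e6)


if __name__ == "__main__":
    # Core and effective memory clocks (MHz) taken from the text above.
    for label, core, mem in [("Stock", 300, 550), ("Overclocked", 550, 575)]:
        print(
            f"{label}: {bandwidth_gb_s(mem):.1f} GB/s, "
            f"{bytes_per_core_clock(mem, core):.1f} bytes per core clock"
        )
```

Peak bandwidth is only a ceiling; real games use less of it, so treat these figures as an illustration of the trend rather than a performance prediction.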

The Test System

Now we begin the benchmark portion of this article, but first let me show you our test system.

- Albatron PX915P/G Pro (PCI-Express 16x enabled)

- 1024 MB DDR400

- GeForce 6600/6600GT/6800GT/Radeon X600

- Pentium 4 class 3.6 GHz (Socket 775)

- Windows XP Professional

- DirectX 9.0c

- Detonator 61.77 for GeForce cards, 66.81 for the 6600 GT

- Radeon Catalyst 4.8 for ATI cards

- Latest reference chipset and graphics card drivers

- RivaTuner 2.0 (tweak utility)

Benchmark Software Suite:

- Far Cry 1.2 (SM3 patched, Guru3D config & timedemo)

- Splinter Cell (Guru3D custom timedemo)

- Return to Castle Wolfenstein - Checkpoint DM60

- 3DMark 2003

- AquaMark 3

- Unreal Tournament 2004 (Guru3D custom timedemo)

- Doom 3

- Halo: Combat Evolved

Unless noted otherwise, we have disabled the anisotropic filtering setting that the ForceWare drivers switch on by themselves when you enable AF/AA, to make the benchmarks as objective as possible for future comparisons.

The numbers (FPS = Frames Per Second)