Page 7 - The move to DX10

The move to DirectX 10

Graphics cards are all about programmability, and thus shaders, these days, yet you'll notice that with today's product we won't be talking about separate pixel and vertex shaders much anymore. With the move to DirectX 10 we now have a new technology called unified shader technology, and graphics hardware will adapt to that model. It's actually very promising. DirectX 10 ships with the first public release of Windows Vista, which is also its biggest downside: you need to have Windows Vista. It will definitely change the way software developers make games for Windows and will very likely benefit us gamers in terms of better visuals and better overall performance.

Hilbert, pal ... What's this shader thingy you so often talk about?

If you recently attempted to purchase a video card, you will no doubt have heard the terms "Vertex Shader" and "Pixel Shader" and, with the new DirectX 10, "Geometry Shader". In today's reviews, reviewers tend to assume that the audience knows everything. I realized that some of you do not have a clue what we're talking about. Sorry, that happens when you are deep into the matter all the time. Let's do a quick course on what happens inside your graphics card for it to be able to poop out all these millions of colored pixels.

What do we need to render a three-dimensional object as 2D on your monitor? We start off by building some sort of structure that has a surface, and that surface is built from triangles. Why triangles? They are quick to calculate. How is each triangle processed? Each triangle has to be transformed according to its position and orientation relative to the viewer. Each of the three vertices that make up the triangle is transformed to its proper view space position. The next step is to light the triangle by taking the transformed vertices and applying a lighting calculation for every light defined in the scene. Lastly, the triangle needs to be projected to the screen in order to rasterize it. During rasterization the triangle is shaded and textured.
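To make those three steps a little more tangible, here is a minimal CPU-side sketch in Python of transform, light and project for a single triangle. It is only an illustration under stated assumptions, not how any real GPU or driver does it: all function names are made up for this example, the camera is assumed to look down the +z axis, lighting is a plain diffuse (Lambert) term with one flat normal for the whole triangle, and the view matrix is a simple translation so the normal needs no transformation of its own.

```python
import math

def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def translate(tx, ty, tz):
    """Hypothetical helper: a view matrix that only moves the scene."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def to_view_space(vertex, view_matrix):
    """Step 1: transform a vertex to its position relative to the viewer."""
    x, y, z = vertex
    vx, vy, vz, _ = mat_vec(view_matrix, [x, y, z, 1.0])
    return (vx, vy, vz)

def diffuse_light(normal, direction_to_light):
    """Step 2: Lambert term, brightest when the surface faces the light."""
    return max(0.0, dot(normalize(normal), normalize(direction_to_light)))

def project_to_screen(view_pos, width, height, focal_length=1.0):
    """Step 3: perspective divide, then map [-1, 1] to pixel coordinates."""
    x, y, z = view_pos  # assumes z > 0, i.e. the vertex is in front of the camera
    ndc_x = focal_length * x / z
    ndc_y = focal_length * y / z
    return ((ndc_x + 1.0) * 0.5 * width, (1.0 - ndc_y) * 0.5 * height)

def process_triangle(triangle, normal, view_matrix, lights, width=640, height=480):
    """Run all three steps for each of the triangle's three vertices."""
    processed = []
    for vertex in triangle:
        view_pos = to_view_space(vertex, view_matrix)
        # Sum the contribution of every light in the scene, clamped to 1.0.
        brightness = min(1.0, sum(diffuse_light(normal, l) for l in lights))
        processed.append((project_to_screen(view_pos, width, height), brightness))
    return processed

triangle = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
result = process_triangle(
    triangle,
    normal=(0.0, 0.0, -1.0),          # triangle faces the camera
    view_matrix=translate(0, 0, 5),   # push the scene 5 units in front of the viewer
    lights=[(0.0, 0.0, -1.0)],        # one light, located towards the camera
)
for screen_pos, brightness in result:
    print(screen_pos, brightness)
```

With these made-up inputs the three vertices land at roughly (256, 240), (384, 240) and (320, 192) on a 640x480 screen, each fully lit. Rasterization, the part that fills in the pixels between those three points, is left out to keep the sketch short.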

Graphics processors like the GeForce series are able to perform a very large number of these tasks. The first generation was able to draw shaded and textured triangles in hardware. The CPU still had the burden of feeding the graphics processor with transformed and lit vertices, triangle gradients for shading and texturing, etc. Integrating the triangle setup into the chip logic was the next step, and finally even transformation and lighting (TnL) became possible in hardware, reducing the CPU load considerably. The big disadvantage was that a game programmer had no direct (i.e. program-driven) control over transformation, lighting and pixel rendering, because all the calculation models were fixed on the chip.

And now, we finally get to the stage where we can explain shaders as that's when they got introduced.

A shader is basically nothing more than a relatively small program executed on the graphics processor to control vertex, pixel or geometry processing, and it has become hugely important in today's visual gaming experience.

Vertex and Pixel shaders allow developers to code customized transformation and lighting calculations as well as pixel coloring or all-new geometry functionality on the fly, (post)processed in the GPU. With last-gen DirectX 9 cards there was separate, dedicated core logic in the GPU for pixel and vertex code execution: dedicated pixel shader processors and dedicated vertex processors. With DirectX 10 something changed significantly though. Not only were Geometry shaders introduced, but the entire core logic changed to a unified shader architecture, a more efficient approach that allows any kind of shader to run on any of the stream processors.
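Why would a unified pool be more efficient? A toy model in Python helps to see it. Everything here is an assumption made for illustration: a hypothetical chip with 32 processors (split 8 vertex plus 24 pixel in the dedicated design), made-up workload numbers, and a simplification in which every processor finishes exactly one work item per cycle with no scheduling overhead. No real GPU is this simple.

```python
import math

def cycles_dedicated(vertex_work, pixel_work, vertex_units, pixel_units):
    """Dedicated design: each kind of work can only run on its own kind of unit,
    so the frame is done when the slower of the two groups is done."""
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))

def cycles_unified(vertex_work, pixel_work, total_units):
    """Unified design: any unit can take any kind of shader work."""
    return math.ceil((vertex_work + pixel_work) / total_units)

# Hypothetical workloads as (vertex work items, pixel work items).
workloads = {
    "pixel-heavy": (100, 3000),
    "vertex-heavy": (2000, 400),
    "balanced": (800, 2400),
}

for name, (v, p) in workloads.items():
    print(name, cycles_dedicated(v, p, 8, 24), cycles_unified(v, p, 32))
# pixel-heavy 125 97
# vertex-heavy 250 75
# balanced 100 100
```

In this model the dedicated design only keeps up when the workload happens to match its fixed 8:24 split. As soon as a scene leans heavily towards vertex or pixel work, one group of units sits idle while the other is swamped, and the unified pool comes out ahead.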

Team Green NVIDIA's GeForce 8800 GTX and Ultra have 128 stream processors, whereas Team Red ATI's HD 2900 XT has 320 of them. Now before you think that ATI is the clear winner here ... NVIDIA's shader domain is clocked heaps faster, so in the long run it all boils down to the same thing, yet the approach to achieve the goal is different.

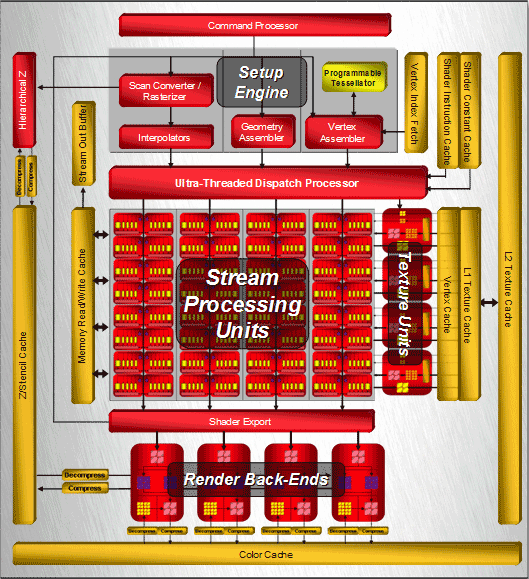

Above you can see the shader processors I just mentioned, organized in a nice diagram. This is actually the setup of the HD 2900 XT GPU. It's very unlikely that you will understand what I'm about to show you, but allow me to show you an example of a Pixel and a Vertex shader: a small piece of code that is executed on the stream (shader) processors inside your GPU:

Example of a Pixel Shader instruction

    #include "common.h"

    struct v2p
    {
        float2 tc0 : TEXCOORD0; // base
        half4  c   : COLOR0;    // diffuse
    };

    // Pixel
    half4 main ( v2p I ) : COLOR
    {
        return I.c * tex2D (s_base, I.tc0);
    }

Example of a Vertex Shader instruction

    #include "common.h"

    struct vv
    {
        float4 P  : POSITION;
        float2 tc : TEXCOORD0;
        float4 c  : COLOR0;
    };

    struct vf
    {
        float4 hpos : POSITION;
        float2 tc   : TEXCOORD0;
        float4 c    : COLOR0;
    };

    vf main (vv v)
    {
        vf o;
        o.hpos = mul (m_WVP, v.P); // xform, input in world coords
        o.tc   = v.tc;             // copy tc
        o.c    = v.c;              // copy color
        return o;
    }

Now, this code itself is not at all interesting and I understand it may mean absolutely nothing to you, but I just wanted to show you, in some sort of generic, easy-to-understand manner, what a shader is and what it involves.
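For the curious, the two shaders can be mimicked on the CPU in a few lines of Python. This is a rough sketch of what they compute, not how the GPU executes them. The names `vertex_main`, `pixel_main`, `mul` and `tex2d` are stand-ins written for this example, the texture lookup is a plain nearest-texel fetch (real hardware filters and wraps), and the matrix and texture values are made up.

```python
def mul(matrix, vector):
    """Stand-in for the shader's mul(): 4x4 matrix times 4-component vector."""
    return tuple(sum(matrix[r][c] * vector[c] for c in range(4)) for r in range(4))

def vertex_main(m_wvp, position, tc, color):
    """Mirrors the vertex shader: transform the position, pass the rest through."""
    return {"hpos": mul(m_wvp, position), "tc": tc, "c": color}

def tex2d(texture, tc):
    """Stand-in for tex2D(): nearest-texel lookup in a list of rows of RGBA tuples.
    Assumes texture coordinates in the range [0, 1]."""
    height, width = len(texture), len(texture[0])
    u, v = tc
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]

def pixel_main(texture, tc0, color):
    """Mirrors the pixel shader: diffuse color multiplied by the base texture."""
    texel = tex2d(texture, tc0)
    return tuple(c * t for c, t in zip(color, texel))

# Made-up inputs: a matrix that doubles x, y and z, and a 2x2 RGBA texture.
m_wvp = [[2, 0, 0, 0], [0, 2, 0, 0], [0, 0, 2, 0], [0, 0, 0, 1]]
texture = [
    [(1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0)],
    [(0.0, 0.0, 1.0, 1.0), (1.0, 1.0, 1.0, 1.0)],
]

vertex_out = vertex_main(m_wvp, (1.0, 2.0, 3.0, 1.0), (0.75, 0.25), (0.5, 0.5, 0.5, 1.0))
pixel_out = pixel_main(texture, vertex_out["tc"], vertex_out["c"])
print(vertex_out["hpos"])  # (2.0, 4.0, 6.0, 1.0)
print(pixel_out)           # (0.0, 0.5, 0.0, 1.0)
```

The vertex function runs once per vertex and the pixel function once per pixel, which is exactly the kind of small, endlessly repeated job those stream processors are built for.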

Okay, with that said, let's go back to the review, as we can now explain DirectX 10 better.