AMD recently made a significant announcement at its Data Center and AI Technology Premiere event in San Francisco, California, introducing a range of new products.

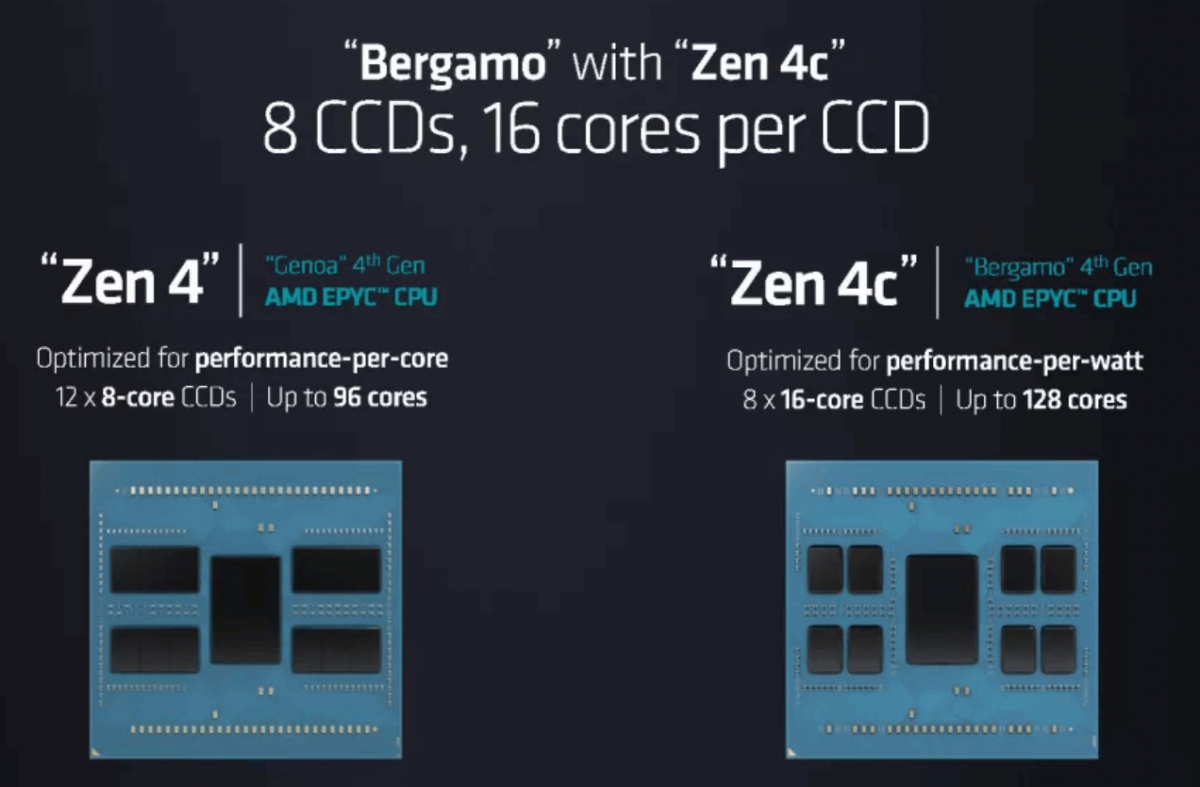

At the "Data Center and AI Technology Premiere" event, AMD unveiled two additions to its 4th-generation EPYC lineup: the "EPYC 97X4" series for cloud servers and the "EPYC 9004 with 3D V-Cache" series for technical computing. The "EPYC 97X4" series, codenamed "Bergamo," uses the Zen 4c core architecture, which raises the maximum core count from the 96 cores/192 threads of the standard EPYC 9004 series to 128 cores/256 threads. AMD claims these processors deliver up to 3.7 times the throughput and up to 2.7 times the energy efficiency of cloud servers based on Ampere processors. AMD also says the number of containers per server can be increased up to threefold.

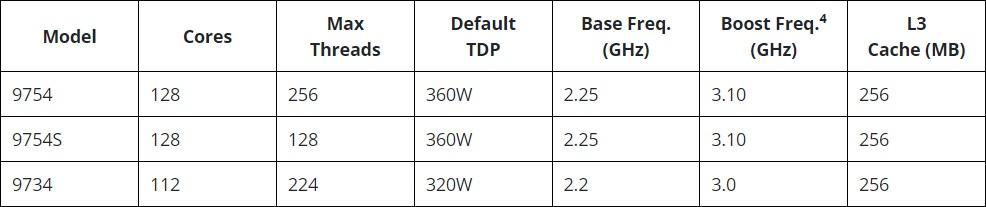

- The "EPYC 97X4" series comprises three models: "EPYC 9754" with 128 cores/256 threads, "EPYC 9754S" with 128 cores/128 threads, and "EPYC 9734" with 112 cores/224 threads.

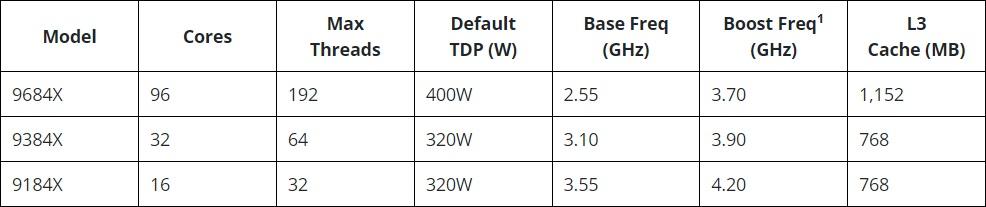

Another addition is the "EPYC 9004 with 3D V-Cache" series, codenamed "Genoa-X," aimed at technical computing. These processors use the Zen 4 core architecture and stack additional cache on top of the CPU dies, for up to 1,152MB of L3 cache.

Compared with a dual-socket Intel Xeon Platinum 8480+ server, AMD claims the "EPYC 9004 with 3D V-Cache" series delivers approximately 2.5 times the performance in computational fluid dynamics and up to 2.1 times the performance in finite element analysis. The series includes three models: "EPYC 9684X" with 96 cores/192 threads and 1,152MB of L3 cache, "EPYC 9384X" with 32 cores/64 threads and 768MB of L3 cache, and "EPYC 9184X" with 16 cores/32 threads and 768MB of L3 cache.
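Since the two smaller Genoa-X models keep 768MB of L3 with far fewer cores, a simple ratio shows how the cache-to-core balance shifts across the lineup. This is a minimal sketch using only the figures listed above; the names `GENOA_X` and `l3_per_core_mb` are hypothetical, and the result is an average ratio, not a description of how the cache is actually partitioned on the chip.

```python
# Core counts and L3 capacities for the "EPYC 9004 with 3D V-Cache" (Genoa-X)
# models, copied from the announcement. Names here are hypothetical.
GENOA_X = {
    "EPYC 9684X": {"cores": 96, "l3_mb": 1152},
    "EPYC 9384X": {"cores": 32, "l3_mb": 768},
    "EPYC 9184X": {"cores": 16, "l3_mb": 768},
}


def l3_per_core_mb(cores: int, l3_mb: int) -> float:
    """Average L3 capacity per core in MB (a ratio, not a hardware partition)."""
    return l3_mb / cores


if __name__ == "__main__":
    for name, spec in GENOA_X.items():
        ratio = l3_per_core_mb(spec["cores"], spec["l3_mb"])
        print(f"{name}: {ratio:.0f} MB of L3 per core")
```

On these figures the 9184X works out to 48MB of L3 per core, four times the 9684X's 12MB.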

The 128-core EPYC Bergamo processors are what AMD calls the industry's first x86 cloud-native CPUs. They use an optimized Zen 4c core design that reduces the area each core occupies. Notable competitors in this segment include Intel's 144-core Sierra Forest chips, which use Efficiency cores (E-cores) in Intel's Xeon data center lineup, and Ampere's 192-core AmpereOne processors. Google and Microsoft are also developing or deploying their own custom silicon.

Bergamo and these competing designs are all tailored to maximize power efficiency for highly parallel, latency-tolerant workloads such as high-density virtual machine deployments, data analytics, and front-end web services. They offer higher core counts than standard data center processors while running at lower frequencies and lower per-core power.

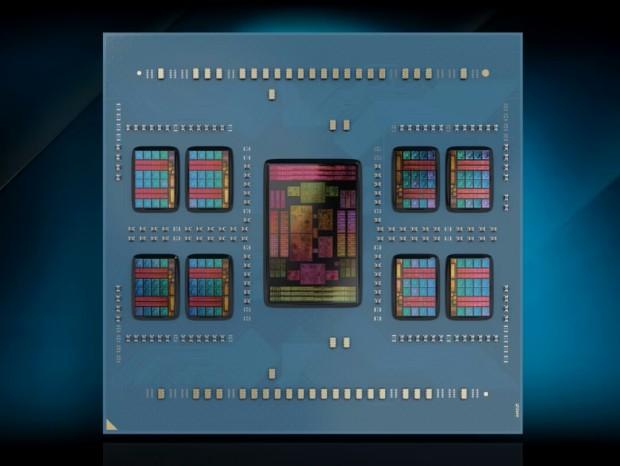

The three Bergamo models (EPYC 9754, EPYC 9754S, and EPYC 9734) use the same SP5 socket as the standard 96-core EPYC Genoa processors, so they can be used in existing Genoa server platforms. Like Genoa, Bergamo supports 12 channels of DDR5-4800 memory. AMD builds these processors by pairing Zen 4c core chiplets with the existing 'Floyd' central I/O die, which connects the compute chiplets to memory and I/O and is fabricated on an older process node.

The specifications for the EPYC Bergamo processors are as follows:

- EPYC 9754: 128 cores / 256 threads, base/boost frequency of 2.25/3.1 GHz, default TDP of 360W, and 256MB L3 cache.

- EPYC 9754S: 128 cores / 128 threads, base/boost frequency of 2.25/3.1 GHz, default TDP of 360W, and 256MB L3 cache.

- EPYC 9734: 112 cores / 224 threads, base/boost frequency of 2.2/3.0 GHz, default TDP of 320W, and 256MB L3 cache.
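The spec list above can be turned into a few derived figures. The following minimal sketch, using a hypothetical `EpycSku` record, checks whether SMT is enabled (two threads per core) and divides default TDP and L3 by core count. TDP is a rated power envelope, so watts per core here is only a rough ratio, not measured consumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpycSku:
    """Hypothetical record holding the published figures for one EPYC model."""
    name: str
    cores: int
    threads: int
    base_ghz: float
    boost_ghz: float
    tdp_w: int  # default TDP in watts
    l3_mb: int

    @property
    def smt_enabled(self) -> bool:
        # Two hardware threads per core means SMT is on; one per core means it is off.
        return self.threads == 2 * self.cores

    @property
    def watts_per_core(self) -> float:
        # TDP is a rated envelope, so this ratio is indicative only.
        return self.tdp_w / self.cores


# Figures copied from the Bergamo specification list.
BERGAMO = [
    EpycSku("EPYC 9754", 128, 256, 2.25, 3.1, 360, 256),
    EpycSku("EPYC 9754S", 128, 128, 2.25, 3.1, 360, 256),
    EpycSku("EPYC 9734", 112, 224, 2.2, 3.0, 320, 256),
]

if __name__ == "__main__":
    for sku in BERGAMO:
        smt = "on" if sku.smt_enabled else "off"
        print(f"{sku.name}: SMT {smt}, {sku.watts_per_core:.2f} W/core, "
              f"{sku.l3_mb / sku.cores:.2f} MB L3/core")
```

On these numbers the 9754S differs from the 9754 only in having SMT disabled, while the 9734 ends up with slightly more TDP and L3 per core because the same 256MB of L3 is spread over fewer cores.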

AMD's claimed energy-efficiency gain of up to 2.7x for Bergamo is measured against cloud servers based on Ampere processors. A Zen 4c core, including its L2 cache, occupies 2.48mm², which is 35% smaller than a standard Zen 4 core.
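As a rough consistency check, and assuming the 35% figure is rounded, the 2.48mm² Zen 4c area implies a standard Zen 4 footprint of about 3.8mm²:

$$
A_{\text{Zen 4}} \approx \frac{A_{\text{Zen 4c}}}{1 - 0.35} = \frac{2.48\ \text{mm}^2}{0.65} \approx 3.82\ \text{mm}^2
$$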

AMD also announced the "Instinct MI300" series of AI accelerators. The lineup comprises two models: the GPU-based "Instinct MI300X" and the APU-based "Instinct MI300A," which integrates a CPU.

The "Instinct MI300X" uses the latest CDNA 3 GPU architecture and offers up to 192GB of HBM3 memory. Sample shipments of this model are expected to begin in the third quarter of 2023, while the "Instinct MI300A" has already begun sampling.
