The AI Image Generation Benchmark was designed in collaboration with industry experts to produce fair and consistent results across all supported hardware. It is part of UL Procyon's response to the growing diversity of AI hardware, which now ranges from dedicated AI accelerators in compact PCs to traditional CPUs and integrated GPUs, all capable of running AI Inference workloads. The arrival of more AI-capable processors has significantly widened the performance range of consumer AI hardware. As with ray tracing in gaming benchmarks, measuring AI Inference performance now requires several benchmarks, each suited to a different part of that range. With the addition of the AI Image Generation Benchmark, UL Procyon is renaming the existing AI Inference Benchmark to the AI Computer Vision Benchmark, giving a clearer, segmented framework for evaluating AI Inference performance across different hardware.

The AI Image Generation Benchmark

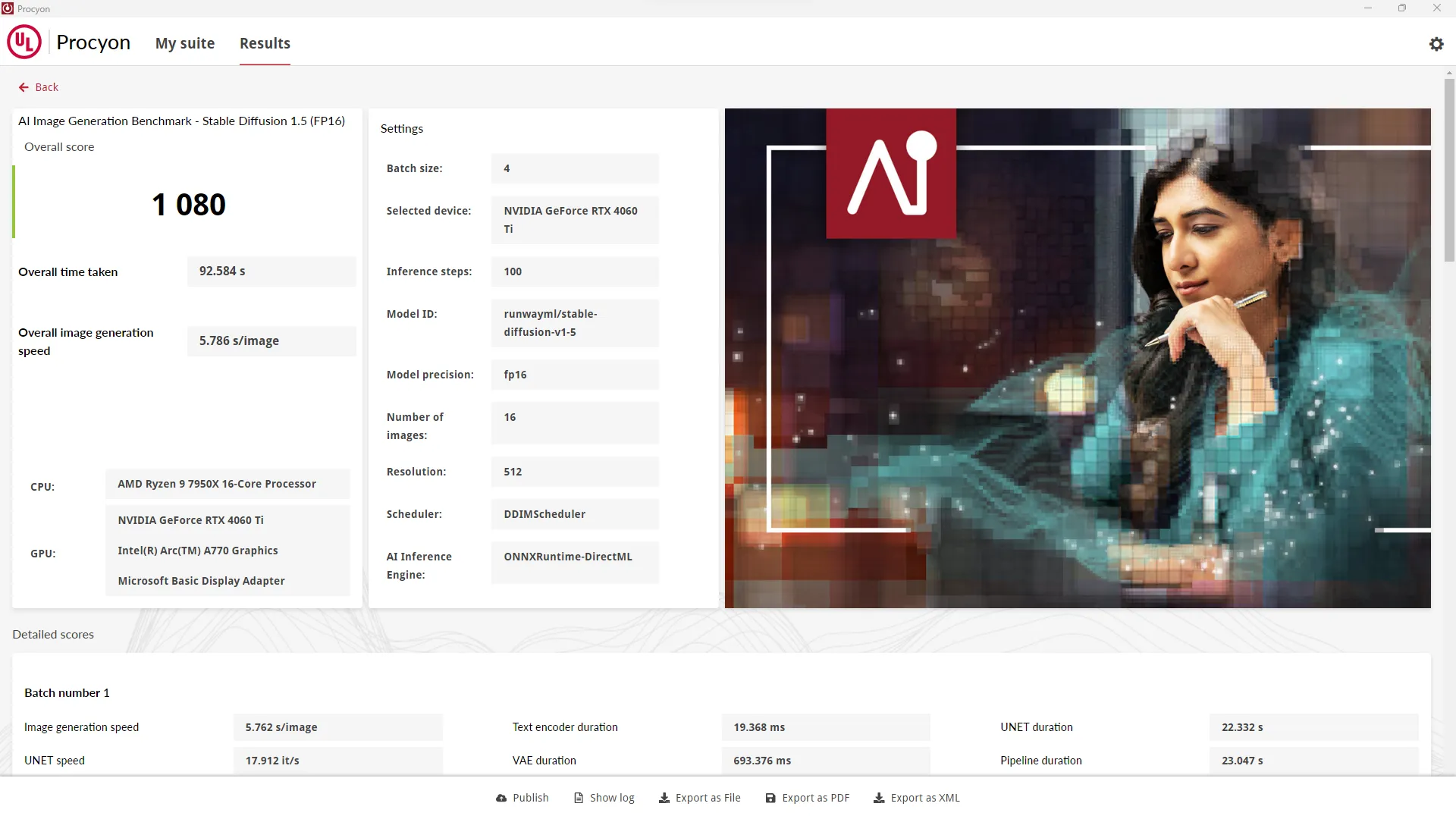

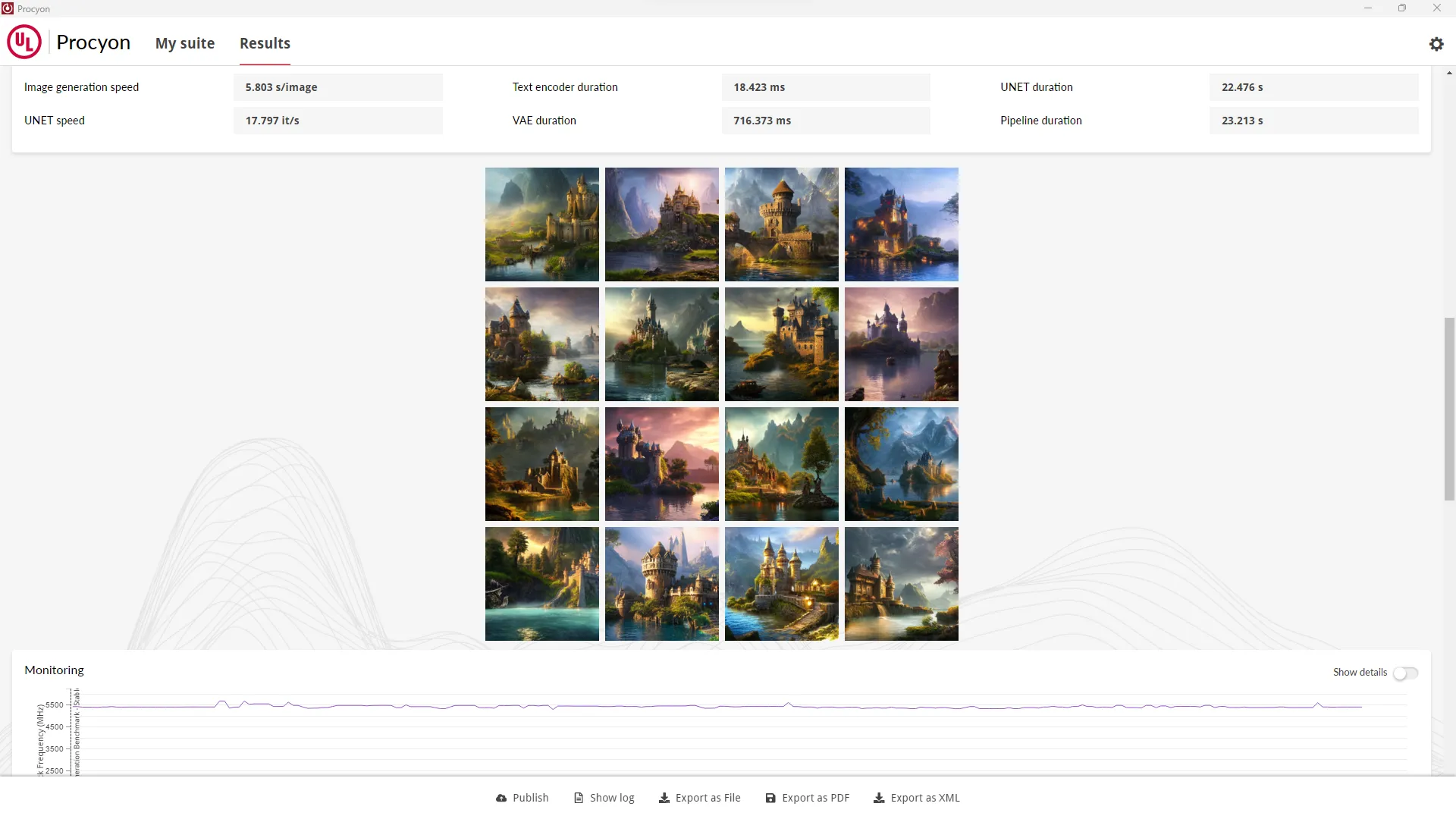

Built around the Stable Diffusion AI model, the AI Image Generation Benchmark is considerably heavier than the computer vision benchmark and is designed for measuring and comparing the AI Inference performance of modern discrete GPUs. To better measure the performance of both mid-range and high-end discrete graphics cards, this benchmark contains two tests built using different versions of the Stable Diffusion model, and we hope to add more tests in the future to support other performance categories.

The Stable Diffusion XL (FP16) test is our most demanding AI inference workload, with only the latest high-end GPUs meeting the minimum requirements to run it. For mid-range discrete GPUs, the Stable Diffusion 1.5 (FP16) test is our recommended test.

Test performance across multiple AI Inference Engines

As with our AI Computer Vision Benchmark, you can freely switch between several leading inference engines. This lets you compare performance differences between engines, compare hardware using the same engine, or compare the best-case AI Inference performance of each device. By default, the benchmark selects the optimal inference engine for the system’s hardware.

Currently, the AI Image Generation Benchmark supports the following inference engines. We plan to add support for more inference engines in the future to provide optimal performance for all supported AI hardware.

- ONNX Runtime with DirectML

- NVIDIA TensorRT

- Intel OpenVINO
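
To make the idea of a default engine concrete, here is a minimal sketch of how a default could be picked from the three engines listed above. It is purely illustrative: the benchmark's real selection logic is not described in this announcement, and the vendor-to-engine mapping, the `default_engine` function, and the vendor strings are all assumptions introduced for this example.

```python
# Hypothetical sketch only: this is NOT UL Procyon's actual selection logic.

# Assumed preference: use the vendor's own runtime when one is listed.
# TensorRT targets NVIDIA GPUs and OpenVINO targets Intel hardware.
PREFERRED_ENGINE = {
    "nvidia": "NVIDIA TensorRT",
    "intel": "Intel OpenVINO",
}

# DirectML runs on DirectX 12 capable GPUs from any vendor, so it is
# used here as the assumed fallback.
FALLBACK_ENGINE = "ONNX Runtime with DirectML"


def default_engine(gpu_vendor: str) -> str:
    """Return the assumed default inference engine for a GPU vendor name."""
    vendor = gpu_vendor.strip().lower()
    return PREFERRED_ENGINE.get(vendor, FALLBACK_ENGINE)


if __name__ == "__main__":
    for vendor in ("NVIDIA", "Intel", "AMD"):
        print(f"{vendor}: {default_engine(vendor)}")
```

In this sketch, any vendor without a dedicated runtime falls back to DirectML. Since the benchmark lets you switch engines freely, a default like this would only be a starting point for comparisons.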

Procyon Benchmarking Suite

The UL Procyon benchmark suite offers flexible licensing, letting you choose the benchmarks that best meet your needs. You can buy just one benchmark or add more in any combination. The software will be released this coming Monday, the 25th.

Find out more about UL Procyon benchmarks.