AMD introduced the MI300 series in 2023 in two models: the MI300X, a GPU design, and the MI300A, an APU that combines CPU and GPU. Both launched with HBM3 memory, at capacities of 192GB and 128GB respectively. A CPU-centric MI300C variant has also been rumored. HBM3e offers a significant performance improvement over HBM3: SK Hynix's HBM3e modules reach a per-pin data rate of 9.2Gbps, compared with 6.4Gbps for HBM3 modules.
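To put the two per-pin rates in perspective, the short Python sketch below converts them into peak per-stack bandwidth. It assumes a 1024-bit interface per HBM stack, which is the standard HBM3 width but is not stated in the article; the function name `stack_bandwidth_gbs` is purely illustrative. The results are theoretical peaks, not measured figures.

```python
# Assumption (not from the article): each HBM stack has a 1024-bit interface.
BUS_WIDTH_BITS = 1024


def stack_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: per-pin rate (Gbit/s) x pins / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8


# Per-pin rates quoted in the article.
hbm3 = stack_bandwidth_gbs(6.4)
hbm3e = stack_bandwidth_gbs(9.2)
uplift = hbm3e / hbm3 - 1

print(f"HBM3:  {hbm3:.1f} GB/s per stack")
print(f"HBM3e: {hbm3e:.1f} GB/s per stack")
print(f"Uplift: {uplift:.0%}")
```

Under that assumption, the quoted rates work out to roughly 819.2 GB/s per stack for HBM3 and 1177.6 GB/s for HBM3e, an increase of about 44%.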

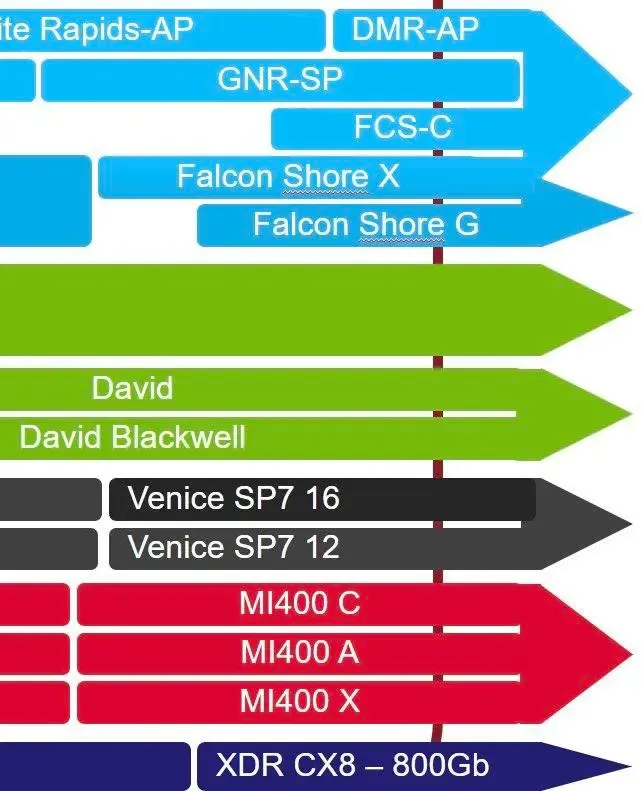

Elsewhere in the industry, AMD's competitor Nvidia has already introduced an HBM3e version of its GH200 Grace Hopper superchip, and its forthcoming B100 AI GPU is rumored to use HBM3e as well.

Details on the AMD Instinct MI400 accelerator remain scarce. Earlier posts from @Kepler_L2 indicated that the MI400 series is expected to include chips codenamed Mercury, Venus, and Earth. VideoCardz reported that AMD CEO Lisa Su confirmed during an August 2023 earnings call that MI400 development is underway. In September 2023, a further leak from YuuKi-AnS suggested that the MI400 series would come in three variants: X, A, and C.

Source: ithome