3D Vision Surround - 4 displays - Adaptive VSync

3D Vision Surround on a Single GPU

Whether or not NVIDIA would like to admit it, ATI's Eyefinity certainly changed the way we all deal with multi-monitor setups. As a result, the series 500 products gained support for gaming on three monitors, which is called NVIDIA Surround. The downside was that you needed at least two cards set up in SLI for this to work.

It would not have made any sense to leave this unaddressed with Kepler, so Surround and 3D Vision Surround are now supported with one card. That means you can game, even in 3D, on three monitors with just one GeForce GTX 680, as the new display engine can drive four monitors at once. Kepler's display engine fully supports HDMI 1.4a, 4K monitors (3840x2160) and multi-stream audio.

Using up to 4 displays

We just had a quick chat about 3D Vision Surround, but Kepler goes beyond three monitors. You can connect four monitors, use three for gaming and set up a fourth one on top (3+1) to check your email or do something else desktop related.

Summing up Display support

- 3D Vision Surround running off single GPU

- Single GPU support for 4 active displays

- DisplayPort 1.2, HDMI 1.4a high speed

- 4K monitor support - full 3840x2160 at 60 Hz
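
To put the 4K at 60 Hz figure in perspective, here is a rough back-of-the-envelope sketch in Python. It is an illustration, not something from NVIDIA: it assumes 24 bits per pixel, counts visible pixels only (blanking intervals are ignored, so real requirements are somewhat higher), and uses the nominal payload rates of the two link types. The function and table names are made up for this example.

```python
# Rough link-budget check for the display modes listed above.
# Assumptions (not from the article): 24 bits per pixel, active pixels only
# (blanking intervals ignored), and nominal payload rates for each link.

def active_pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed video data rate in Gbit/s, counting visible pixels only."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

# Nominal payload rates after line-coding overhead, in Gbit/s.
LINK_PAYLOAD_GBPS = {
    "DisplayPort 1.2 (HBR2, 4 lanes)": 17.28,  # 4 lanes x 5.4 Gbit/s x 8/10
    "HDMI 1.4a (340 MHz TMDS)": 8.16,          # 340 MHz x 24 bits per pixel
}

def links_that_fit(width, height, refresh_hz):
    """Names of the links whose payload rate covers the requested mode."""
    need = active_pixel_rate_gbps(width, height, refresh_hz)
    return [name for name, cap in LINK_PAYLOAD_GBPS.items() if cap >= need]

if __name__ == "__main__":
    need = active_pixel_rate_gbps(3840, 2160, 60)
    print(f"3840x2160 @ 60 Hz needs about {need:.2f} Gbit/s")
    print("Fits on:", links_that_fit(3840, 2160, 60))
```

Under these assumptions, full 3840x2160 at 60 Hz only fits on the DisplayPort 1.2 link, while the same resolution at 30 Hz fits on both.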

In combination with the new desktop management software you can also tweak your desktop output a little, for example by centering your Windows taskbar on the middle of the three monitors or by maximizing windows to a single display. With the software you can also set up and apply bezel correction. Interesting, though, is a new feature that allows you to use hotkeys to see game menus hidden by the bezel.

PCIe Gen 3

The series 600 cards from NVIDIA are all PCI Express Gen 3 ready. This update provides a 2x faster transfer rate than the previous generation, which delivers capabilities for next generation extreme gaming solutions. So compared to the current PCI Express slots, which are at Gen 2, PCI Express Gen 3 has twice the available bandwidth (32GB/s bi-directional for an x16 slot), improved efficiency and compatibility, and as such it will offer better performance for current and next gen PCI Express cards.

To make it even more understandable: going from PCIe Gen 2 to Gen 3 doubles the bandwidth available to the add-on cards installed, from 500MB/s per lane to roughly 1GB/s per lane in each direction. So a Gen 3 PCI Express x16 slot is capable of offering roughly 16GB/s (or 128Gbit/s) of bandwidth in each direction. That results in 32GB/s of bi-directional bandwidth.
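
The per-lane numbers can be derived from the transfer rate and line coding of each generation. The small Python sketch below does that arithmetic; it assumes the nominal specification figures (Gen 2 at 5 GT/s with 8b/10b coding, Gen 3 at 8 GT/s with 128b/130b coding) and ignores protocol overhead on top of the line coding. The function names are just for illustration.

```python
# Per-direction PCIe bandwidth from transfer rate and line coding.
# Assumption: nominal figures from the PCIe specifications
# (Gen 2: 5 GT/s with 8b/10b, Gen 3: 8 GT/s with 128b/130b);
# packet/protocol overhead above the line coding is ignored.

PCIE_GENERATIONS = {
    2: {"gigatransfers_per_s": 5.0, "payload_bits": 8, "coded_bits": 10},
    3: {"gigatransfers_per_s": 8.0, "payload_bits": 128, "coded_bits": 130},
}

def lane_bandwidth_gb_per_s(generation):
    """Usable bandwidth of one lane in one direction, in GB/s."""
    g = PCIE_GENERATIONS[generation]
    usable_gbit = g["gigatransfers_per_s"] * g["payload_bits"] / g["coded_bits"]
    return usable_gbit / 8  # bits to bytes

def slot_bandwidth_gb_per_s(generation, lanes=16, bidirectional=False):
    """Usable bandwidth of a whole slot, one-way or both directions summed."""
    one_way = lane_bandwidth_gb_per_s(generation) * lanes
    return one_way * 2 if bidirectional else one_way

if __name__ == "__main__":
    for gen in (2, 3):
        print(
            f"Gen {gen}: {lane_bandwidth_gb_per_s(gen) * 1000:.0f} MB/s per lane, "
            f"x16 = {slot_bandwidth_gb_per_s(gen):.2f} GB/s each way, "
            f"{slot_bandwidth_gb_per_s(gen, bidirectional=True):.2f} GB/s bi-directional"
        )
```

Strictly speaking Gen 3 lands a little under 1GB/s per lane (about 985MB/s), which is why the 16GB/s and 32GB/s figures are rounded values.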

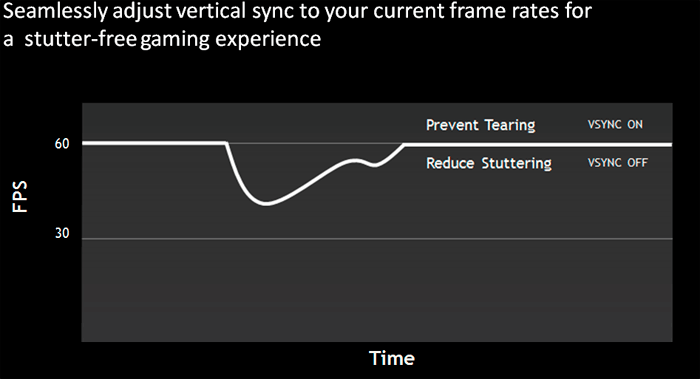

Adaptive Vsync

In the process of eliminating screen tearing NVIDIA will now implement adaptive VSYNC in their drivers.

VSync is the synchronization of your graphics card and monitor's abilities to redraw the screen a number of times each second (measured in FPS or Hz). It is an unfortunate fact that if you disable VSync, your graphics card and monitor will inevitably go out of sync whenever your FPS exceeds the refresh rate (e.g. 120 FPS on a 60Hz screen), and that causes screen tearing. The precise visual impact of tearing differs depending on just how much your graphics card and monitor go out of sync, but usually the higher your FPS and/or the faster your movements are in a game - such as rapidly turning around - the more noticeable it becomes.

Adaptive VSYNC is going to help you out on this matter. While normal VSYNC addresses the tearing issue when frame rates are high, it introduces another problem: stuttering. The stuttering occurs when frame rates drop below 60 frames per second on a 60Hz screen, causing VSYNC to fall back to 30 FPS and other whole-number fractions of 60 such as 20 or even 15 FPS.

To deal with this issue NVIDIA has applied a solution called Adaptive VSync. The feature will be integrated in the series 300 drivers, minimizing that stuttering effect. When the frame rate drops below 60 FPS, the Adaptive VSync technology automatically disables VSYNC, allowing frame rates to run at their natural rate and effectively reducing stutter. Once frame rates return to 60 FPS, Adaptive VSync turns VSYNC back on to reduce tearing.
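
The behaviour described above can be modelled in a few lines of Python. This is a simplified sketch, not NVIDIA's driver logic: it assumes a 60Hz monitor, classic double-buffered VSync (a frame that misses a refresh waits for the next one) and a steady render rate. The function names are hypothetical.

```python
import math

REFRESH_HZ = 60  # assumed monitor refresh rate, as in the examples above

def vsync_fps(render_fps, refresh_hz=REFRESH_HZ):
    """Displayed frame rate with classic double-buffered VSync.

    A frame that misses a refresh waits for the next one, so the displayed
    rate snaps down to refresh_hz / n for a whole number n.
    """
    refreshes_per_frame = max(1, math.ceil(refresh_hz / render_fps))
    return refresh_hz / refreshes_per_frame

def adaptive_vsync_fps(render_fps, refresh_hz=REFRESH_HZ):
    """Displayed frame rate with the adaptive behaviour described above."""
    if render_fps >= refresh_hz:
        return float(refresh_hz)  # VSync on: capped at the refresh rate
    return float(render_fps)      # VSync off: runs at its natural rate

if __name__ == "__main__":
    for fps in (120, 60, 59, 45, 25, 18):
        print(
            f"GPU renders {fps:>3} FPS -> VSync: {vsync_fps(fps):.0f} FPS, "
            f"Adaptive VSync: {adaptive_vsync_fps(fps):.0f} FPS"
        )
```

In this model a GPU that renders 59 FPS is shown at only 30 FPS with regular VSync, which is the sudden drop you perceive as stutter, while the adaptive mode simply shows 59 FPS (at the cost of possible tearing below the refresh rate).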

This feature will be optional in the upcoming GeForce series 300 drivers, not a default.

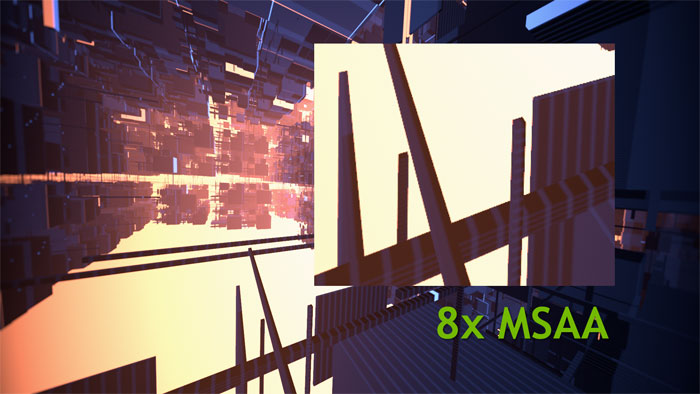

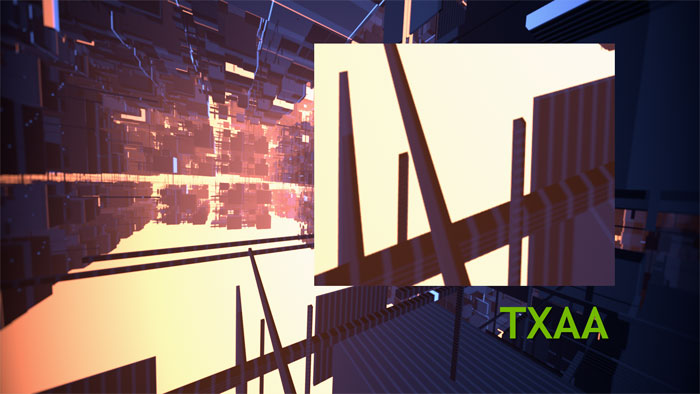

A new AA mode - TXAA

NVIDIA is to release yet another new AA mode that it cooked up. TXAA is a new style of anti-aliasing technique that makes the most of the graphics card's high texture performance. TXAA is a mix of hardware anti-aliasing, a custom CG style AA resolve and, in the case of 2x TXAA, an optional temporal component for better image quality. The new AA function will be available in two modes, TXAA 1 and TXAA 2.

- TXAA 1 should offer better than 8x MSAA visual quality with the performance hit of 2x MSAA.

- TXAA 2 offers even better image quality, but with the performance hit of 4x MSAA.

Unfortunately TXAA needs to be implemented in upcoming game titles, so the functionality will become available later this year. TXAA will also be supported on GeForce series 400 and 500 products.

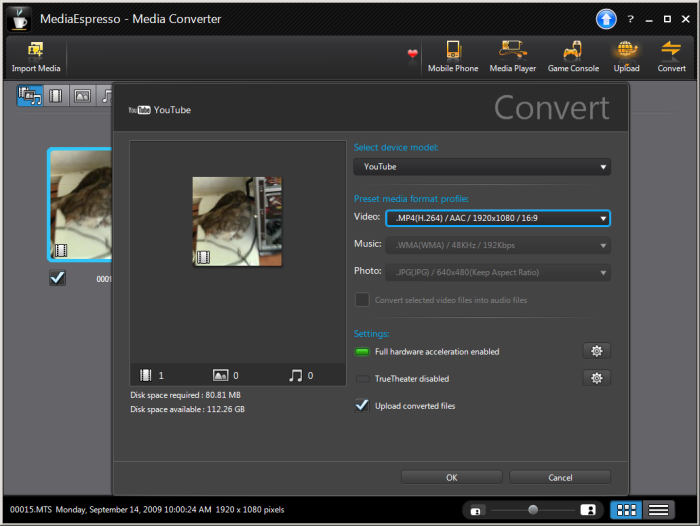

NVENC

NVENC is a dedicated graphics encoder engine that incorporates a new hardware based H.264 video encoder inside the GPU. In the past this functionality was handled by the shader processor cores, yet with the introduction of Kepler some extra core logic dedicated to this function has been added.

Nothing that new, you might think, but hardware 1080p encoding is now an option at 4 to 8x real-time speed. The supported format here follows the traditional Blu-ray standard, which is H.264 High Profile level 4.1 encoding, as well as multi-view video encoding for stereoscopic content. Actually, encoding at up to 4096x4096 is supported.

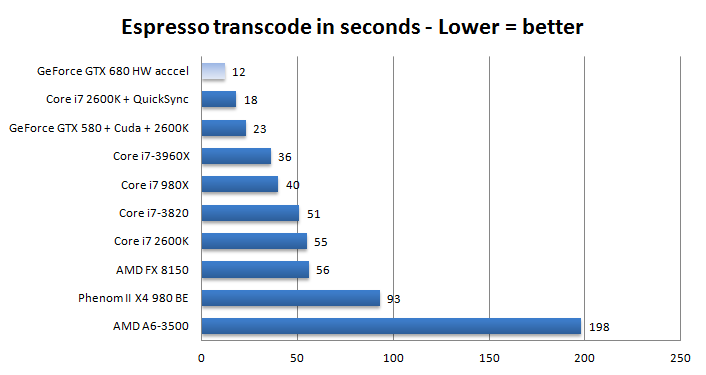

Above you can see Cyberlink Espresso with NVENC enabled and crunching our standard test. We tested and got some results.

Now it's not that your processor can't handle this, but the big benefit of encoding over the GPU is that it cuts power consumption, big-time. Try to imagine the possibilities here with transcoding and video editing, but also think in terms of videoconferencing and, heck why not, wireless display technology.

Now above, you can find the results of this test. In this test we transcode a 200 MB AVCHD 1920x1080i media file to MP4 binary (YouTube format). The measurement is the number of seconds needed for the process, thus lower = better.

This quick chart is an indication only, as results could differ a tiny bit between the different revisions of the Espresso software. The same testing methodology and video files have been used, of course. So where a Core i7 3960X takes 36 seconds to transcode the media file (raw over the processor), we can now have the same workload done in 12 seconds, as that was the result with NVENC. Very impressive: it's roughly twice as fast as a GTX 580 with CUDA transcoding and roughly a third faster than a Core i7 2600K with QuickSync enabled as hardware accelerator. The one thing we could not determine, however, was image quality. So further testing will need to show how we are doing quality wise.

And yes, I kept an eye on it: transcoding over the CPU resulted in a power draw of roughly 300 Watt for the entire PC, and just 190 Watt when run over the GeForce GTX 680 (entire PC measured). So NVENC not only saves heaps of time, it saves a lot of power as well.
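
To combine the time and power numbers into one figure, the quick Python sketch below estimates the energy used per transcode. It is only an indication: it assumes the 300 Watt reading belongs to the 36 second CPU run and the 190 Watt reading to the 12 second NVENC run, and that the power draw stayed constant for the duration of each run. The helper names are made up for this example.

```python
# Energy per transcode, using the measurements quoted above.
# Assumptions: 300 W goes with the 36 s CPU run, 190 W with the 12 s NVENC
# run, and the whole-PC power draw is constant during each run.

def speedup(baseline_seconds, new_seconds):
    """How many times faster the new run is than the baseline."""
    return baseline_seconds / new_seconds

def energy_wh(power_watts, seconds):
    """Energy in watt-hours for a constant power draw over a duration."""
    return power_watts * seconds / 3600

if __name__ == "__main__":
    cpu_wh = energy_wh(300, 36)
    nvenc_wh = energy_wh(190, 12)
    print(f"NVENC speedup over CPU: {speedup(36, 12):.1f}x")
    print(f"CPU transcode:   {cpu_wh:.2f} Wh")
    print(f"NVENC transcode: {nvenc_wh:.2f} Wh")
    print(f"Energy ratio:    {cpu_wh / nvenc_wh:.1f}x less with NVENC")
```

Under those assumptions the NVENC run finishes three times faster and uses well under a quarter of the energy for the same job.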