
Testing HD decoding performance

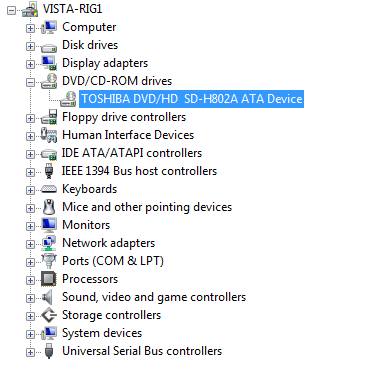

Here at Guru3D we have developed a new test. As most of you know, we were quite surprised after posting our HD 2900 XT review to discover that there was no UVD engine present, while practically everyone believed it had one. To prevent such situations, we decided to develop our own decoding test in which we can measure CPU utilization very precisely during HD playback.

Test system:

| Component | Details |
|---|---|
| Mainboard | nVIDIA nForce 680i SLI (eVGA) |
| Processor | Core 2 Duo X6800 Extreme (Conroe) |
| Graphics cards | Various DirectX 10 mid-range cards |
| Memory | 2048 MB (2x 1024 MB) Corsair Dominator DDR2 CAS4 @ 1142 MHz |
| Power supply unit | Enermax Galaxy 1000 Watt |
| Monitor | Dell 3007WFP (up to 2560x1600) |
| OS-related software | Windows Vista Business Edition (32-bit), DirectX 10, ATI Catalyst 8.38.9.1-rc2_48912, NVIDIA ForceWare 158.45 |

As explained earlier, the most important bitstream format for HD content right now is VC-1. Armed with both an HD DVD and a Blu-ray drive, we'll decode 140-second clips from two movies at a screen resolution of 1920x1080 (interlaced).

The clips are identical in every benchmark run. Every half second we measure and log the CPU load, so after the 140 seconds we have an average, minimum and maximum CPU load to examine. We'll focus on the average CPU load.
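For readers who want to try this kind of measurement themselves, here is a minimal Python sketch of the logging described above: poll the CPU load every half second over a 140-second clip (280 samples) and reduce the log to an average, minimum and maximum. This is not our actual test tool. `read_load` is a hypothetical hook for whatever system-wide CPU-load counter you have (the third-party psutil package's `psutil.cpu_percent(interval=None)` is one option), and the readings in the demo are made up.

```python
import time
from statistics import mean
from typing import Callable, Dict, List


def sample_count(duration_s: float, interval_s: float) -> int:
    """Number of samples a run of duration_s yields at one sample per interval_s."""
    return round(duration_s / interval_s)


def record_cpu_load(read_load: Callable[[], float],
                    duration_s: float = 140.0,
                    interval_s: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> List[float]:
    """Poll the (hypothetical) read_load() hook once per interval for the whole clip."""
    samples: List[float] = []
    for _ in range(sample_count(duration_s, interval_s)):
        samples.append(read_load())  # CPU load in percent at this instant
        sleep(interval_s)            # wait one interval (0.5 s by default)
    return samples


def summarize(samples: List[float]) -> Dict[str, float]:
    """Reduce the logged samples to average, minimum and maximum CPU load."""
    if not samples:
        raise ValueError("no samples recorded")
    return {"avg": mean(samples), "min": min(samples), "max": max(samples)}


if __name__ == "__main__":
    # Illustration only: a fake, made-up load source and a no-op sleep.
    fake_readings = iter([10.0, 20.0, 30.0] * 100)
    log = record_cpu_load(lambda: next(fake_readings), sleep=lambda s: None)
    print(len(log))  # 280 samples for a 140-second clip
    print(summarize(log))
```

Injecting `sleep` keeps the sampler testable without actually waiting 140 seconds; in a real run you would leave it at `time.sleep`.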

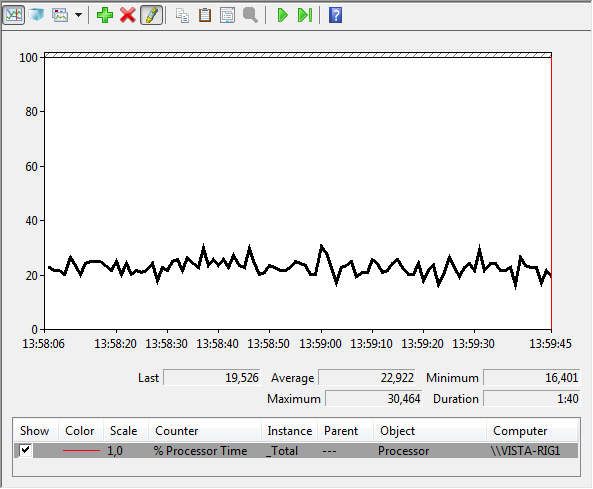

Let's see what that would look like:

Above we can see a GeForce 8600 GT decoding VC-1 content from HD DVD, in this case the movie 'The Bourne Supremacy'. The CPU load averages 23% overall, which honestly is not bad at all. It could be better, though, as NVIDIA's VP2 video engine can only partly cope with VC-1 content: VC-1 bitstream decoding is not supported. On the next slide you'll see a Radeon HD 2600 decoding VC-1 content; thanks to its new UVD engine, this card does decode the entropy-coded VC-1 bitstream.

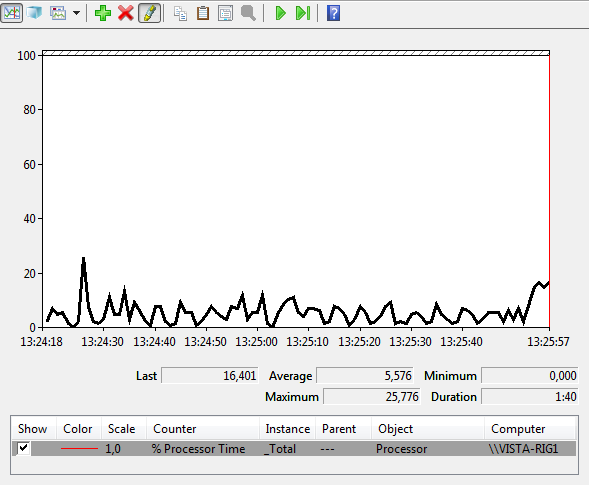

On the Blu-ray side we look at a VC-1 stream of the movie 'Blood Diamond', with an exceptionally good (low) CPU load. Let's measure with a couple of HD-ready cards and look at the outcome in some charts.

First and foremost, it is not a requirement to have your graphics card decode a given HD stream, though it is preferred. In the charts below you can, for example, see PowerDVD decoding an HD stream on the CPU. On both movies this results in the highest CPU utilization, which honestly is not even half bad. We did, however, use a 2.9 GHz Core 2 Duo X6800 processor in this system. That said, we tested this as well: switching to a cheaper E6600 results in only slightly higher utilization (we're talking 2-3% here).

VC-1 Decoding

Let's take a peek at how well things scale with more graphics cards included:

Above you can see the CPU utilization while the movie 'Blood Diamond' is being decoded. The number is the average CPU load in percent (lower = better).

Mind you, the number you see is the average CPU utilization over the 140 seconds of decoding; again, lower is better. As you can see, the HD 2400 and 2600 products decode HD VC-1 streams like they're on dope: only 5% CPU load! That's exceptionally good. Slightly worse, at 15% CPU load, we run into NVIDIA's GeForce series, and all the way to your right you can see the clip decoded on the CPU with PowerDVD, where we spot a CPU load of 30%.

Keep in mind, however, that this movie has an average bitrate of roughly 15 Mbit/s. Let's move on to the next chart.

Here we see the HD DVD movie 'The Bourne Supremacy'. Again we see exceptional performance from the HD 2400 and 2600 series, even though this HD bitstream was crazy: 25 Mbit/s and higher was no exception. Once more, the movie uses a VC-1 bitstream.
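To put these bitrates in perspective, here is a quick back-of-the-envelope calculation in Python of how much compressed data a 140-second clip contains. It assumes a constant bitrate, which real streams are not (and for 'The Bourne Supremacy' 25 Mbit/s is a floor for its peaks rather than an average); the 24 fps frame rate used for the per-frame figure is also an assumption, not something we measured.

```python
def clip_size_mb(bitrate_mbit_s: float, duration_s: float) -> float:
    """Megabytes (10^6 bytes) of video data in a clip at a constant bitrate."""
    return bitrate_mbit_s * duration_s / 8  # 8 bits per byte


def kb_per_frame(bitrate_mbit_s: float, fps: float) -> float:
    """Average kilobytes (10^3 bytes) of compressed data per frame."""
    return bitrate_mbit_s * 1000 / (8 * fps)


if __name__ == "__main__":
    # Bitrates quoted in this article; 24 fps is an ASSUMED film frame rate.
    for title, rate in [("Blood Diamond (average)", 15),
                        ("The Bourne Supremacy (peaks)", 25)]:
        print(f"{title}: {clip_size_mb(rate, 140):.1f} MB per 140 s clip, "
              f"~{kb_per_frame(rate, 24):.1f} KB per frame at 24 fps")
```

At 15 Mbit/s a 140-second clip works out to 262.5 MB of compressed video, and at 25 Mbit/s to 437.5 MB, all of which has to be entropy-decoded by either the CPU or the graphics card.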

As the results show, the HD 2900 XT clearly does not have UVD. The GeForce 8800 doesn't do bitstream decoding either, yet it seems to handle decoding a tad better than the HD 2900 XT. Looking at the 8600 series, we see that these cards keep your CPU nicely chilled, though not as well as ATI's UVD-equipped Radeon HD 2400 and 2600 cards, which are just amazing.

The reason behind this is VC-1: NVIDIA's new bitstream processor (BSP) does not support it, which is silly. It handles H.264 entropy decoding (CABAC/CAVLC) but not VC-1. As a result NVIDIA sits smack in the middle, and ATI is definitely the king of HD acceleration today.

So on the HD 2400 and 2600 series, the entire process, from bitstream processing, frequency transform and pixel prediction through deblocking, post-processing (up/downscaling, deinterlacing, color correction) and display, is managed by Avivo HD, and that includes content with a 40 Mbit/s bitrate. Lovely.
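As a rough mental model, the stages named above and the codec support described in this article can be written down as a small Python lookup. This is a simplified sketch, not either vendor's driver logic: the engine labels, `Stage` names and the `cpu_stages`/`gpu_stages` helpers are hypothetical, and treating every stage after bitstream processing as GPU-accelerated on the GeForce 8600 for VC-1 is an assumption (the article only says VP2 "partly" copes with VC-1).

```python
from enum import Enum
from typing import Dict, List, Set


class Stage(Enum):
    """Decode stages in the order listed in the article."""
    BITSTREAM = "bitstream processing (entropy decoding)"
    FREQUENCY_TRANSFORM = "frequency transform"
    PIXEL_PREDICTION = "pixel prediction"
    DEBLOCKING = "deblocking"
    POST_PROCESSING = "post-processing (scaling, deinterlacing, color correction)"
    DISPLAY = "display"


PIPELINE: List[Stage] = list(Stage)  # Enum iteration preserves definition order

# Codecs whose bitstream stage each dedicated engine handles, per this article.
BITSTREAM_SUPPORT: Dict[str, Set[str]] = {
    "ATI UVD (Radeon HD 2400/2600)": {"VC-1", "H.264"},
    "NVIDIA VP2/BSP (GeForce 8600)": {"H.264"},
}


def cpu_stages(engine: str, codec: str) -> List[Stage]:
    """Stages left to the CPU for a given engine and codec.

    ASSUMPTION: every stage after bitstream processing runs on the GPU,
    so the model only asks whether the engine handles the bitstream stage.
    """
    if codec in BITSTREAM_SUPPORT[engine]:
        return []
    return [Stage.BITSTREAM]


def gpu_stages(engine: str, codec: str) -> List[Stage]:
    """Complement of cpu_stages: the stages the graphics card handles."""
    on_cpu = cpu_stages(engine, codec)
    return [stage for stage in PIPELINE if stage not in on_cpu]


if __name__ == "__main__":
    for engine in BITSTREAM_SUPPORT:
        print(engine, "VC-1 stages on CPU:", [s.value for s in cpu_stages(engine, "VC-1")])
```

In this model the UVD cards leave nothing to the CPU for either codec, while the GeForce 8600 hands only the VC-1 bitstream stage back to the CPU, which is consistent with the 8600 landing between UVD and pure software decoding in the VC-1 charts.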

H.264 Decoding

Now let's have a peek at H.264 decoding. It took me a while to find a movie encoded in H.264 with a decent bitrate, but hey ... I ended up with the really lovely Pan's Labyrinth. It's an extremely demanding title in the sense that it's encoded with H.264 yet still shows a steep 20 Mbit/s; decoding that at 1920x1080 is really hard work for any piece of hardware.

Since I've only had this content available since yesterday, I could only include results for the GeForce 8600 and the Radeon HD 2400 and 2600 series. Peek and be amazed.

Yes, that chart looks messed up, but that's reality; the numbers again represent average CPU load. To your right you'll notice PowerDVD decoding the H.264 content on the CPU (a 2.9 GHz Core 2 Duo X6800, I might add), which results in 40% CPU utilization across both CPU cores.

Then, when you look at the Radeon HD 2400 XT and HD 2600 XT and the GeForce 8600 GT and GTS, you'll be shocked: the PC is literally doing nothing. The entire bitstream is decoded and post-processed on the GPUs. Amazing stuff.