Introduction

NVIDIA GeForce RTX 2070, 2080 and 2080 Ti

Going Hybrid Ray Tracing with NVIDIA's newest GPUs

Is Turing the start of the next 20 years of gaming graphics? You don't have to explain to a game developer what ray tracing is, as they have wanted to use it for the last ten years. And yes, that has got to be my opening line for this review. Hey all, and welcome to the first in a long series of RTX reviews.

Presentation embargo: 14 September, 6 am Pacific.

RTX can work with Vulkan: Gaijin Entertainment is developing an online game called Enlisted that runs on Vulkan and uses RTX, specifically for global illumination.

Double-precision ratio should be the same as last gen.

It has been leaked, announced and a long time coming, but finally we can show you our review of the new RTX series graphics processors from NVIDIA. In today's review we're peeking at the new technology that NVIDIA offers, and we can finally show you performance metrics for the new graphics cards.

Windows DXR (DirectX Raytracing) will be released with the Windows fall update.

A dozen or so RTX (ray tracing) games are in development, not 21, as the rest of the announced titles only support deep learning (DLSS).

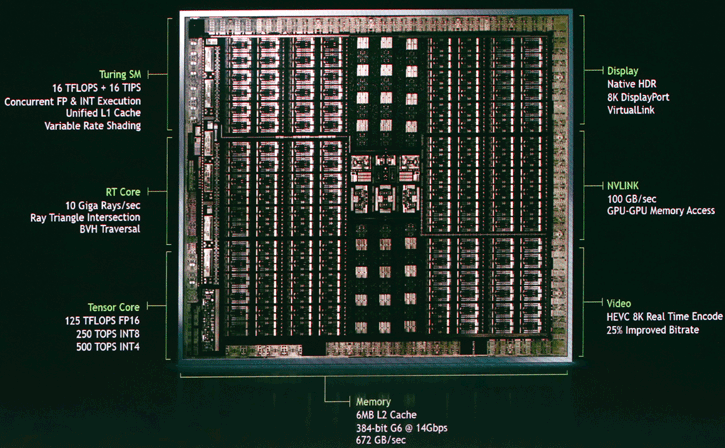

Architecture

At NVIDIA's tech day, Jonah Alben explained the new architecture, the Turing architecture.

New in Turing: a superscalar core architecture with concurrent FP and INT execution datapaths, an enhanced L1 cache, Tensor cores and RT cores. Integer work that used to share the CUDA cores' datapath is now offloaded onto a separate INT pipeline. Cache-wise, there is an L1 cache and a shared 6 MB L2 cache. The L1 bandwidth has been doubled compared to Pascal, making it more efficient.

Memory bandwidth is, of course, part of the story as well, courtesy of GDDR6; the briefing mentioned a factor of 1.3 to 1.6 in relation to memory clock speed.

---

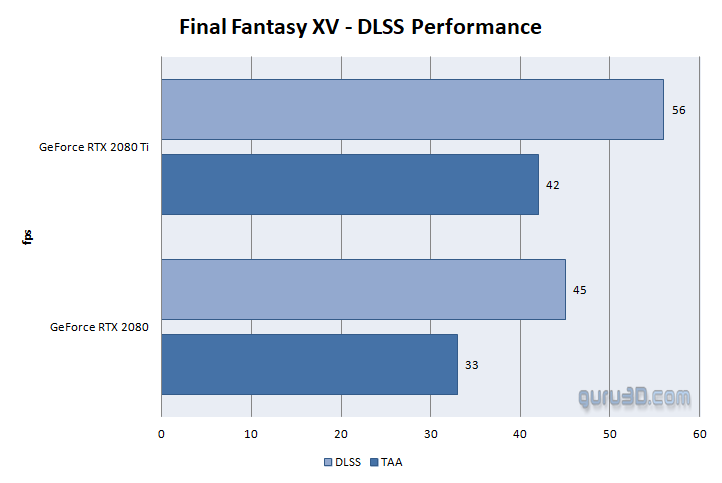

Final Fantasy XV - Deep learning Supersampling AA

One of the challenges with the RTX reviews is that the hardware is ready, but the software is not. We cannot test ray tracing or the new DLSS mode in games. However, we've received a pre-release build of Final Fantasy XV that supports DLSS, in which we can run a scripted benchmark in both TAA and DLSS mode. Since DLSS is pretty much offloaded to the Tensor cores, you do not have to enable AA on your regular rasterizer render engine (the shader processors); you are rendering the game without AA, which boosts performance. DLSS then kicks in on the Tensor cores at no performance hit. DLSS is an easy integration for developers; so far, developers have announced that 25 games will have DLSS support.

Two example videos

I took the liberty of recording two 80 Mbit/s videos in Ultra HD with the GeForce RTX 2080 Ti; these are not supplied by NVIDIA, we recorded them ourselves. Both run the exact same timedemo, one with TAA and the other configured with DLSS. Feel free to spot the differences. By the way, the stutters are an unfortunate side effect of the game engine and are not related to the cards or their AA modes. I enabled an FPS counter overlay, but unfortunately it refused to record. Have a peek though.

Example video with TAA

Example video with DLSS

You will probably be impressed, as the game is rendered at close to the same quality level, at no additional cost for DLSS.

EPIC infiltrator DLSS versus TAA demo

But wait, there's more. We've obtained the DLSS-supporting demo called Infiltrator from Epic. The scenes you are about to watch are based on Unreal Engine 4, and trust me when I say they look awesome, with some high-end, performance-heavy cinematic effects like depth of field, bloom and motion blur. Infiltrator was originally built to run at 1080p, and with all its settings turned on it is taxing at 4K resolution even on today's most powerful GPU. By integrating NVIDIA DLSS technology, which leverages the Tensor Cores of GeForce RTX, Infiltrator now runs at 4K resolution with consistently high frame rates, without stutters or other distractions.

So again, this runs at 3840x2160 (and is recorded in Ultra HD as well). The demo was recorded here at Guru3D on the very same test system we test the cards on; this is not a 'supplied' video but a real-time recording. I did two runs with an FPS tracker active, please observe them both. Also, try and see whether you can tell a difference between TAA and DLSS. I recorded both videos with VSYNC disabled, so we max out the GPU and performance.

In the video I enabled the overlay so you can observe frame times and FPS. Over the recorded run, DLSS averages over 80 FPS while TAA sits just below 60 FPS.
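As a quick sanity check on those numbers, the sketch below converts frame rates into frame times and computes the relative gain. The 80 and 60 FPS inputs are round figures taken from the sentence above ("over 80" and "just below 60"), not the exact averages of the runs, and the function names are purely illustrative.

```python
# Round figures from the text ("over 80 FPS" vs "just below 60 FPS");
# these are approximations, not the measured averages.
DLSS_FPS = 80.0
TAA_FPS = 60.0

def frame_time_ms(fps: float) -> float:
    """Average time spent rendering one frame, in milliseconds."""
    return 1000.0 / fps

def uplift_percent(new_fps: float, old_fps: float) -> float:
    """Relative frame-rate gain of new_fps over old_fps, in percent."""
    return (new_fps / old_fps - 1.0) * 100.0

if __name__ == "__main__":
    print(f"DLSS: {frame_time_ms(DLSS_FPS):.1f} ms per frame")
    print(f"TAA:  {frame_time_ms(TAA_FPS):.1f} ms per frame")
    print(f"DLSS uplift over TAA: {uplift_percent(DLSS_FPS, TAA_FPS):.0f}%")
```

With these inputs, a frame takes about 12.5 ms with DLSS versus about 16.7 ms with TAA, roughly a one-third higher frame rate.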

Example video with DLSS

Example video with TAA

Quite a powerful demonstration, right? Again, DLSS versus TAA in Ultra HD running the UE4 engine. I know that if my card could handle it, I'd opt for DLSS anytime.

Realtime Raytracing performance

One of the most frustrating things about this review series is that we cannot show you any game demos that support DirectX Raytracing, and for that matter anything with RT cores enabled. While a dozen or so games that will get support are underway, the simple fact remains that none of them have been released.

Ergo, we cannot show you the game performance of the RT cores. However, NVIDIA has provided the Star Wars Reflections Elevator Raytracing Demo, which I sneakily ran and recorded here in my lab at Ultra HD. The video below was recorded with a GeForce RTX 2080 Ti.

You do need to grasp a couple of things. Reflections is a cinematic demo created in a collaboration between Epic Games, ILMxLAB and NVIDIA to achieve film quality at 1080p on four Tesla V100s, each with 16 GB of framebuffer memory. This Turing graphics card runs it as a single GPU with 11 GB of graphics memory. In the video you'll notice that once I unlock the 24 FPS cap, we hover in the 30 to 35 FPS range. That was recorded at Ultra HD on a single RTX 2080 Ti card, my friends.

3DMark Dandia - Real-time Raytracing

We have a bit of an exclusive here. Meet the upcoming RT enabled 3DMark Dandia from UL (formerly Futuremark), this 3DMark tech demo shows real-time ray tracing in action. The demo is based on a new 3DMark benchmark test that they plan to release later this year. The demo will run on any hardware with drivers that support the Microsoft DirectX Ray Tracing API. The purpose of this demo is to show practical, real-time applications of the Microsoft DirectX Ray Tracing API.

The demo does not contain all the ray tracing effects that will be present in the final benchmark, nor is it fully optimized, so it cannot be used to measure or compare GPU performance in a meaningful way.

The demo is based on a work in progress.

---

Tensor core

The deep learning revolution: why Tensor cores in a GPU? When deep learning started out, it didn't work out particularly well. Once researchers moved it to GPUs, things started moving in the right direction, solving problems that normally took ages to compute. Deep learning makes for greater images, is what NVIDIA is claiming. Deep learning can now be applied to AA (anti-aliasing) in a regular game: instead of hand-written software code deciding how to get from frame A to frame B, a trained deep learning network does it. Such an AA mode can be offloaded to the Tensor cores, and that is how they get used in gaming.

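What are tensor operations? A regular FMA (fused multiply-add) unit takes one set of values in and puts one result out. Instead of performing FMAs one at a time, Turing's Tensor cores do a whole matrix of them at once. NVIDIA says this is super efficient, even compared to Volta.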
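To make the FMA-versus-tensor-operation difference a bit more concrete, here is a plain-Python sketch. It builds D = A x B + C on a 4x4 tile out of individual scalar FMAs, then expresses the same thing as one whole-tile operation. The 4x4 tile size follows NVIDIA's published description of the Volta tensor core; precision (FP16 inputs with FP32 accumulation, plus Turing's INT8/INT4 modes) and the real hardware scheduling are not modelled, and the function names are hypothetical.

```python
def fma(a, b, c):
    """One fused multiply-add, a * b + c: what a regular ALU does per step."""
    return a * b + c

def mma_by_fma(A, B, C):
    """D = A x B + C built from individual scalar FMAs; also returns how many were needed."""
    rows, inner, cols = len(A), len(B), len(B[0])
    D = [row[:] for row in C]  # start from the accumulator C
    fma_count = 0
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                D[i][j] = fma(A[i][k], B[k][j], D[i][j])
                fma_count += 1
    return D, fma_count

def mma_tile(A, B, C):
    """The same D = A x B + C expressed as one whole-tile operation, the
    granularity a tensor core works at (modelled here in plain Python)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) + C[i][j]
             for j in range(len(B[0]))]
            for i in range(len(A))]

if __name__ == "__main__":
    A = [[i + j for j in range(4)] for i in range(4)]
    B = [[1 if i == j else 0 for j in range(4)] for i in range(4)]  # identity matrix
    C = [[1] * 4 for _ in range(4)]
    D_scalar, n = mma_by_fma(A, B, C)
    assert D_scalar == mma_tile(A, B, C)
    print(f"One 4x4x4 tile = {n} scalar FMAs, issued as a single tensor operation")
```

Integer-valued matrices are used so both orderings give bit-identical results; with floating-point inputs the summation order, and therefore the rounding, can differ slightly.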

RT cores

Bringing ray tracing to gaming has been a dream for NVIDIA and, they claim, for developers as well. Roughly ten years ago NVIDIA first started working on solving the ray tracing challenge. Ray tracing uses a BVH (bounding volume hierarchy): an object is divided into boxes, those boxes into smaller boxes, and eventually into triangles. A ray is first tested against the boxes, and only once it hits a box does the test move on to what is inside it. The fundamental idea is that not all triangles get ray traced, only a certain number (a multitude) of boxes plus the few triangles inside the boxes that are hit. The RT core architecture basically has two fundamental blocks: one checks boxes, the other triangles. It works somewhat like a shader does for rasterization code.
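Here is a minimal CPU-side sketch of the box-then-triangle idea, assuming a tiny hand-built BVH with two leaf boxes. The RT core's actual hardware algorithms are not public, so the textbook slab test (boxes) and Möller-Trumbore test (triangles) stand in for its two fixed-function blocks; all names and the scene are illustrative. The counters show the point of the hierarchy: the triangle inside the box the ray misses is never tested.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]
Tri = Tuple[Vec, Vec, Vec]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def ray_box(orig: Vec, d: Vec, lo: Vec, hi: Vec) -> bool:
    """Box test (slab method): does the ray enter the axis-aligned box [lo, hi]?"""
    tmin, tmax = 0.0, float("inf")
    for a in range(3):
        if d[a] == 0.0:
            # Ray runs parallel to this pair of planes: it must start between them.
            if orig[a] < lo[a] or orig[a] > hi[a]:
                return False
            continue
        t1, t2 = (lo[a] - orig[a]) / d[a], (hi[a] - orig[a]) / d[a]
        tmin, tmax = max(tmin, min(t1, t2)), min(tmax, max(t1, t2))
    return tmin <= tmax

def ray_triangle(orig: Vec, d: Vec, tri: Tri, eps: float = 1e-9) -> Optional[float]:
    """Triangle test (Moller-Trumbore): distance t along the ray, or None on a miss."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray parallel to the triangle's plane
    inv = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None

@dataclass
class Node:
    lo: Vec
    hi: Vec
    children: List["Node"] = field(default_factory=list)
    triangles: List[Tri] = field(default_factory=list)

def trace(root: Node, orig: Vec, d: Vec):
    """Walk the BVH; returns (closest hit t or None, box tests, triangle tests)."""
    best, box_tests, tri_tests = None, 0, 0
    stack = [root]
    while stack:
        node = stack.pop()
        box_tests += 1
        if not ray_box(orig, d, node.lo, node.hi):
            continue  # missed the box, so nothing inside it is ever tested
        stack.extend(node.children)
        for tri in node.triangles:
            tri_tests += 1
            t = ray_triangle(orig, d, tri)
            if t is not None and (best is None or t < best):
                best = t
    return best, box_tests, tri_tests

if __name__ == "__main__":
    left = Node((-2, 0, -0.1), (-1, 1, 0.1), triangles=[((-2, 0, 0), (-1, 0, 0), (-2, 1, 0))])
    right = Node((1, 0, -0.1), (2, 1, 0.1), triangles=[((1, 0, 0), (2, 0, 0), (1, 1, 0))])
    root = Node((-2, 0, -0.1), (2, 1, 0.1), children=[left, right])
    print(trace(root, (1.25, 0.25, -5.0), (0.0, 0.0, 1.0)))  # (5.0, 3, 1)
```

Three box tests and a single triangle test find the hit at distance 5; a real scene has millions of triangles, which is where skipping whole boxes pays off.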

So you have shading, deep learning and ray tracing going on. How do you make that work together? To make an efficient model, NVIDIA is doing roughly 50-50 shading and ray tracing.

Advanced shading

Mesh shading allows NVIDIA to move work off the CPU: the CPU hands over a list of objects, and with a script (a shader program) that work is offloaded to the GPU and passed on into the pipeline. This enables optimizations such as using detail (fidelity) where you need it rather than rendering everything at full detail, in an effort to be more efficient.

Variable Rate Shading (VRS)

Motion adaptive shading (MAS).

Hardware-accelerated ray-traced audio

8K60 - DisplayPort Display Stream Compression (DSC) / native HDR, DP 1.4a ready.

VirtualLink - 4 lanes HBR3 support / USB 3.1 Gen 2 speed / 27 Watts power on a single connector.

Improved hardware video encoder and decoder: real-time HEVC 8K30 HDR with up to 25% bitrate savings, and up to 15% bitrate savings for H.264.
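The 8K60 item is easiest to understand with some arithmetic. The sketch below compares the raw bit rate of an 8K 60 Hz signal with what four DisplayPort HBR3 lanes can carry after 8b/10b channel coding. It assumes 24 bits per pixel and ignores blanking intervals, so the real requirement is somewhat higher than computed; the constant and function names are illustrative.

```python
# Assumptions: 24 bits per pixel (8-bit RGB) and no blanking overhead.
WIDTH, HEIGHT, REFRESH_HZ, BITS_PER_PIXEL = 7680, 4320, 60, 24

HBR3_GBPS_PER_LANE = 8.1      # DisplayPort HBR3 raw line rate per lane
LANES = 4
CODING_EFFICIENCY = 8 / 10    # the DP 1.4 main link uses 8b/10b coding

def video_gbps(width: int, height: int, hz: int, bpp: int) -> float:
    """Uncompressed active-pixel bit rate in Gbit/s."""
    return width * height * hz * bpp / 1e9

def dp14_payload_gbps() -> float:
    """Usable DP 1.4 link bandwidth (4 x HBR3) in Gbit/s after channel coding."""
    return HBR3_GBPS_PER_LANE * LANES * CODING_EFFICIENCY

if __name__ == "__main__":
    need = video_gbps(WIDTH, HEIGHT, REFRESH_HZ, BITS_PER_PIXEL)
    have = dp14_payload_gbps()
    print(f"8K60 needs ~{need:.1f} Gbit/s; DP 1.4 carries ~{have:.2f} Gbit/s")
    print(f"Minimum compression ratio: {need / have:.2f}:1")
```

Under these assumptions the signal needs roughly 47.8 Gbit/s against about 25.9 Gbit/s of link payload, so close to 2:1 compression is required, and that is the job Display Stream Compression takes on.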

NGX - Neural Graphics Architecture

Neural Graphics Architecture is the name for anything AI. For example, a path-traced image gets a denoiser applied that enhances image quality for the final frame.

You take a reference image and train the network to go from source A to result B (as the "what is deep learning" slide illustrates). That way you can create a high-quality image while producing fewer samples. That was one of the fundamental "aha" moments for NVIDIA, and it is what the Tensor cores are designed for. AI in games is the next step.
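Why fewer samples call for a denoiser can be shown with a toy Monte Carlo experiment. Each pixel of a flat test row is estimated from a few random samples, and the error shrinks only with the square root of the sample count. A simple box filter then averages neighbouring pixels. This is a crude, hypothetical stand-in for the trained denoiser NVIDIA describes: a flat row is the best case for a box filter, and real scenes have edges that a naive filter would blur, which is where a neural network is supposed to do better.

```python
import math
import random

TRUE_VALUE = 0.5  # each sample is uniform in [0, 1], so every pixel's true value is 0.5

def render_noisy(width: int, spp: int, rng: random.Random) -> list:
    """Monte Carlo estimate of a flat row of pixels using `spp` samples per pixel."""
    return [sum(rng.random() for _ in range(spp)) / spp for _ in range(width)]

def box_denoise(pixels: list, radius: int = 2) -> list:
    """Average every pixel with its neighbours (a naive stand-in for a neural denoiser)."""
    n = len(pixels)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(pixels[lo:hi]) / (hi - lo))
    return out

def rmse(pixels: list) -> float:
    """Root-mean-square error against the known true value."""
    return math.sqrt(sum((p - TRUE_VALUE) ** 2 for p in pixels) / len(pixels))

if __name__ == "__main__":
    rng = random.Random(42)
    for spp in (1, 4, 64):
        noisy = render_noisy(2000, spp, rng)
        print(f"{spp:>2} spp: raw RMSE {rmse(noisy):.3f}, "
              f"box-filtered RMSE {rmse(box_denoise(noisy)):.3f}")
```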

In a game, a nice idea would be NPCs whose AI learns from the players, adapts and tries to beat you, the player. That would make for intelligent enemies and allies. There are a lot of possibilities here; AI could also be used for cheat detection and for facial and character animation.

In a cat example, NVIDIA showed computational super-resolution through a neural network.

Turing is the start of the next 20 years of graphics

DLSS High-Quality Motion Image generation - Deep Learning Super Sampling

A frame rendered at normal resolution is output with very high-quality AA. According to NVIDIA, DLSS 2X delivers image quality comparable to 64x supersampled AA. This is done with deep learning and runs on the Tensor cores; the DLSS network is trained on supersampled reference images.
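For context on what "supersampled" means here, the sketch below estimates how much of a single pixel an edge covers, once with one sample and once with an 8x8 (64x) ordered grid of samples. This is ordinary supersampling, the kind of reference image DLSS is trained against, not DLSS itself; the edge and function names are made up for illustration.

```python
from typing import Callable

def coverage(inside: Callable[[float, float], bool], samples_per_axis: int) -> float:
    """Fraction of a unit pixel inside a shape, from an n x n ordered sample grid."""
    n = samples_per_axis
    hits = 0
    for i in range(n):
        for j in range(n):
            # One sample at the centre of each sub-cell of the pixel.
            x, y = (i + 0.5) / n, (j + 0.5) / n
            hits += inside(x, y)
    return hits / (n * n)

def below_edge(x: float, y: float) -> bool:
    """Hypothetical polygon edge crossing the pixel; the exact covered area is 0.4."""
    return y < 0.6 * x + 0.1

if __name__ == "__main__":
    print(f"1 sample:   {coverage(below_edge, 1):.3f}")  # aliased: all or nothing
    print(f"64 samples: {coverage(below_edge, 8):.3f}")  # close to the true 0.4
```

With one sample the pixel is simply in or out (0.0 here), which produces stair-stepping; 64 samples land close to the true 0.4 coverage. Doing that for a full 3840x2160 frame means roughly 531 million samples, which illustrates why 64x supersampling is not a realistic real-time mode.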

OC and SLI

NVAPI - NVIDIA is providing a new NVAPI that includes the NV Scanner API, which runs a test algorithm on an NVIDIA workload to find stable overclocks. The popular overclocking tools from all brands can make use of it.

It's been a long time coming, but NVIDIA is ready to announce their new consumer graphics cards next week. Looking at the past, they’ll start with the GeForce GTX or, as I now believe it will be called, the GeForce RTX 2080 series. In this weekend write-up I wanted to have a peek at what we’re bound to expect, as the actual GPU was already announced this week at Siggraph. There are a number of things we need to talk you through. First off, names and codenames. We’ve seen and heard it all over the past months. One guy on a forum yells Ampere, and all of a sudden everybody is gossiping about something called Ampere. Meanwhile, with GDDR6 memory on the rise and on the horizon, a viable alternative would have been a 12nm Pascal refresh with that new snazzy memory. It would be a cheaper route to pursue, as currently the competition really does not have an answer. Cheaper to fab, however, is not always better, and in this case I was already quite certain that Pascal (the current generation architecture from NVIDIA) is not compatible with GDDR6 in the memory interface.

Back in May I visited NVIDIA for a small event, and talking to them it already became apparent that a Pascal refresh was not likely. Literally, the comment from the NVIDIA representative was ‘well, you know that if we release something, you know we’re going to do it right’. Ergo, that comment pretty much sent the theory of a Pascal respin down the drain. But hey, you never know, right? There also was another theory: Volta with GDDR6, which seemed the more viable and logical solution. However, the dynamic changed big time this week. I now need to go back to February this year, when I posted a news item: Reuters mentioned a new codename that was not present on any of NVIDIA's roadmaps, Turing. That was a pivotal point really, but it also added more confusion. See, Turing is a name more related to and better fitted for AI products (the Turing test for artificial intelligence).

In case you had not noticed, look at this: Tesla, Kepler, Maxwell, Fermi, Pascal, Volta. Here is your main hint. Historically, NVIDIA has been naming their architectures after famous scientists, mathematicians and physicists. Turing, however, sounded more plausible than Ampere, who actually was a physicist.

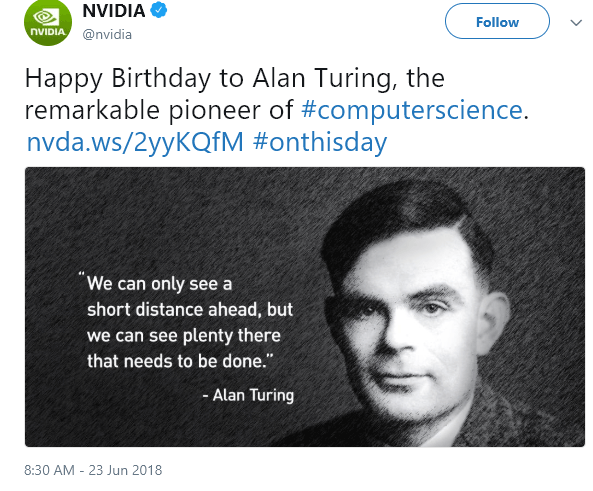

Meet Alan Turing

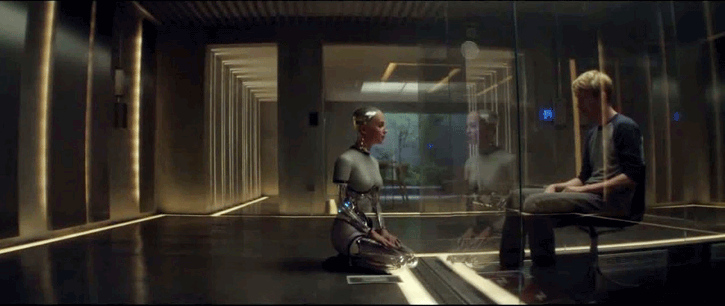

Alan Turing is famous for his hypothetical machine, which can simulate any computer algorithm no matter how complicated it is, and for the Turing test. That test is applied these days to judge whether a machine can exhibit behavior indistinguishable from that of a human, in other words whether an AI is, in fact, intelligent.

At this point I'd like to point you towards the beautiful movie Ex Machina; the entire movie could have been named The Turing Test. The idea was that a computer could be said to "think" if a human interrogator could not tell it apart, through conversation, from a human being. Turing proposed that test, my friends, in 1950. In the same paper, Turing suggested that rather than building a program to simulate the adult mind, it would be better to produce a simpler one to simulate a child's mind and then subject it to a course of education. A reversed form of the Turing test is actually widely used on the Internet: the CAPTCHA test is intended to determine whether the user is a human or a computer. During WWII, Turing also led Hut 8, the section responsible for German naval cryptanalysis. He devised a number of techniques for speeding up the breaking of German ciphers, including improvements to the pre-war Polish bombe method, an electromechanical machine that could find settings for the Enigma machine. He played a big role in cracking intercepted coded messages, which enabled the Allies to defeat the Nazis in many crucial engagements, including the Battle of the Atlantic.

Back to graphics processors though. Back in June, NVIDIA posted a tweet congratulating and celebrating the birth date of Alan Turing. That was the point where I realized that at least one of the new architectures would be called Turing. All the hints led up to Siggraph 2018, where last Monday NVIDIA announced new professional GPUs called Quadro RTX 8000, 6000 and 5000, and the GPU powering them is Turing.

Turing the new GPU

The graphics architecture called Turing is new. It’s a bit of Pascal (shader processors for gaming), a bit of Volta (Tensor cores for AI and deep learning) and more. Turing has it all: the fastest fully enabled GPU presented has 4608 shader processors paired with 576 Tensor cores. What’s new, however, is the addition of RT cores. Now I would love to call them RivaTuner cores (pardon the pun); however, these obviously are ray tracing cores, meaning that NVIDIA has now added core logic to speed up certain ray tracing processes. Why is that important? Well, if in games you can speed up certain techniques that normally take hours to render, you can achieve greater image quality.

Real-time Ray tracing (well Hybrid kinda .. )

With ray tracing, you basically mimic the behavior, look and feel of a real-life environment in a computer-generated 3D scene. Wood looks like wood, fur on animals looks like actual fur, glass refracts like glass, etc. But can ray tracing be applied in games? Short answer: yes and no, partially. As you might remember, Microsoft has released an extension to DirectX: DirectX Raytracing (DXR). NVIDIA, on their end, announced RTX on top of that, the combination of hardware and software that makes use of the DirectX Raytracing API. NVIDIA has dedicated hardware built into their GPUs to accelerate certain ray tracing features. In the first wave of launches you are going to see 20+ games adding support for the new RTX-enabled technology, and in games you can really notice it. How much of a performance effect RTX will have on games is something we'll look deeply into as well.

Rasterization has been the default for a long time now. Ray tracing offers the ability to trace rays using one and the same algorithm for complex, deep soft shadows, reflections and refractions. Combining rasterization and ray tracing is how NVIDIA moves forward from where they are today. The raster and compute phases are what NVIDIA has been working on for the last decade. Game developers now have a whole new suite of techniques available to create better-looking graphics.

In case you missed it, and as posted in our GeForce RTX announcement news, during the NVIDIA event the company showed a new ray tracing demo with Battlefield V, which showed impressive possibilities of the new technology. Check out the reflections of the flamethrower and fires on different surfaces, including the wet ground and the shiny car.

NVIDIA has been working on ray tracing for, I think, over a decade, which now in 2018 results in what they call “NVIDIA RTX Technology”. All of this ended up in an API, the announced Microsoft DirectX Raytracing API, and NVIDIA added a layer on top of that called RTX. It is a combination of software and hardware algorithms. NVIDIA will offer developers libraries that include options like area shadows, glossy reflections and ambient occlusion. However, to drive such technologies you need a properly capable GPU. Until now, Volta was the first and only GPU that could work with DirectX Raytracing and RTX. And here we land at the Turing announcement: it has unified shader processors and Tensor cores, but now also what are referred to as RT cores, not RivaTuner but ray tracing cores.

Check out the two videos below.

NVIDIA has been offering GameWorks libraries for RTX and is partnering up with Microsoft on this. RTX will find its way into game engines like Unreal Engine, Unity and Frostbite, and NVIDIA has also teamed up with game developers like EA, Remedy and 4A Games.

GeForce RTX 2080 and 2080 Ti – Real-time traced computer graphics?

Aaaaalrighty then ... RTX? Yes, NVIDIA has replaced GTX with RTX. We’ve now covered the basics and theoretical bits. The new graphics card series will advance on everything related to gaming performance, but also has Tensor cores on board as well as ray tracing cores. The Quadro announcements already revealed the actual GPU. Historically, NVIDIA has used the same GPU for their high-end consumer products. It could be that a number of shader processors or Tensor cores are cut or disabled, but the generic spec is what you see below.

Meet the TU102 GPU

Looking at the Turing GPU, there is a lot of stuff you can recognize, but there certainly have been fundamental block changes in the architecture: the SM (Streaming Multiprocessor) clusters have been split into separate, isolated core blocks, something the Volta GPU architecture also showed.

| GeForce | RTX 2080 Ti FE | RTX 2080 Ti | RTX 2080 FE | RTX 2080 | RTX 2070 FE | RTX 2070 |
|---|---|---|---|---|---|---|
| GPU | TU102 | TU102 | TU104 | TU104 | TU106 | TU106 |
| Shader cores | 4352 | 4352 | 2944 | 2944 | 2304 | 2304 |
| Base frequency | 1350 MHz | 1350 MHz | 1515 MHz | 1515 MHz | 1410 MHz | 1410 MHz |
| Boost frequency | 1635 MHz | 1545 MHz | 1800 MHz | 1710 MHz | 1710 MHz | 1620 MHz |
| Memory | 11GB GDDR6 | 11GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 |
| Memory data rate | 14 Gbps | 14 Gbps | 14 Gbps | 14 Gbps | 14 Gbps | 14 Gbps |
| Memory bus | 352-bit | 352-bit | 256-bit | 256-bit | 256-bit | 256-bit |
| Memory bandwidth | 616 GB/s | 616 GB/s | 448 GB/s | 448 GB/s | 448 GB/s | 448 GB/s |
| TDP | 260W | 250W | 225W | 215W | 185W | 175W |
| Power connector | 2x 8-pin | 2x 8-pin | 8+6-pin | 8+6-pin | 8-pin | 8-pin |
| NVLink | Yes | Yes | Yes | Yes | - | - |
| Performance (RTX-Ops) | 78T | | 60T | | 45T | |
| Ray tracing (Gigarays/s) | 10 | | 8 | | 6 | |
| Max GPU temperature (°C) | 89 | | 88 | | 89 | |
| Price | $1199 | $999 | $799 | $699 | $599 | $499 |

** The specification table has been updated with launch figures.
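The bus width and bandwidth rows of the table follow from simple arithmetic. Below is a small sketch, assuming (as the RTX 2080 Ti section explains) that each GDDR6 chip sits on its own 32-bit memory controller, and using the 14 Gbps data rate from the table; the chip counts assume 1 GB per chip, and the names are illustrative.

```python
BITS_PER_CHIP = 32  # each GDDR6 chip hangs off its own 32-bit memory controller

def bus_width_bits(num_chips: int) -> int:
    """Total memory bus width for a given number of GDDR6 chips."""
    return num_chips * BITS_PER_CHIP

def bandwidth_gb_s(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bus width in bytes times the per-pin data rate."""
    return bus_bits / 8 * gbps_per_pin

# Assumed chip counts: 1 GB per chip, so capacity in GB equals the chip count.
CARDS = {"RTX 2080 Ti": 11, "RTX 2080": 8, "RTX 2070": 8}

if __name__ == "__main__":
    for name, chips in CARDS.items():
        bus = bus_width_bits(chips)
        print(f"{name}: {chips} chips -> {bus}-bit -> {bandwidth_gb_s(bus, 14.0):.0f} GB/s")
```

This reproduces the 352-bit / 616 GB/s and 256-bit / 448 GB/s figures in the table.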

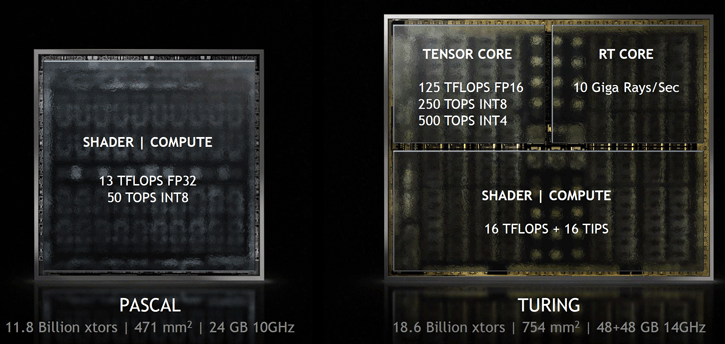

Turing, and allow me to name the GPU TU102, is massive: it counts 18.6 billion transistors on a 754 mm² die. In comparison, Pascal (GP102) had close to 12 billion transistors on a die size of 471 mm². Gamers will immediately look at the shader processors. The Quadro RTX 8000 has 4608 of them enabled, and since everything with bits comes in multiples of eight, going by the GPU die photos Turing has 72 SMs (streaming multiprocessors), each holding 64 cores, for 4608 shader processors. We have yet to learn which blocks are used for what purpose. A fully enabled GPU has 576 Tensor cores (but we're not sure whether these are enabled on the consumer gaming GPUs), 36 geometry units and 96 ROP units. We expect this GPU to be fabbed on an optimized 12nm TSMC FinFET+ node; the frequencies at this time are unannounced.
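The SM arithmetic above extends to the cut-down GeForce parts. Below is a sketch; the per-SM ratios come from the text (4608 / 72 = 64 shader cores, 576 / 72 = 8 Tensor cores), while the SM counts for the GeForce cards and one RT core per SM are our assumptions, chosen to match the shader counts in the specification table.

```python
CUDA_CORES_PER_SM = 64    # 4608 / 72, from the text
TENSOR_CORES_PER_SM = 8   # 576 / 72, from the text
RT_CORES_PER_SM = 1       # assumption: one RT core per SM

# Assumed SM counts, picked to match the shader counts in the spec table.
SM_COUNTS = {
    "TU102 full (Quadro RTX 8000)": 72,
    "GeForce RTX 2080 Ti": 68,
    "GeForce RTX 2080": 46,
    "GeForce RTX 2070": 36,
}

def unit_counts(sms: int) -> dict:
    """Shader, Tensor and RT core totals for a given number of enabled SMs."""
    return {
        "shader": sms * CUDA_CORES_PER_SM,
        "tensor": sms * TENSOR_CORES_PER_SM,
        "rt": sms * RT_CORES_PER_SM,
    }

if __name__ == "__main__":
    for name, sms in SM_COUNTS.items():
        c = unit_counts(sms)
        print(f"{name}: {sms} SMs -> {c['shader']} shaders, "
              f"{c['tensor']} Tensor cores, {c['rt']} RT cores")
```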

Graphics memory - GDDR6

Another difference between Volta and Turing is, of course, graphics memory. HBM2 is a bust for consumer products, at least it seems and feels that way. The graphics industry at this time is clearly favoring the new GDDR6. It’s easier and cheaper to fab and add, and at this time can even exceed HBM2 in performance. Turing will be paired with 14 Gbps GDDR6. The Quadro cards come with multiples of eight (16/24/48GB), ergo we expect the GeForce RTX 2080 to get 8GB of graphics memory. It could get 16GB, but that just doesn’t make much sense at this time in terms of cost versus benefit. By the way, DRAM manufacturers expect to reach 18 Gbps and faster with GDDR6.

NVLINK

So is SLI back? Well, SLI has never been gone, but over the years it got limited to two cards, and driver-optimization-wise things have not been optimal. If you have seen the leaked PCB shots, you will have noticed a new sort of SLI finger; that is actually an NVLINK interface, and it's hot stuff. In theory you can combine two or more graphics cards, and the brilliance is that the GPUs can share and use each other's memory, i.e. direct memory access between cards. To what extent this will work out for gaming, we have yet to learn. Also, we cannot wait to hear what the NVLINK bridges are going to cost, of course. But if you look at the upper block diagram, that's a 100 GB/sec interface, which is huge in terms of interconnects. We can't compare it 1:1 like that, but SLI and SLI HB passed 1 and 2 GB/sec respectively. A single PCIe Gen 3 lane offers roughly 1 GB/sec per direction, so an x16 slot offers about 16 GB/sec, or 32 GB/sec full duplex. That makes one NVLINK between two cards roughly three times faster than a bidirectional PCIe Gen 3 x16 slot.
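The PCIe side of that comparison can be made a bit more precise. PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding, which works out to just under 1 GB/s per lane per direction. The sketch below ignores packet protocol overhead, so real-world throughput is lower still, and it treats the 100 GB/sec NVLINK figure from the text as the combined two-way bandwidth; the names are illustrative.

```python
PCIE3_GT_PER_S = 8.0          # giga-transfers per second per lane
PCIE3_CODING = 128 / 130      # 128b/130b line coding efficiency

NVLINK_2080TI_GB_S = 100.0    # from the text; treated as both directions combined

def pcie3_gb_s(lanes: int) -> float:
    """Approximate PCIe 3.0 bandwidth in GB/s per direction (protocol overhead ignored)."""
    return PCIE3_GT_PER_S * PCIE3_CODING * lanes / 8

if __name__ == "__main__":
    x16_duplex = 2 * pcie3_gb_s(16)
    print(f"x1: {pcie3_gb_s(1):.2f} GB/s per direction")
    print(f"x16: {pcie3_gb_s(16):.1f} GB/s per direction, {x16_duplex:.1f} GB/s duplex")
    print(f"NVLINK (2080 Ti) vs x16 duplex: {NVLINK_2080TI_GB_S / x16_duplex:.1f}x")
```

An x16 slot lands at about 31.5 GB/s duplex, so 100 GB/s is a little over three times that, in line with the estimate above.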

NVIDIA will support 2-way NVLINK. Currently, only the GeForce RTX 2080 and 2080 Ti get NVLINK support and have the physical connector on the cards. The RTX 2070, and presumably future lower-positioned models, will not get this super-fast multi-GPU interconnect.

50 GB/sec on the RTX 2080.

100 GB/sec on the RTX 2080 Ti

3-slot and 4-slot bridge versions, 2-way maximum

GeForce RTX 2070

NVIDIA is also announcing the 499 USD GeForce RTX 2070. This 175 Watt card (185 Watt for the Founders Edition) will have 2304 shader processors. It has a 1410 MHz base clock and a 1620 MHz boost clock for the standard version (1710 MHz for the Founders Edition). The card has 8GB of GDDR6 memory on a 256-bit wide bus (448 GB/s); the price is 499 USD, and 599 USD for the Founders Edition. We'll add more detail once it comes in.

GeForce RTX 2080

You will see two products launched in the first wave. The first is the GeForce RTX 2080. This graphics card will not have the full shader count of the TU102; it will have 2944 shader processors active, based on the TU104 GPU. For clock frequencies, please look at the table above for a good indication. Mind you that these will differ here and there, as AIB partners obviously have different factory-tweaked products. The GDDR6 memory will be tied to a 256-bit bus and, depending on the clock frequency, we are looking at 448 GB/sec (699 USD).

GeForce RTX 2080 Ti

The flagship is the GeForce RTX 2080 Ti with TU102. Expect 4352 active shader processors, which is a substantial step up from the 3584 of the GTX 1080 Ti. The product gets 11GB of GDDR6. With 11 GB you get a 352-bit wide memory bus, as each chip is tied to a 32-bit memory controller: 11 x 32 = 352-bit. For clock frequencies, again please look at the table above and keep some margin in mind for the board partner clock frequencies (999 USD).

Cooling RTX 2080

We've always had our comments about the blower-style coolers from NVIDIA: they allowed a thermal threshold of 80 degrees, and all cards reached it and in return throttled down. The new dual-fan (13 blades per fan) cooler design should be much better in that respect. As long as the cards do not hit a too-high temperature target, they will not throttle down and can in fact even be clocked higher. NVIDIA mentioned that the new cooler will be much more silent as well, which is something I've complained about a lot with last-gen coolers. The new claim is that the cards produce just a fifth of the noise of the last-gen cooler design; NVIDIA mentions 8 dBA lower noise, and that's a good value alright. We do wonder if NVIDIA isn't pushing too hard, as they are starting to really compete with their own AIB partners this way.
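Decibels are logarithmic, so "a fifth" and "8 dBA lower" are worth checking against each other. The sketch below converts between level differences and sound power ratios; it says nothing about how loud the card actually is, only how the two quoted figures relate, and the function names are illustrative.

```python
import math

def db_to_power_ratio(db: float) -> float:
    """Sound power ratio that corresponds to a level difference of `db` decibels."""
    return 10 ** (db / 10)

def power_ratio_to_db(ratio: float) -> float:
    """Level difference in decibels for a given sound power ratio."""
    return 10 * math.log10(ratio)

if __name__ == "__main__":
    print(f"8 dB quieter  -> {db_to_power_ratio(8):.1f}x less sound power")
    print(f"5x less power -> {power_ratio_to_db(5):.1f} dB quieter")
```

An 8 dB reduction corresponds to roughly a 6.3x drop in sound power, while a 5x drop works out to about 7 dB, so the two claims are in the same ballpark.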

In other news, in the same blog post NVIDIA also posted a PCB shot and a photo of the new vapor chamber that covers the TU104 GPU (RTX 2080). The GeForce RTX 2080 uses 225 Watts of power out of the box and tops out at around 280 Watts for enthusiasts chasing overclocking performance.

The power delivery system has been rebuilt for GeForce RTX Founders Edition graphics cards, starting with the all-new 13-phase iMON DrMOS power supply, which should offer cleaner power delivery.

Of particular note here is a new ability to switch off power delivery phases dynamically. Keeping all phases active at low load (idle) wastes power, so shedding phases at low workloads reduces power consumption and increases power efficiency. So if you're not doing anything demanding with the graphics card, the net result is fewer active power phases.

Factory overclocked
