4:2:2 Color compression - Nits Explained

Chroma subsampling - Color compression

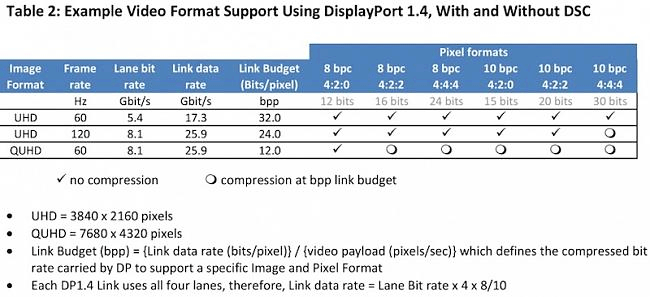

Right, the elephant in the room. We're going to spend a full and separate chapter on this topic, as it recently became apparent that in its highest refresh-rate mode (144 Hz), the monitor applies color compression. Let me say this upfront: in 10-bit HDR mode, this screen uses color compression at anything above 98 Hz. This is not the fault of ASUS, AOC or NVIDIA; the main limitation is the signal bandwidth of DisplayPort 1.4. DisplayPort 1.4 has too little bandwidth available to drive 4K, 144 Hz and HDR at the same time, so it needs some form of compression. NVIDIA decided to work around the issue by applying 4:2:2 chroma subsampling.

Very bluntly put, chroma subsampling is color compression. While brightness (luma) information remains intact, the color (chroma) information is stored at half the horizontal resolution: 1920 x 2160 pixels instead of 3840 x 2160. All is good up to 98 Hz; beyond that, the DisplayPort connection runs into bandwidth limits and 4:2:2 chroma subsampling kicks in.

Is 4:2:2 chroma subsampling a bad thing?

That I can only answer with both yes and no. This form of color compression has been used for a long time already; in fact, if you have ever watched a Blu-ray movie, it was color compressed. Your eyes will be hard-pressed to see the effect of color compression in movies and games. With very fine, thin lines and fonts, however, you will notice it. For gaming, you'll probably never notice a difference (unless you know exactly what to look for in very specific scenes). On your Windows desktop, however, that's something else, and let me show you an example of that. Have a peek at a zoomed-in Windows desktop...

Above you can see a good example of the problem at hand. Compare the two photos left and right and focus on the icon text: in the right photo, the N in Heaven and the letters N, H and M in Benchmark are discolored. That is the effect of 4:2:2 chroma subsampling. The photo is blown up, but you can see this rather clearly with your own eyes. If you hate it, you'll need to drop back to 120 Hz or, preferably, 98 Hz to bypass the issue. Let me reiterate: you'll only notice this effect on the Windows desktop with thin fonts. In gaming you will not notice the effect; at least I could not see it. For gaming, the panel is visually impressive even with 4:2:2 chroma, and the Quantum Dots do their work alright, with very nice reds and blues that look deep, dynamic and rich. But I want to make very clear that this 2700 Euro / 2000 USD monitor makes compromises if you use the full bandwidth of its DisplayPort 1.4 connector to drive 4K above 98 Hz.
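Why thin, colored text suffers while large areas look fine follows directly from how 4:2:2 works. The sketch below is a simplified model, assuming full-range BT.709 YCbCr conversion and a plain average of each horizontal pixel pair's chroma; real GPUs and monitors may use other matrices and filters. The function names are hypothetical. It pushes one row containing a 1-pixel-wide red stroke on a white background through 4:2:2 and back.

```python
# Simplified 4:2:2 round trip, assuming full-range BT.709 YCbCr with values in 0.0-1.0
KR, KB = 0.2126, 0.0722        # BT.709 luma weights for red and blue
KG = 1.0 - KR - KB             # luma weight for green

def rgb_to_ycbcr(r, g, b):
    y = KR * r + KG * g + KB * b           # luma (brightness), kept per pixel
    cb = (b - y) / (2.0 * (1.0 - KB))      # blue-difference chroma
    cr = (r - y) / (2.0 * (1.0 - KR))      # red-difference chroma
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr):
    r = y + 2.0 * (1.0 - KR) * cr
    b = y + 2.0 * (1.0 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    # clamp to the displayable 0.0-1.0 range
    return tuple(min(1.0, max(0.0, c)) for c in (r, g, b))

def roundtrip_422(row):
    """Keep luma for every pixel, but share one averaged Cb/Cr per horizontal pair."""
    ycc = [rgb_to_ycbcr(*px) for px in row]
    out = []
    for i in range(0, len(ycc), 2):
        pair = ycc[i:i + 2]
        cb = sum(p[1] for p in pair) / len(pair)
        cr = sum(p[2] for p in pair) / len(pair)
        out.extend(ycbcr_to_rgb(p[0], cb, cr) for p in pair)
    return out

WHITE, RED = (1.0, 1.0, 1.0), (1.0, 0.0, 0.0)
row = [WHITE, WHITE, RED, WHITE]   # a 1-pixel-wide red stroke on white

if __name__ == "__main__":
    for before, after in zip(row, roundtrip_422(row)):
        print(before, "->", tuple(round(c, 2) for c in after))
```

In this model the first white pair comes back untouched, but the red pixel returns as a dull red of roughly (0.61, 0.11, 0.11) and its white neighbor picks up a pink tint of roughly (1.0, 0.89, 0.89). Where both pixels of a pair share the same color, averaging changes nothing, which is why games and movies hide the effect so well.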

I refuse to use 4:2:2, what now?

NVIDIA has made a few options available in its latest drivers (please use GeForce 398.82 WHQL or newer). These drivers let you select different refresh rates, and each refresh rate enforces a specific color output mode (you can read out the active mode in the information section of the monitor OSD):

| Refresh rate | Color output | Comment |
|---|---|---|
| Up to 98 Hz | YUV444 10-bit | With G-SYNC much better than 60 Hz |
| 120 Hz | YUV444 8-bit | Gaming without 10-bit HDR |
| 144 Hz | YUV422 10-bit | Gaming with 10-bit HDR + color compression |
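The split in the table can be reproduced with back-of-the-envelope arithmetic. The sketch below is a simplification, assuming DisplayPort 1.4 at HBR3 (4 lanes × 8.1 Gbit/s with 8b/10b encoding, leaving 25.92 Gbit/s for pixel data) and counting only visible pixels; real video timings add blanking intervals on top, so the true requirement is somewhat higher. The function names are hypothetical, purely for illustration.

```python
# Assumed link budget: DisplayPort 1.4 HBR3, 4 lanes x 8.1 Gbit/s, 8b/10b coding (80% efficient)
DP14_PAYLOAD_GBPS = 4 * 8.1 * 0.8  # about 25.92 Gbit/s

# Average samples per pixel: 4:4:4 sends Y, Cb and Cr for every pixel;
# 4:2:2 sends Y per pixel plus one Cb/Cr pair shared by two pixels
SAMPLES_PER_PIXEL = {"444": 3.0, "422": 2.0, "420": 1.5}

def data_rate_gbps(width, height, refresh_hz, bits_per_sample, chroma):
    """Active-pixel data rate in Gbit/s (blanking intervals ignored)."""
    bits_per_pixel = bits_per_sample * SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_pixel / 1e9

def fits_dp14(refresh_hz, bits_per_sample, chroma, width=3840, height=2160):
    """True if the active-pixel data rate stays within the assumed DP 1.4 payload."""
    rate = data_rate_gbps(width, height, refresh_hz, bits_per_sample, chroma)
    return rate <= DP14_PAYLOAD_GBPS

if __name__ == "__main__":
    modes = [(98, 10, "444"), (120, 8, "444"), (120, 10, "444"),
             (144, 10, "444"), (144, 10, "422")]
    for hz, bits, chroma in modes:
        rate = data_rate_gbps(3840, 2160, hz, bits, chroma)
        verdict = "fits" if fits_dp14(hz, bits, chroma) else "too much"
        print(f"{hz:>3} Hz {chroma} {bits:>2}-bit: {rate:5.2f} Gbit/s -> {verdict}")
```

By this estimate, 98 Hz at 4:4:4 10-bit needs about 24.4 Gbit/s and 120 Hz at 4:4:4 8-bit about 23.9 Gbit/s, both under the 25.92 Gbit/s budget, whereas 144 Hz at 4:4:4 10-bit would need about 35.8 Gbit/s. Dropping to 4:2:2 at 144 Hz cuts the 10-bit stream to about 23.9 Gbit/s, which fits. The small margin left at 98 Hz once blanking is added is plausibly why that is the cut-off for full 10-bit color.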

And there you have it:

- You can game at 144 Hz / 3840x2160 / HDR10, but with YUV 4:2:2 chroma subsampling enabled.

- At 120 Hz you will not have any color compression, but the output drops to 8-bit and runs in SDR mode.

- Up to and including 98 Hz, all is peachy perfect at YUV444 10-bit.

How much you are, or will be, bothered by the compromise that NVIDIA had to enforce due to the DisplayPort limitation is up to you. I'll say it once more: in-game you will be hard-pressed to see YUV422, so as a gamer this might not be an issue. On the Windows desktop, however, it is a totally different story, and to me it's unacceptable.

The easy way out is configuring your panel at 98 Hz; that solves all problems. But you are paying huge amounts of money for a 144 Hz screen, so for many gamers that is not the way it's meant to be played.

HDR10 and nits

Better pixels, a wider color space and more contrast will result in more interesting content on that screen of yours. FreeSync 2 and the new G-Sync enabled monitors have HDR10 support built in; any display panel carrying the label is required to offer full 10 bpc (bits per color) support. High dynamic range (HDR) reproduces a greater dynamic range of luminosity than is possible with standard digital imaging. We measure luminance in nits, and the number of nits for UHD screens and monitors keeps going up. One nit equals one candela per square meter (cd/m²), and a candle flame emits roughly one candela. For reference, the sun measures about 1,600,000,000 nits, typical objects sit at 1 to 250 nits, current PC displays offer 1 to 250 nits, and excellent non-HDR HDTVs offer 350 to 400 nits. An HDR OLED screen is capable of 500 to maybe 700 nits for the best models, and this is where it gets more important: HDR-enabled screens are moving towards 1000 nits with the latest LCD technologies. HDR allows these high nit values to actually be used. HDR started to be implemented for PC gaming back in 2016, while Hollywood of course already has end-to-end content ready. As consumers start to demand higher-quality monitors, HDR technology is emerging to set an excitingly high bar for overall display quality.

Good HDR capable panels are characterized by:

- A peak luminance of 600 to 1200 cd/m² (nits); the industry goal is to reach 1000 to 2000 cd/m²

- Contrast ratios that closely mirror human visual sensitivity to contrast (SMPTE ST 2084)

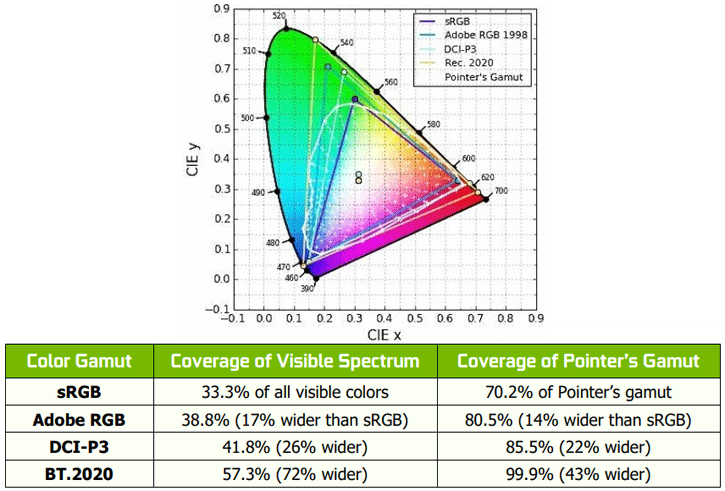

- A DCI-P3 and/or Rec. 2020 color gamut, with over 1 billion colors at 10 bits per color channel (1024³ ≈ 1.07 billion)
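The SMPTE ST 2084 curve in the list is the "perceptual quantizer" (PQ) that maps a 10-bit signal onto absolute brightness up to 10,000 nits, spending more code values on dark tones where the eye is most sensitive. A minimal sketch of the curve and its inverse, using the constants published in ST 2084; the helper names are hypothetical, and the 10-bit conversion assumes full-range code values (video often uses a limited 64 to 940 range, which this ignores).

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0        # PQ is defined up to 10,000 cd/m2

def pq_to_nits(signal):
    """EOTF: normalized PQ signal (0.0-1.0) -> absolute luminance in nits."""
    n = signal ** (1 / M2)
    return PEAK_NITS * (max(n - C1, 0.0) / (C2 - C3 * n)) ** (1 / M1)

def nits_to_pq(nits):
    """Inverse EOTF: luminance in nits -> normalized PQ signal (0.0-1.0)."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2

def nits_to_10bit_code(nits):
    """Full-range 10-bit code value (0-1023); full range is an assumption here."""
    return round(nits_to_pq(nits) * 1023)

if __name__ == "__main__":
    for nits in (0.1, 100, 400, 1000, 4000, 10000):
        pq = nits_to_pq(nits)
        print(f"{nits:>7} nits -> PQ {pq:.4f} -> 10-bit code {nits_to_10bit_code(nits)}")
```

With these constants, 100 nits lands at a PQ signal of about 0.51 and 1000 nits at about 0.75, so roughly half of the code range is spent below typical SDR brightness levels.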

HDR video and gaming bring more vibrant colors, more detail through higher contrast, and a wider luminosity range through higher brightness. You will obviously need a monitor that supports HDR as well as a game title that supports it. HDR10 is an open standard supported by a wide variety of companies, including TV manufacturers such as LG, Samsung, Sharp, Sony and Vizio, as well as Microsoft and Sony Interactive Entertainment, which support HDR10 on their Xbox One and PlayStation 4 video game console platforms (on Xbox One, only the S and X models). Dolby Vision is a competing HDR format that can optionally be supported on Ultra HD Blu-ray discs and by streaming services. As a technology, Dolby Vision allows a color depth of up to 12 bits and up to 10,000 nits of brightness, and supports the ITU-R Rec. 2020 color space and the SMPTE ST 2084 transfer curve. Ultra HD (UHD) TVs that support Dolby Vision include models from LG, TCL and Vizio, although their displays are only capable of 10-bit color and 800 to 1000 nits of luminance.

A monitor's color gamut is the maximum range of colors it can reproduce, generally expressed as the percentage coverage of a defined standard like sRGB, Adobe RGB, DCI-P3 or BT.2020. Each of these specifies a “color space,” or a portion of the visible spectrum, that delivers a consistent viewing experience between different imaging devices like monitors, televisions and cameras. HDR10 requires displays to cover at least 90% of the DCI-P3 color gamut (which lies almost entirely within the currently unachievable BT.2020 color gamut).
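Gamut coverage percentages can be approximated by comparing the triangles that each standard's red, green and blue primaries span on the CIE 1931 xy chromaticity diagram. The sketch below uses the published primaries of sRGB/Rec. 709, DCI-P3 and Rec. 2020, clips one triangle against the other (Sutherland-Hodgman) and compares areas with the shoelace formula. The helper names are hypothetical, and vendors sometimes quote coverage in the CIE 1976 u'v' diagram instead, which gives different numbers.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries
GAMUTS = {
    "sRGB / Rec. 709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}

def signed_area(poly):
    """Shoelace formula; positive when the vertices run counter-clockwise."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return s / 2.0

def clip(subject, clipper):
    """Sutherland-Hodgman: the part of `subject` inside the convex polygon `clipper`."""
    if signed_area(clipper) < 0:
        clipper = clipper[::-1]  # the inside test below expects counter-clockwise order

    def inside(p, a, b):
        # p lies on the left of (or on) the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p, q, a, b):
        # point where segment p -> q crosses the line through a and b
        (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p, q, a, b
        den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
        t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / den
        return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))

    output = list(subject)
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        polygon, output = output, []
        if not polygon:
            break
        prev = polygon[-1]
        for cur in polygon:
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    output.append(intersect(prev, cur, a, b))
                output.append(cur)
            elif inside(prev, a, b):
                output.append(intersect(prev, cur, a, b))
            prev = cur
    return output

def coverage(gamut, reference):
    """Fraction of `reference`'s xy area that `gamut` covers."""
    overlap = clip(GAMUTS[gamut], GAMUTS[reference])
    return abs(signed_area(overlap)) / abs(signed_area(GAMUTS[reference]))

if __name__ == "__main__":
    for name in ("sRGB / Rec. 709", "DCI-P3"):
        print(f"{name} covers {coverage(name, 'Rec. 2020'):.1%} of Rec. 2020 (xy area)")
```

On this measure sRGB covers roughly 53% and DCI-P3 roughly 72% of Rec. 2020. Clipping rather than dividing raw triangle areas keeps the result honest where gamuts do not nest perfectly, such as the DCI-P3 red primary sitting a hair outside the Rec. 2020 triangle.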

Another way to interpret color gamuts is by their coverage of Pointer’s gamut, a collection of all diffuse colors found in nature. Expanding a color space to include or extend beyond Pointer’s gamut allows for richer and more natural imaging, as human vision can perceive many artificial colors beyond Pointer’s gamut that are commonly found in manufactured goods like automobile paints, food dyes, fashionable clothing and Coca-Cola’s signature red.

Think big, and think a lot of bandwidth. Monitor resolutions keep growing, and one problem with that is that the first 8K monitors needed multiple HDMI and/or DisplayPort connectors to get a functional display. Alright, we've got the basics covered, let's move onwards into the review.