
PCI-Express

PCI Express is a new I/O interconnect standard that companies like NVIDIA, ATI and many others will deploy in future graphics solutions. Basically, it's going to replace the traditional AGP bus within the next year or two. NVIDIA won't be releasing GPUs with native PCI Express support initially, but will rely on bridged solutions to handle the translation between the multiple serial PCI Express lanes and the card's native AGP interface.

NVIDIA calls its bridge chip the HSI (High Speed Interconnect). With up to 4 GB/s of bandwidth in each direction and 8 GB/s concurrent (both directions combined), users have plenty of room for high-speed graphics output. PCI Express significantly increases bandwidth between the central processing unit (CPU) and the graphics processing unit (GPU). We are not yet 100% sure whether the 6600 series uses the HSI or is a native solution; the AGP version, though, will be bridged. The differences between the two approaches (NVIDIA HSI versus ATI native) can be discussed and argued at length. As far as I'm concerned it just doesn't matter; as long as it works okay, who really cares?
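Where do the 4 GB/s and 8 GB/s figures come from? Here's a quick back-of-the-envelope sketch, assuming first-generation PCI Express signalling (2.5 GT/s per lane, 8b/10b encoding), a x16 slot and 1 GB = 10^9 bytes. The function and constant names are just for illustration.

```python
# Back-of-the-envelope PCI Express 1.x bandwidth (assumed signalling parameters).
LANE_RATE_T_PER_S = 2.5e9      # 2.5 GT/s: line bits per second per lane, per direction
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b encoding: 8 payload bits per 10 line bits


def pcie1_bandwidth_gb_s(lanes: int) -> tuple[float, float]:
    """Return (per-direction, both-directions) bandwidth in GB/s, with 1 GB = 1e9 bytes."""
    bytes_per_lane = LANE_RATE_T_PER_S * ENCODING_EFFICIENCY / 8  # 250 MB/s per lane, per direction
    one_way = bytes_per_lane * lanes / 1e9
    return one_way, 2 * one_way


one_way, both = pcie1_bandwidth_gb_s(16)
print(f"x16: {one_way:.1f} GB/s each way, {both:.1f} GB/s concurrent")
# prints: x16: 4.0 GB/s each way, 8.0 GB/s concurrent
```

The "concurrent" number only applies when data flows both ways at once, which is why it is exactly twice the one-way figure.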

PCI-Express mainboard


Do you remember the good old days, years ago... 3dfx's Voodoo2 in SLI mode (Scan Line Interleave)? No? Basically, you took two graphics cards and each card rendered half of the image's scan lines (alternating lines), which resulted in up to double the performance of a single board.
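A minimal sketch of the scan-line split: line y goes to card y % 2, so one board draws the even lines and the other the odd ones. Which card takes the even lines, and the function name, are assumptions for illustration; the real Voodoo2 setup also had to merge both outputs into a single video signal, which this ignores.

```python
def assign_scanlines(height: int, num_cards: int = 2) -> dict[int, list[int]]:
    """Map each card index to the scan lines it renders (line y goes to card y % num_cards)."""
    assignment: dict[int, list[int]] = {card: [] for card in range(num_cards)}
    for y in range(height):
        assignment[y % num_cards].append(y)
    return assignment


print(assign_scanlines(8))
# prints: {0: [0, 2, 4, 6], 1: [1, 3, 5, 7]}
```

Each card only has to fill half the lines, which is where the "up to double" performance comes from, provided neither card is starved for geometry or texture data.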

When we look back through the years, we can see that not one manufacturer has come up with a similar concept (set aside the XGI Volari Dual Core products), for one simple reason: AGP. There is no dual AGP solution at hand, and PCI does not have the bandwidth to handle modern graphics accelerators.
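To put "PCI does not have the bandwidth" in numbers, here's a rough sketch of theoretical peak bandwidth as bus width times clock times transfers per clock. It assumes classic 32-bit/33 MHz PCI and AGP 8x (32-bit, 66 MHz base clock, eight transfers per clock); real-world throughput is lower, and PCI's figure is shared by every device on the bus. The helper name is hypothetical.

```python
def peak_bandwidth_mb_s(bus_width_bits: int, clock_mhz: float, transfers_per_clock: int = 1) -> float:
    """Theoretical peak in MB/s (1 MB = 1e6 bytes): bytes per transfer x clock x transfers per clock."""
    return bus_width_bits / 8 * clock_mhz * transfers_per_clock


pci = peak_bandwidth_mb_s(32, 33.33)       # classic 32-bit / 33 MHz PCI, shared by all PCI devices
agp8x = peak_bandwidth_mb_s(32, 66.67, 8)  # AGP 8x: 66 MHz base clock, 8 transfers per clock
print(f"PCI: {pci:.0f} MB/s, AGP 8x: {agp8x:.0f} MB/s (~{agp8x / pci:.0f}x more)")
# prints: PCI: 133 MB/s, AGP 8x: 2133 MB/s (~16x more)
```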

Now that PCI-Express is here, things have changed for the better. Motherboards featuring Intel's Tumwater chipset will have dual PCI-E x16 slots, making dual graphics accelerators a possibility again. NVIDIA steps up to the plate today with the re-introduction of the SLI concept on the GeForce 6800 and 6600 series. The name returns (now standing for Scalable Link Interface), with a different approach built on the same principles that made Voodoo2 SLI a big success. As stated, the GT version is of course slightly faster, but here's an interesting fact: it will also be SLI compatible. Well, you might as well buy a high-end 6800 in that case, but still... I wouldn't mind a 4-monitor desktop, to be honest :)

This is what the GeForce 6600 GT would look like in an SLI configuration. I'm curious how popular this is going to become.

GeForce 6600 GT cards come with 500 MHz core and memory clocks and a 128-bit memory bus (128 MB of GDDR3), and will cost $229/EUR229. The GeForce 6600 also comes with a 128-bit bus (128 MB of GDDR), will cost $150, and has a much lower 300 MHz core clock. Its memory clock, however, is left to the board manufacturer.
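Peak memory bandwidth follows directly from the bus width and the memory clock. A small sketch, assuming DDR-style memory with two transfers per clock and 1 GB = 10^9 bytes; the helper name is hypothetical.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, memory_clock_mhz: float, transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 1e9 bytes); DDR/GDDR3 moves data twice per clock."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * memory_clock_mhz * 1e6 * transfers_per_clock / 1e9


print(memory_bandwidth_gb_s(128, 500))  # GeForce 6600 GT: 128-bit bus, 500 MHz (1000 MHz effective)
# prints: 16.0
```

For the plain 6600, plug in whatever memory clock the board partner ships, since that part is up to the manufacturer.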

When we take a closer look, we can see that the 6600 GT indeed uses a 500 MHz core and 500 MHz (2x, so 1000 MHz effective) memory, with '8' pixel pipelines and 3 vertex processors. The 6600 series can write only four color pixels per clock and has a fragment crossbar. The NV43 does appear to have eight pixel shader/texture units, so it's neither an "8 x 1" nor a "4 x 1" design. It's more of a hybrid, but the fragment crossbar ensures all four of the chip's ROPs are well utilized. As the benchmarks will show, this works quite well.
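A rough sketch of what "eight shader/texture units but four ROPs" means for theoretical throughput at the GT's 500 MHz. It assumes each shader/texture unit delivers one texture lookup per clock and each ROP writes one color pixel per clock; these are upper bounds, not measured numbers, and the function name is hypothetical.

```python
def peak_rates(core_clock_mhz: int, shader_texture_units: int, rops: int) -> tuple[int, int]:
    """Return theoretical (Mtexels/s, Mpixels/s): units x clock and ROPs x clock."""
    return shader_texture_units * core_clock_mhz, rops * core_clock_mhz


texel_rate, pixel_rate = peak_rates(500, 8, 4)  # 6600 GT: 500 MHz core, 8 units, 4 ROPs
print(f"{texel_rate} Mtexels/s texturing, {pixel_rate} Mpixels/s color writes")
# prints: 4000 Mtexels/s texturing, 2000 Mpixels/s color writes
```

Since most modern shaders spend several clocks per pixel on math and texturing, the four ROPs rarely become the bottleneck, which is the reasoning behind the crossbar design.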

Display adapter information

---------------------------------------------------

Description : NVIDIA GeForce 6600 GT

Vendor ID : 10de (NVIDIA)

Device ID : 0140

Location : bus 1, device 0, function 0

Bus type : PCI

---------------------------------------------------

NVIDIA specific display adapter information

---------------------------------------------------

Graphics core : NV43 revision A2 (8x1,3vp)

Hardwired ID : 0140 (ROM strapped to 0140)

Memory bus : 128-bit

Memory type : DDR (RAM configuration 07)

Memory amount : 131072KB

Core clock : 501.428MHz

Memory clock : 501.188MHz (1002.375MHz effecti...

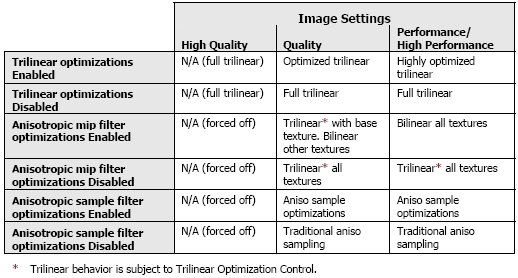

Legit Driver Optimizations

In recent builds of its ForceWare drivers, NVIDIA introduced a selectable optimization. Recent drivers, including the drivers supplied with this 6600 GT, offer even more optimization options. Let's have a look at them:

These are generic, not application-specific, optimizations. The image quality loss is hardly noticeable to the naked eye, yet they offer you a slight performance increase. The optimizations are user-selectable from the graphics card's control panel, so you decide whether you want them enabled or not; note that they are now enabled by default. We here at Guru3D.com currently run our default tests with trilinear optimizations enabled. We did, however, also test with all optimizations on and off. More on that in our benchmarks.
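How can a trilinear optimization save work? One commonly described approach (often nicknamed "brilinear" filtering) only blends two mipmap levels in a narrow band around the point where plain bilinear filtering would switch levels, and samples a single level everywhere else. NVIDIA has not published exactly what its driver does, so treat this sketch, its band width of 0.25 and its function names as hypothetical illustrations.

```python
import math


def trilinear_weight(lod: float) -> float:
    """Full trilinear: the blend weight toward the next mip level is the fractional LOD."""
    return lod - math.floor(lod)


def reduced_trilinear_weight(lod: float, band: float = 0.25) -> float:
    """Hypothetical 'optimized' trilinear: weight is 0 or 1 (single mip level, bilinear)
    except inside a blend band of width `band` centred where bilinear would switch levels."""
    if not 0 < band <= 1:
        raise ValueError("band must be in (0, 1]")
    f = lod - math.floor(lod)
    lo, hi = 0.5 - band / 2, 0.5 + band / 2
    if f <= lo:
        return 0.0  # only the current mip level is sampled
    if f >= hi:
        return 1.0  # only the next mip level is sampled
    return (f - lo) / band  # linear ramp across the narrowed band


print(reduced_trilinear_weight(2.1), reduced_trilinear_weight(2.5), reduced_trilinear_weight(2.9))
# prints: 0.0 0.5 1.0
```

Wherever the reduced weight is exactly 0 or 1, only one mip level contributes and the second set of texture fetches can be skipped, which is where the performance gain would come from. With `band=1` the function falls back to full trilinear.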

We are not going in depth on the optimizations, but I do want to show you that there are indeed very tiny differences in image quality when you make use of the generic optimizations. Let's have a look at the image below.

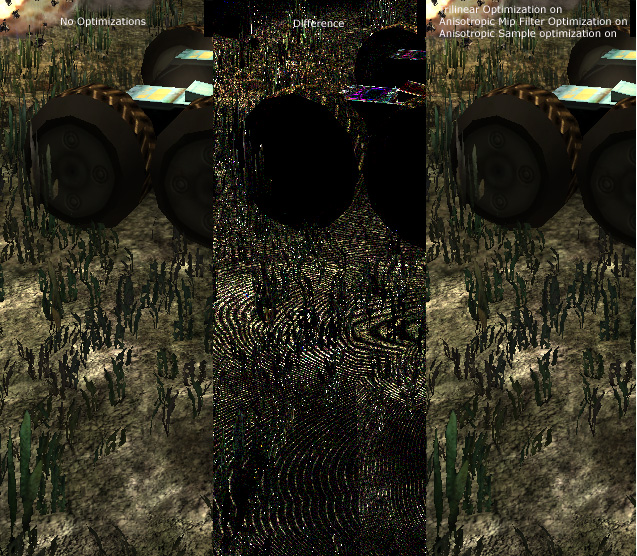

Difference (brightness of the middle image enhanced 3x)

Now then, the left image is a partial screenshot from Aquamark 3 at 1280x1024 with 16x anisotropic filtering and all optimizations disabled. To your right you'll see the same scene with all optimizations enabled. Ignore the middle picture for a while and try to detect some differences. Also keep in mind that an actual game or benchmark renders these frames at high framerates, whereas you are now looking at a still image. Let's do that again, but with the camera placed at a better angle with a deeper view:

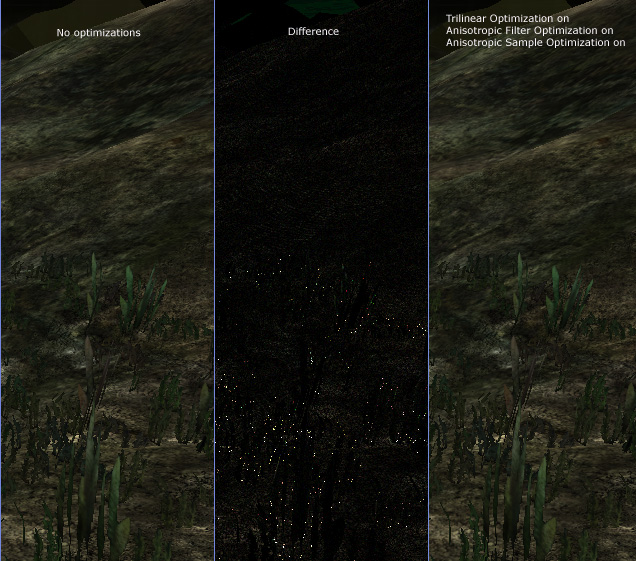

Difference (default brightness)

Difficult to detect the difference, huh? In the middle picture we see a program called The Compressonator (funnily enough, an ATI application) at work. Basically, it compares two images and highlights the differences. For the top images I had to increase the difference brightness by a factor of three to show the difference a bit better, so you know exactly what to look for. The second one shows the difference in the Compressonator's default view. The highlighted dots show the areas with the most differences. I know this is just one application, and image comparisons can go on and on for pages; eventually you will find differences if you look hard enough.
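The basic idea behind a difference view like this can be sketched in a few lines: take the per-pixel absolute difference between two screenshots, multiply it by a gain (3 for the top images, 1 for the default view) and clamp to the displayable range. This is not the Compressonator's actual algorithm; it assumes same-sized grayscale images stored as lists of rows of 0-255 values, and the function name is hypothetical.

```python
def difference_image(img_a: list[list[int]], img_b: list[list[int]], gain: float = 1.0) -> list[list[int]]:
    """Per-pixel |a - b| scaled by `gain` and clamped to 255, so small differences become visible."""
    if len(img_a) != len(img_b) or any(len(ra) != len(rb) for ra, rb in zip(img_a, img_b)):
        raise ValueError("images must have the same dimensions")
    return [
        [min(255, round(abs(a - b) * gain)) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]


optimizations_off = [[10, 200], [50, 50]]
optimizations_on = [[12, 190], [50, 130]]
print(difference_image(optimizations_off, optimizations_on))          # prints: [[2, 10], [0, 80]]
print(difference_image(optimizations_off, optimizations_on, gain=3))  # prints: [[6, 30], [0, 240]]
```

A gain of 3 turns a barely visible difference of 2 into 6, which is why the enhanced top images make the affected areas much easier to spot.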

The sheer number of optimizations is slowly starting to irritate me a little, though. It's getting very confusing for the end user and an extreme pain in the you-know-what for us reviewers. Don't get me wrong here: I praise NVIDIA for making full trilinear filtering a selectable option, and I truly wish that ATI would follow that trend, but this is a little over the top, isn't it? For the true performance tweakers among us, this is l33t stuff though.

Anyway, draw your own conclusions.

Power Supply demand

NVIDIA recommends a 300 Watt power supply for the 6600 series, and basically anyone has at least that in their system these days.

Architecture Characteristics of the GeForce 6 Series

- Pixel pipelines: 16 / 12 / 8

- Superscalar shader: Yes

- Pixel shader operations/pixel: 8

- Pixel shader operations/clock: 128

- Pixel shader precision: 32 bits

- Single texture pixels/clock: 16

- Dual texture pixels/clock: 8

- Adaptive Anisotropic Filtering: Yes

- Z-stencil pixels/clock: 32
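The 128 pixel shader operations per clock in the list above work out as 16 pipelines times 8 operations per pixel. A tiny sketch of that arithmetic, assuming (this is not confirmed here) that the per-pipeline rate stays the same on the 12- and 8-pipeline parts; the function name is hypothetical.

```python
OPS_PER_PIXEL = 8  # "Pixel shader operations/pixel" from the list above


def shader_ops_per_clock(pipelines: int, ops_per_pixel: int = OPS_PER_PIXEL) -> int:
    # Assumption: every pipeline sustains the same per-pixel op rate.
    return pipelines * ops_per_pixel


for pipes in (16, 12, 8):
    print(f"{pipes} pipelines -> {shader_ops_per_clock(pipes)} shader ops/clock")
# prints 128, 96 and 64 shader ops/clock respectively
```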

Let's compare a little: the GeForce 6600 series is manufactured on a 0.11 µm (110 nm) process, whereas I expected it to be 0.13 µm!

Get ready for the most vibrant, lifelike, and elegant graphics ever experienced on a PC. The groundbreaking NVIDIA® GeForce 6 Series of graphics processing units (GPUs) and their revolutionary technologies power worlds where reality and fantasy meet; worlds in which new standards are set for performance, visual quality, realism, and video functionality. The GeForce 6 Series GPUs deliver powerful, elegant graphics to drench your senses, immersing you in unparalleled worlds of visual effects for the ultimate PC experience.

| Specs | GeForce 6600 | GeForce 6600 GT | GeForce 6800 | GeForce 6800 GT | GeForce 6800 Ultra |
|---|---|---|---|---|---|
| Codename | NV43 | NV43 | NV40 | NV40GT | NV40U |
| Transistors | ? | ? | 222 million | 222 million | 222 million |
| Process, GPU maker | 110 nm | 110 nm | 130 nm, IBM | 130 nm, IBM | 130 nm, IBM |
| Core clock | 300 MHz | 500 MHz | Up to 400 MHz | 350 MHz | 400-450 MHz |
| Memory | 128 MB DDR1 | 128 MB GDDR3 | 128 MB DDR1 | 256 MB GDDR3 | 256 MB GDDR3 |
| Memory bus | 128-bit | 128-bit | 256-bit | 256-bit | 256-bit |
| Memory clock | Up to manufacturer | 2 x 500 MHz | 2 x 550 MHz | 2 x 500 MHz | 2 x 600 MHz |
| PCB | P212 | P212 | P2?? | P210 | P210 |
| Pipelines | 8 | 8 | 12 | 16 | 16 |
| FP operations | FP16, FP32 | FP16, FP32 | FP16, FP32 | FP16, FP32 | FP16, FP32 |
| DirectX | DirectX 9.0c | DirectX 9.0c | DirectX 9.0c | DirectX 9.0c | DirectX 9.0c |
| Pixel shaders | PS 3.0 | PS 3.0 | PS 3.0 | PS 3.0 | PS 3.0 |
| Vertex shaders | VS 3.0 | VS 3.0 | VS 3.0 | VS 3.0 | VS 3.0 |
| OpenGL | 1.5+ (2.0) | 1.5+ (2.0) | 1.5+ (2.0) | 1.5+ (2.0) | 1.5+ (2.0) |
| Price | $150 | $229 | $299 | $399 | $499 |
| Availability | Sep/Oct 2004 | Sep/Oct 2004 | May/June 2004 | May/June 2004 | May/June 2004 |