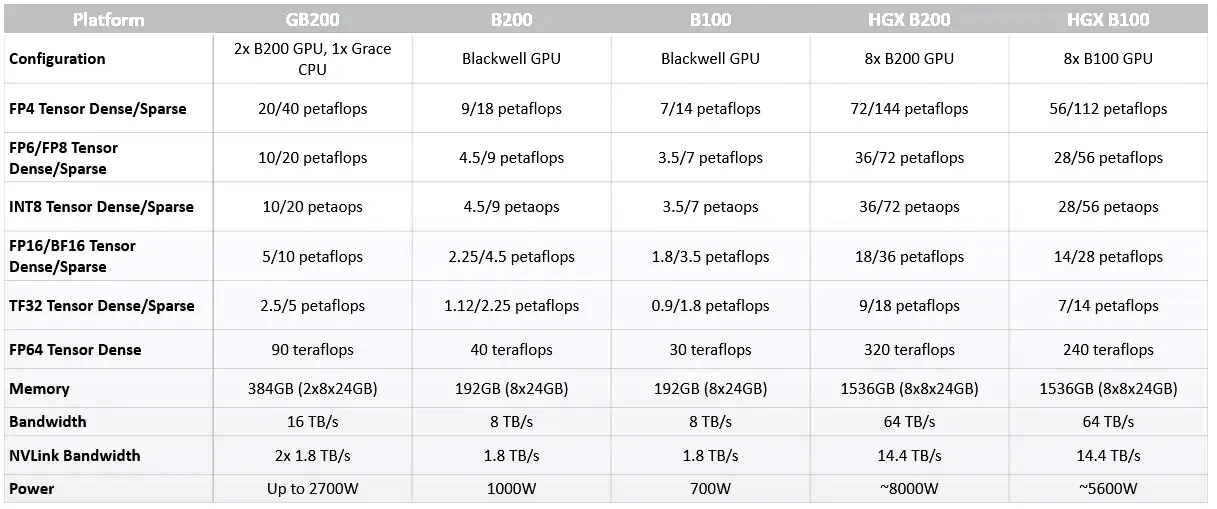

The Blackwell architecture, debuting with the B200 GPU, succeeds the H100/H200 series. NVIDIA also announced the Grace Blackwell GB200 superchip, which pairs a Grace CPU with Blackwell GPUs. Because these products are built for data center applications, future consumer versions of Blackwell may differ significantly from them, and could launch in 2025.

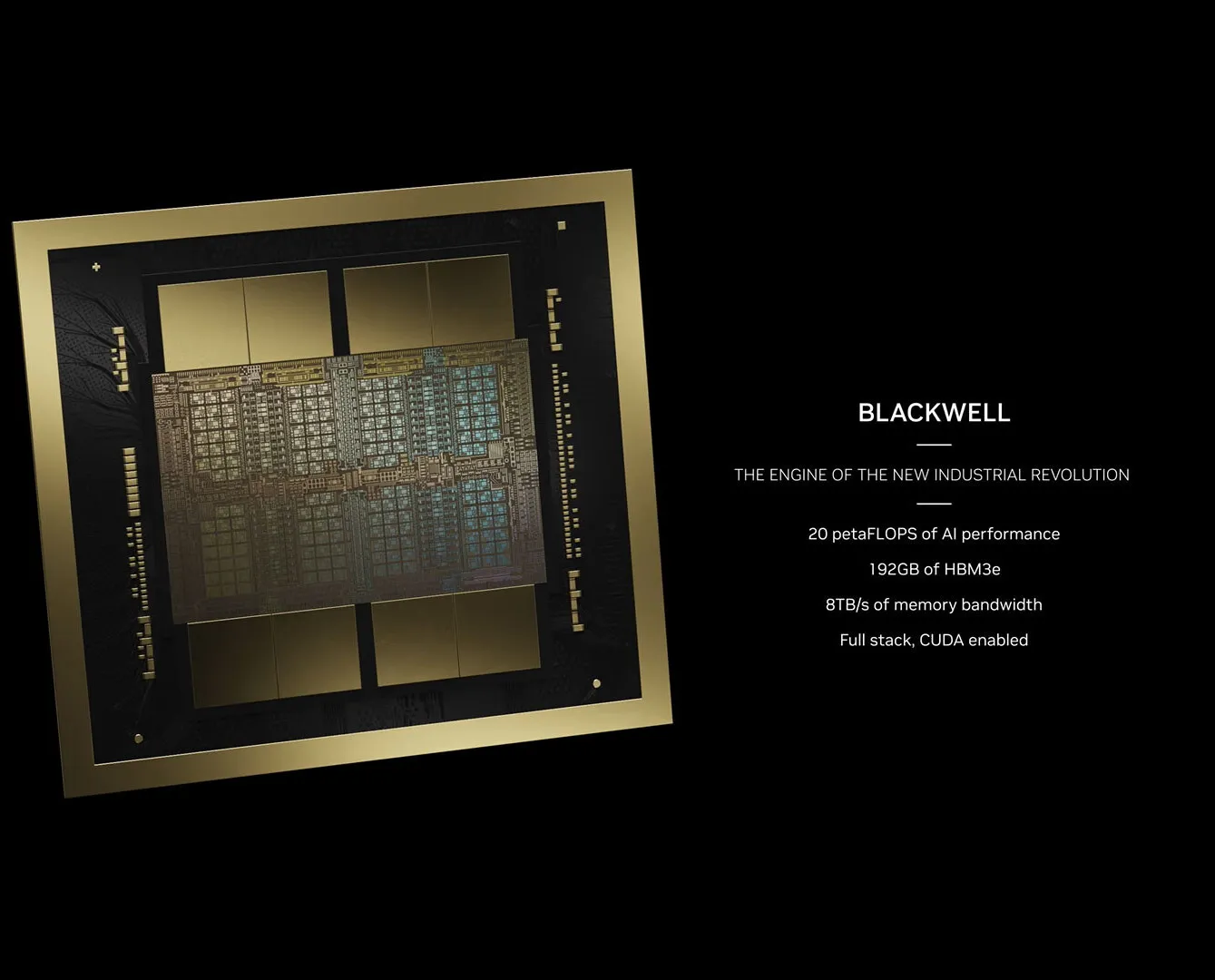

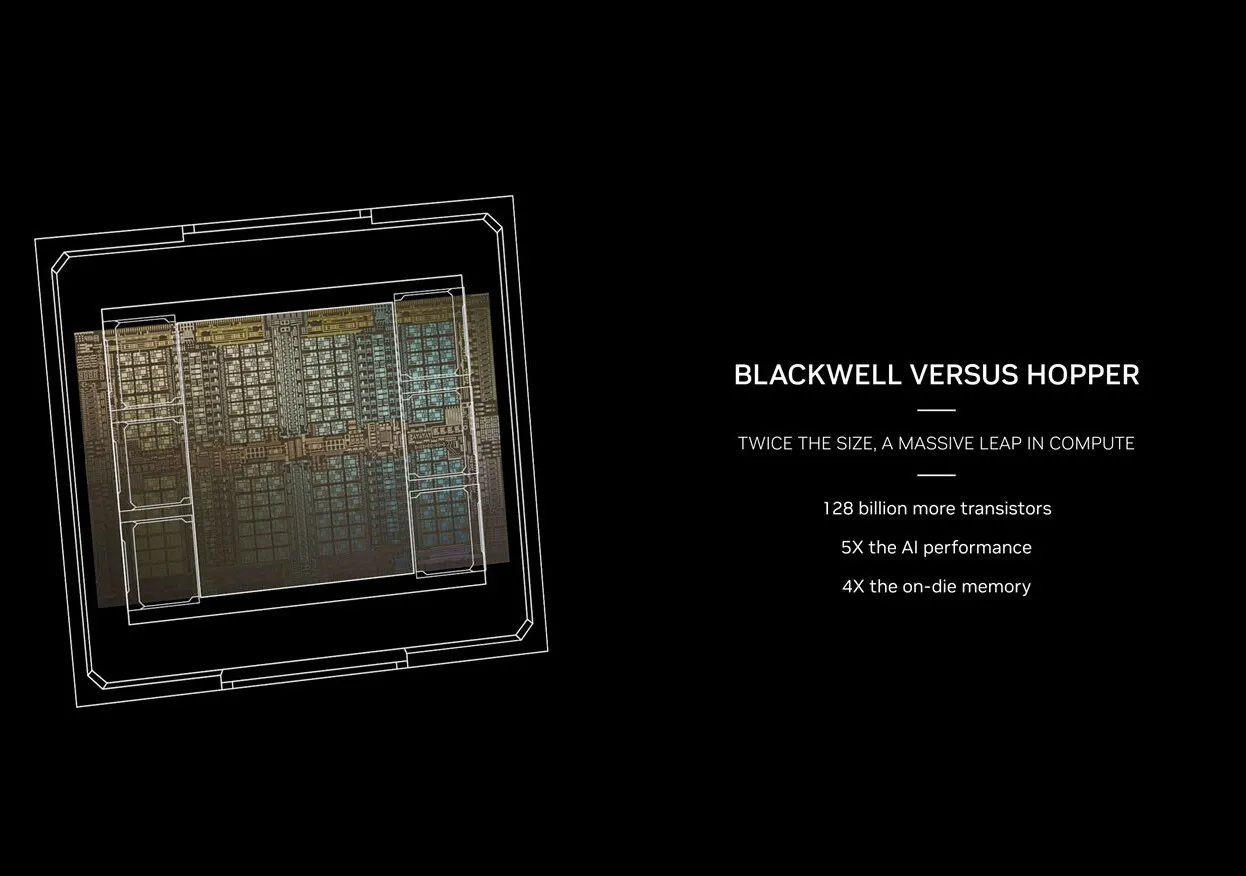

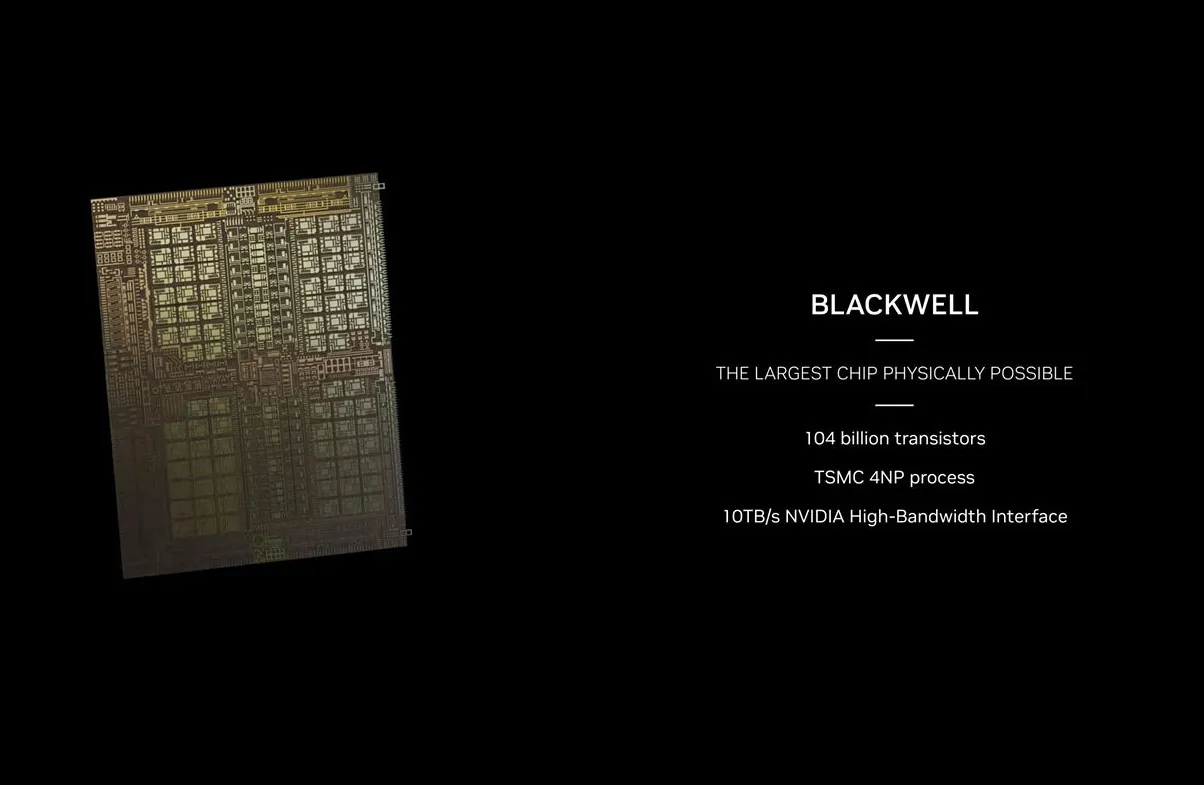

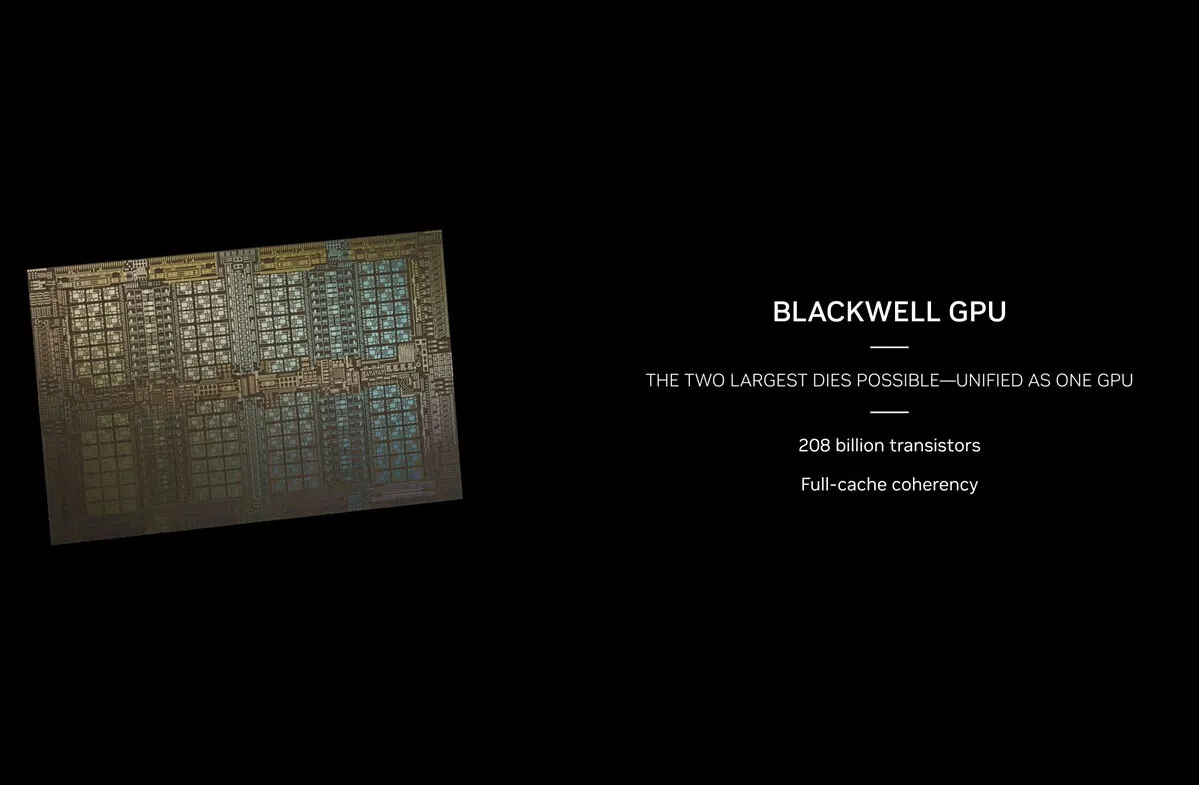

The B200 pushes manufacturing to its limits: it packs 208 billion transistors into two reticle-limited dies (chiplets) of 104 billion transistors each, fabricated on TSMC's custom 4NP process, a 4 nm-class node. The two dies are joined by a custom 10 TB/s die-to-die interface that keeps their caches coherent. Each die drives a 4096-bit HBM3E interface, for a combined 8192-bit bus connected to 192 GB of HBM3E memory and delivering 8 TB/s of memory bandwidth. The package also provides 1.8 TB/s of NVLink bandwidth for connecting to other GPUs.
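The memory figures can be sanity-checked with a little arithmetic. The sketch below assumes eight HBM3E stacks with a 1024-bit interface each (the stack count and per-stack width are our assumptions, not figures from the announcement) and works out the per-pin data rate needed to reach 8 TB/s.

```python
# Back-of-the-envelope check of the B200 memory and transistor figures.
# Assumption (not from the article): 8 HBM3E stacks, each with a 1024-bit interface.

TOTAL_TRANSISTORS = 208e9      # whole B200 package
DIES = 2
HBM_CAPACITY_GB = 192
HBM_BANDWIDTH_TBPS = 8.0       # terabytes per second
STACKS = 8                     # assumed stack count
BITS_PER_STACK = 1024          # assumed per-stack interface width


def per_pin_rate_gbps(bandwidth_tbps: float, bus_bits: int) -> float:
    """Per-pin data rate (Gb/s) needed to reach bandwidth_tbps over bus_bits pins."""
    bits_per_second = bandwidth_tbps * 1e12 * 8   # TB/s -> bits/s
    return bits_per_second / bus_bits / 1e9       # bits/s per pin -> Gb/s


bus_bits = STACKS * BITS_PER_STACK
print(f"transistors per die: {TOTAL_TRANSISTORS / DIES / 1e9:.0f} billion")
print(f"total bus width:     {bus_bits} bits ({bus_bits // DIES} per die)")
print(f"capacity per stack:  {HBM_CAPACITY_GB / STACKS:.0f} GB")
print(f"per-pin data rate:   {per_pin_rate_gbps(HBM_BANDWIDTH_TBPS, bus_bits):.2f} Gb/s")
```

Under these assumptions each pin must carry about 7.81 Gb/s. Calling `per_pin_rate_gbps(8.0, 4096)` instead gives roughly 15.6 Gb/s, which is why a 4096-bit figure only fits a single die's share of the bus here.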

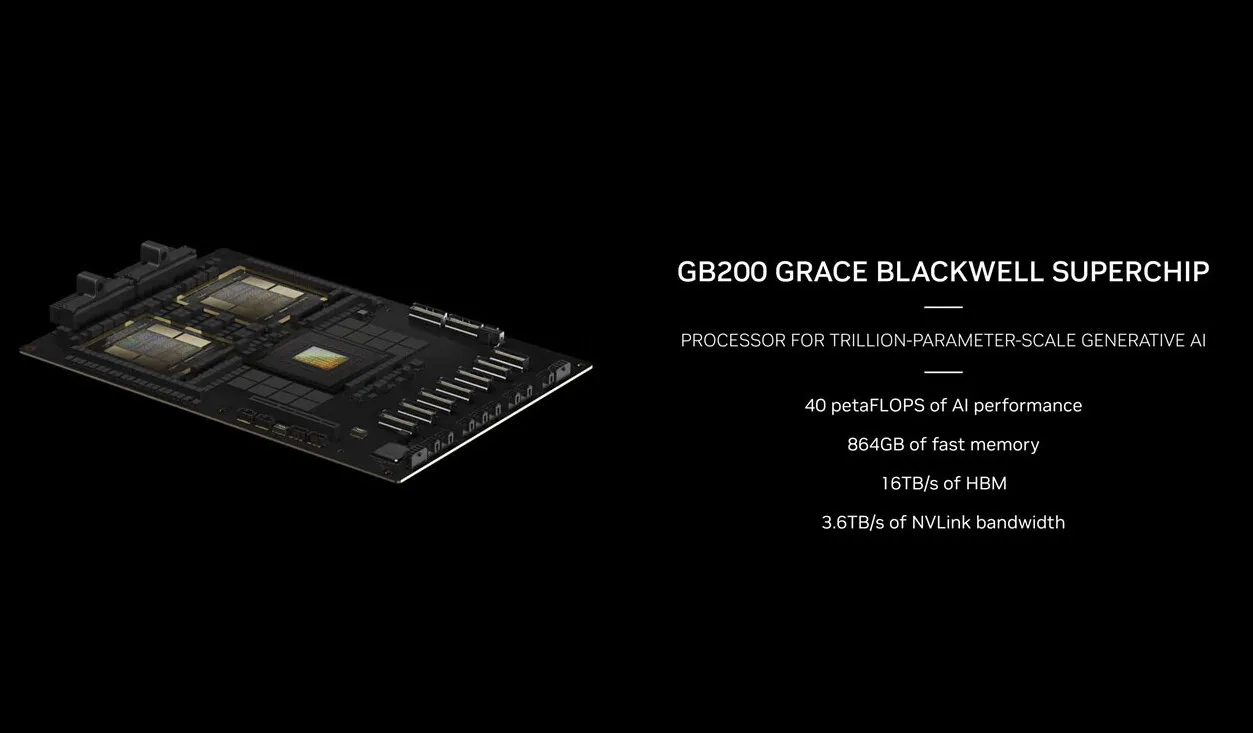

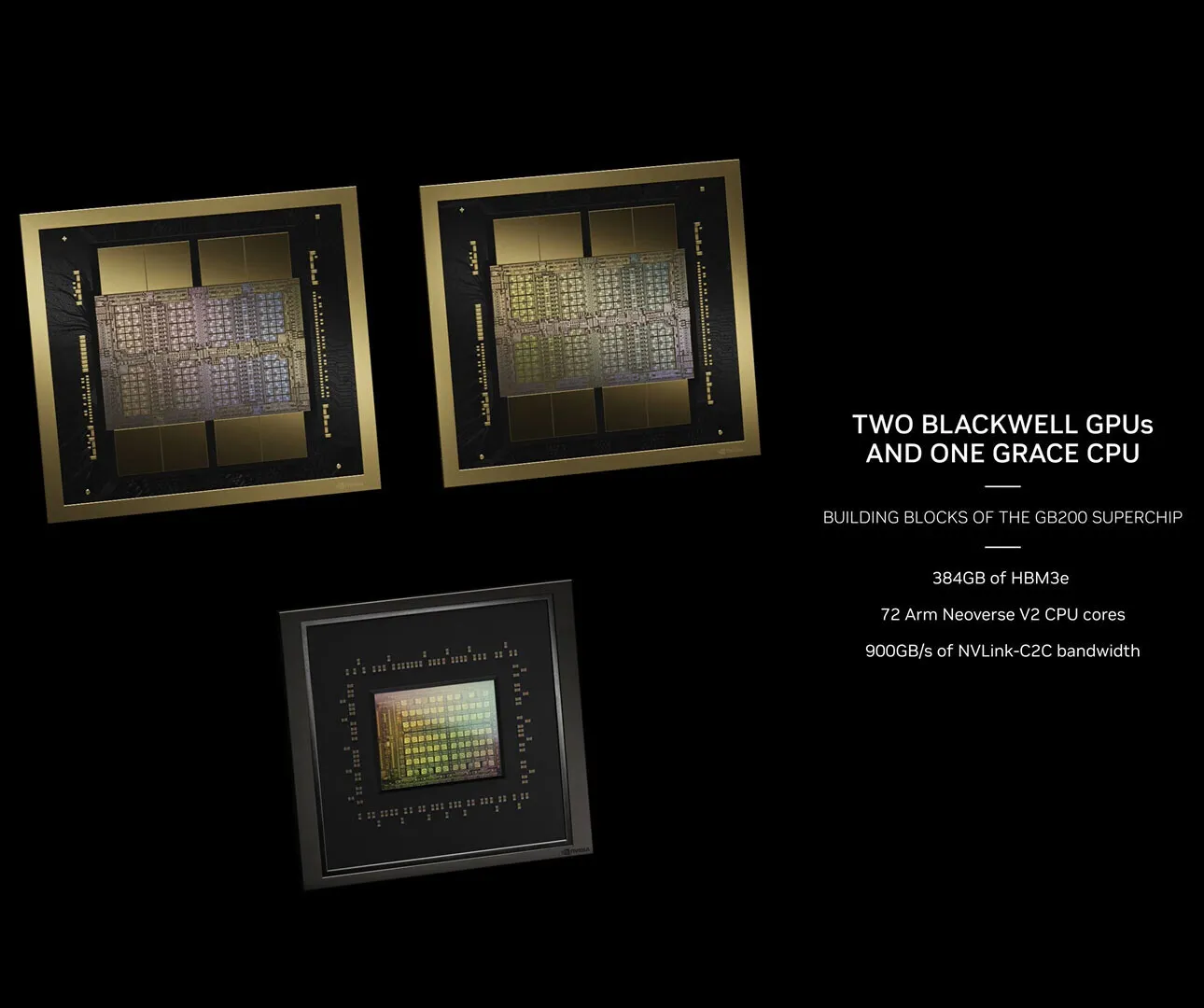

The GB200 superchip goes further, pairing two B200 GPUs with an NVIDIA Grace CPU. NVIDIA positions Grace as stronger at serial, one-operation-after-another work than the conventional CPUs found in most systems, and the superchip links the CPU and GPUs with an even faster interconnect. NVIDIA claims this configuration delivers up to 20 petaflops of AI performance, where one petaflop is 10^15 floating-point operations per second. NVIDIA has also improved several of the GPU's AI-focused units, but has not disclosed full details of the architecture.
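To make a peak throughput figure like 20 petaflops more concrete, it can be converted into wall-clock time for a given amount of work. This is a minimal sketch: the workload size and the 50% utilization are hypothetical values chosen for illustration, and the announcement's numeric precision behind the 20-petaflop figure is not modeled.

```python
# Convert a quoted peak petaflops figure into wall-clock time for a workload.
# Assumptions: WORKLOAD_OPS is hypothetical, and the utilization values are
# illustrative; real workloads rarely sustain the full quoted peak.

PETA = 1e15  # one petaflop = 10^15 floating-point operations per second


def seconds_at_peak(total_ops: float, peak_pflops: float, utilization: float = 1.0) -> float:
    """Time to run total_ops operations at a fraction of the peak throughput."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return total_ops / (peak_pflops * PETA * utilization)


WORKLOAD_OPS = 1e18  # hypothetical workload: 10^18 operations

print(seconds_at_peak(WORKLOAD_OPS, 20))        # full quoted peak
print(seconds_at_peak(WORKLOAD_OPS, 20, 0.5))   # assumed 50% utilization
```

At the full quoted peak the hypothetical workload takes 50 seconds, and at half utilization it takes 100 seconds, which is why sustained efficiency matters as much as the headline number.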

NVIDIA also plans to sell other configurations. HGX B200 pairs eight B200 GPUs with x86 host CPUs, with each GPU rated for up to 1,000 W. HGX B100 is designed to be compatible with existing HGX H100 infrastructure, and each of its GPUs is limited to 700 W. Despite these differences, all of these variants retain the full 8 TB/s of memory bandwidth. NVIDIA intends to ship the B100, B200, and GB200, along with other variants, later this year, so customers will not have to wait long to get them.
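The trade-off between the two HGX boards can be expressed as simple power arithmetic. The sketch below assumes both boards carry eight GPUs (the text states this only for HGX B200) and counts GPU power alone, ignoring CPUs, networking, and cooling; `HgxConfig` is a hypothetical helper defined here for illustration.

```python
# Compare the HGX B200 and HGX B100 power envelopes described above.
# Assumption: both boards carry eight GPUs (stated only for HGX B200).
# HgxConfig is a hypothetical helper, not an NVIDIA API.

from dataclasses import dataclass


@dataclass(frozen=True)
class HgxConfig:
    name: str
    gpus: int
    watts_per_gpu: int
    mem_bandwidth_tbps: float  # per GPU

    def gpu_power_kw(self) -> float:
        """Combined GPU power limit for the board in kW (excludes CPUs, NICs, etc.)."""
        return self.gpus * self.watts_per_gpu / 1000

    def gb_per_s_per_watt(self) -> float:
        """Memory bandwidth per watt of GPU power: TB/s -> GB/s, divided by watts."""
        return self.mem_bandwidth_tbps * 1000 / self.watts_per_gpu


configs = [
    HgxConfig("HGX B200", gpus=8, watts_per_gpu=1000, mem_bandwidth_tbps=8.0),
    HgxConfig("HGX B100", gpus=8, watts_per_gpu=700, mem_bandwidth_tbps=8.0),  # gpus=8 assumed
]

for cfg in configs:
    print(f"{cfg.name}: {cfg.gpu_power_kw():.1f} kW of GPU power, "
          f"{cfg.gb_per_s_per_watt():.2f} GB/s of memory bandwidth per watt")
```

Under these assumptions, HGX B200 draws up to 8.0 kW of GPU power versus 5.6 kW for HGX B100, while the lower-power B100 delivers more memory bandwidth per watt (about 11.43 versus 8.00 GB/s per W) because both keep the same 8 TB/s. A frozen dataclass keeps each configuration immutable, so adding another variant is just one more list entry.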