Fueling the growth of AI services worldwide, NVIDIA today launched an AI data center platform that delivers the industry’s most advanced inference acceleration for voice, video, image and recommendation services.

The NVIDIA TensorRT Hyperscale Inference Platform features NVIDIA Tesla T4 GPUs based on the company’s breakthrough NVIDIA Turing™ architecture and a comprehensive set of new inference software.

Delivering the fastest performance with lower latency for end-to-end applications, the platform enables hyperscale data centers to offer new services, such as enhanced natural language interactions and direct answers to search queries rather than a list of possible results.

“Our customers are racing toward a future where every product and service will be touched and improved by AI,” said Ian Buck, vice president and general manager of Accelerated Business at NVIDIA. “The NVIDIA TensorRT Hyperscale Platform has been built to bring this to reality — faster and more efficiently than had been previously thought possible.”

Every day, massive data centers process billions of voice queries, translations, images, videos, recommendations and social media interactions. Each of these applications requires a different type of neural network residing on the server where the processing takes place.

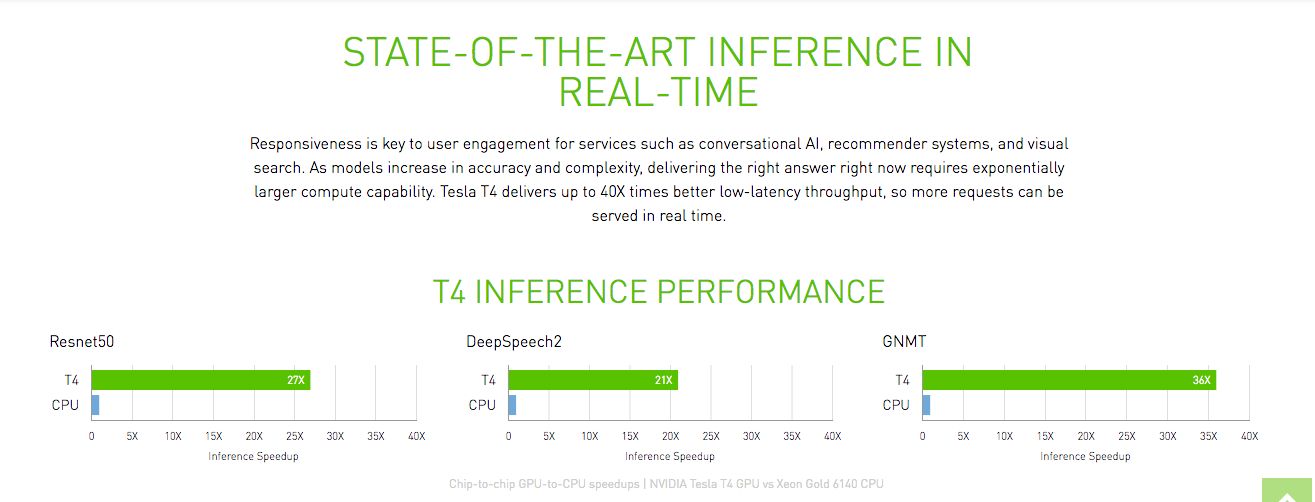

To optimize the data center for maximum throughput and server utilization, the NVIDIA TensorRT Hyperscale Platform includes both real-time inference software and Tesla T4 GPUs, which process queries up to 40x faster than CPUs alone.

NVIDIA estimates that the AI inference industry is poised to grow into a $20 billion market within the next five years.

Industry’s Most Advanced AI Inference Platform

The NVIDIA TensorRT Hyperscale Platform includes a comprehensive set of hardware and software offerings optimized for powerful, highly efficient inference. Key elements include:

- NVIDIA Tesla T4 GPU – Featuring 320 Turing Tensor Cores and 2,560 CUDA® cores, this new GPU provides breakthrough performance with flexible, multi-precision capabilities, from FP32 to FP16 to INT8, as well as INT4. Packaged in an energy-efficient, 75-watt, small PCIe form factor that easily fits into most servers, it offers 65 teraflops of peak performance for FP16, 130 TOPS (trillion operations per second) for INT8 and 260 TOPS for INT4.

- NVIDIA TensorRT 5 – An inference optimizer and runtime engine, NVIDIA TensorRT 5 supports Turing Tensor Cores and expands the set of neural network optimizations for multi-precision workloads.

- NVIDIA TensorRT inference server – This containerized microservice software enables applications to use AI models in data center production. Freely available from the NVIDIA GPU Cloud container registry, it maximizes data center throughput and GPU utilization, supports all popular AI models and frameworks, and integrates with Kubernetes and Docker.
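
The peak figures quoted for the Tesla T4 in the list above double each time the numeric precision is halved. The short Python sketch below works through that arithmetic and derives a rough peak-throughput-per-watt number from the stated 75-watt power figure. The constant and function names are hypothetical and chosen only for illustration. The results are theoretical peaks computed from the numbers in this announcement, not measured application performance, and the sketch assumes the 75-watt figure applies at peak load.

```python
# Peak throughput quoted in this announcement for the Tesla T4,
# in trillions of operations per second, keyed by numeric precision.
PEAK_TERA_OPS = {"FP16": 65, "INT8": 130, "INT4": 260}

# Board power quoted in this announcement (assumed to apply at peak load).
BOARD_POWER_WATTS = 75


def speedup_over_fp16(precision: str) -> float:
    """Ratio of a precision's peak throughput to the FP16 peak."""
    return PEAK_TERA_OPS[precision] / PEAK_TERA_OPS["FP16"]


def tera_ops_per_watt(precision: str) -> float:
    """Peak trillions of operations per second per watt of board power."""
    return PEAK_TERA_OPS[precision] / BOARD_POWER_WATTS


if __name__ == "__main__":
    for precision in PEAK_TERA_OPS:
        print(
            f"{precision}: {speedup_over_fp16(precision):.1f}x the FP16 peak, "
            f"{tera_ops_per_watt(precision):.2f} tera-ops per watt"
        )
```

Whether a given model can actually run at INT8 or INT4 depends on how well it tolerates reduced precision, so these ratios are upper bounds rather than expected gains.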

Supported by Technology Leaders Worldwide

Support for NVIDIA’s new inference platform comes from leading consumer and business technology companies around the world.

“We are working hard at Microsoft to deliver the most innovative AI-powered services to our customers,” said Jordi Ribas, corporate vice president for Bing and AI Products at Microsoft. “Using NVIDIA GPUs in real-time inference workloads has improved Bing’s advanced search offerings, enabling us to reduce object detection latency for images. We look forward to working with NVIDIA’s next-generation inference hardware and software to expand the way people benefit from AI products and services.”

Chris Kleban, product manager at Google Cloud, said: “AI is becoming increasingly pervasive, and inference is a critical capability customers need to successfully deploy their AI models, so we’re excited to support NVIDIA’s Turing Tesla T4 GPUs on Google Cloud Platform soon.”

More information, including details on how to request early access to T4 GPUs on Google Cloud Platform, is available here.