It has been an interesting week, with all the hype around the GeForce GTX 970 and what is now known as its crippled memory subsystem. Three weeks ago, users in our forums here on Guru3D.com started to report oddities with their GeForce GTX 970 graphics cards: the card ran out of memory at 3.5 GB and would not utilize anything beyond that, and there were reports of stutters under high graphics memory usage.

The initial problem was hard to detect, and really... it still is. Three weeks ago even yours truly dismissed the issue, as once I loaded up the latest COD I could see 4 GB of graphics memory being used without any performance issues. End-users kept reporting the problem in our forums, though, and just before the weekend a small CUDA application was released that exposed weird behavior in the graphics subsystem.

There has been discussion about the validity of that application. But regardless of what anybody thinks of it, it put its finger precisely on a sore spot. From there on things escalated rapidly: the Nvidia forums came under attack from Nvidia's own customer base, and Nvidia went into full damage control. They cherry-picked three US sites to explain the problem; we, however, have been bypassed on this issue from the very beginning. But once the train gets rolling, it's hard to stop, right?

It did not take long before Nvidia responded in public:

The GeForce GTX 970 is equipped with 4GB of dedicated graphics memory. However the 970 has a different configuration of SMs than the 980, and fewer crossbar resources to the memory system. To optimally manage memory traffic in this configuration, we segment graphics memory into a 3.5GB section and a 0.5GB section. The GPU has higher priority access to the 3.5GB section. When a game needs less than 3.5GB of video memory per draw command then it will only access the first partition, and 3rd party applications that measure memory usage will report 3.5GB of memory in use on GTX 970, but may report more for GTX 980 if there is more memory used by other commands. When a game requires more than 3.5GB of memory then we use both segments.

We understand there have been some questions about how the GTX 970 will perform when it accesses the 0.5GB memory segment. The best way to test that is to look at game performance. Compare a GTX 980 to a 970 on a game that uses less than 3.5GB. Then turn up the settings so the game needs more than 3.5GB and compare 980 and 970 performance again.

On GTX 980, Shadows of Mordor drops about 24% on GTX 980 and 25% on GTX 970, a 1% difference. On Battlefield 4, the drop is 47% on GTX 980 and 50% on GTX 970, a 3% difference. On CoD: AW, the drop is 41% on GTX 980 and 44% on GTX 970, a 3% difference. As you can see, there is very little change in the performance of the GTX 970 relative to GTX 980 on these games when it is using the 0.5GB segment.
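Nvidia's "1%" and "3%" figures are differences in percentage points between the two cards' drops. The small sketch below, assuming each drop is measured against that card's own sub-3.5 GB baseline, shows one way to turn those points into a relative figure. The function names are ours, purely for illustration.

```python
# Drops quoted in Nvidia's statement: (GTX 980 drop %, GTX 970 drop %)
QUOTED_DROPS = {
    "Shadow of Mordor": (24, 25),
    "Battlefield 4": (47, 50),
    "CoD: AW": (41, 44),
}


def gap_points(drop_980: float, drop_970: float) -> float:
    """Difference between the two drops, in percentage points."""
    return drop_970 - drop_980


def relative_retention(drop_980: float, drop_970: float) -> float:
    """Performance the GTX 970 keeps, relative to what the GTX 980 keeps.

    Assumes each drop is measured against that card's own baseline.
    """
    return (100 - drop_970) / (100 - drop_980)


if __name__ == "__main__":
    for game, (d980, d970) in QUOTED_DROPS.items():
        print(f"{game}: {gap_points(d980, d970)} points, "
              f"retention ratio {relative_retention(d980, d970):.3f}")
```

For Battlefield 4 that ratio is 50/53, roughly 0.943, so the 3-point gap corresponds to about a 5.7% relative shortfall versus the GTX 980 in that scenario.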

After some internal testing over the weekend, we honestly could not reproduce stutters or weird issues beyond the usual effects of running out of graphics memory. Once you exceed ~3.5 GB on the GTX 970, or ~4 GB on the GTX 980, slowdowns or weird behavior can occur, but that goes for any graphics card that runs out of video memory.
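To picture the priority scheme Nvidia describes (fill the 3.5GB section first, only touch the 0.5GB section once a game needs more), here is a toy model. It is a hypothetical sketch, not Nvidia's driver logic, which is not public; it also simplifies by never splitting a single allocation across the two segments.

```python
from dataclasses import dataclass

# Segment sizes as described in Nvidia's statement, in MB.
FAST_SEGMENT_MB = 3584  # 3.5 GB, higher-priority section
SLOW_SEGMENT_MB = 512   # 0.5 GB section


@dataclass
class ToyVram:
    """Hypothetical two-segment VRAM model (not Nvidia's allocator)."""
    fast_used: int = 0
    slow_used: int = 0

    def allocate(self, size_mb: int) -> str:
        # Prefer the 3.5 GB section while it still has room.
        if self.fast_used + size_mb <= FAST_SEGMENT_MB:
            self.fast_used += size_mb
            return "fast"
        # Only spill into the 0.5 GB section once the fast one is full.
        if self.slow_used + size_mb <= SLOW_SEGMENT_MB:
            self.slow_used += size_mb
            return "slow"
        # Both segments exhausted: this is "running out of video memory".
        raise MemoryError("out of video memory (4 GB total)")


if __name__ == "__main__":
    vram = ToyVram()
    print([vram.allocate(512) for _ in range(8)])  # seven "fast", then "slow"
```

In this model a game staying under 3.5 GB never touches the slow section at all, which mirrors why monitoring tools reported 3.5 GB in use on the GTX 970.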

Then we posted update #3 to our story: Nvidia came out and admitted, again only to cherry-picked press, that it had released incorrect information about the GTX 970. The GeForce GTX 970 has 56 ROPs, not 64 as listed in its reviewer's guides. Having fewer ROPs is not a big deal in itself, but it exposes a thing or two about the memory subsystem and L2 cache. Combined with some new features of the Maxwell architecture, this explains why the card's memory is split into the 3.5GB/0.5GB partitions noted above.

Look above (and I am truly sorry to make this so complicated, but it really is just that: complicated). You'll notice that, compared to the GTX 980, the GTX 970 has three disabled SMs, leaving it with 13 active SMs (clusters containing things like the shader processors). The SMs shown at the top are followed by 256KB L2 caches, which in turn pair with 32-bit memory controllers located at the bottom. The crossbar handles communication between the SMs, the caches and the memory controllers.

Notice that grayed-out right-hand L2 on this GPU? That is a disabled L2 block, and each L2 block is tied to ROPs, so the GTX 970 does not have 2,048KB but 1,792KB of L2 cache. Disabling ROPs and L2 like that is actually new and exclusive to Maxwell; on Kepler, disabling an L2/ROP segment would disable the entire section, including a memory controller. So while the L2/ROP unit is disabled, the 8th memory controller on the right is still active and in use. Now that we know Maxwell can disable smaller segments and keep the rest active, we have just learned that the 64-bit memory controllers and their associated DRAM remain usable, but the final 1/8th of the L2 cache is missing. As you can see, that 8th DRAM controller actually needs to buddy up with the 7th L2 unit, and that is the root cause of a big performance issue. The GeForce GTX 970 has a 256-bit bus over a 4GB framebuffer and all memory controllers are active and in use, but disabling the L2 segment tied to the 8th memory controller means overall L2 performance would drop to half of its normal level.
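The L2/ROP numbers above follow from simple per-slice arithmetic. Below is a toy model of that arithmetic; the 8 ROPs per slice is derived from the corrected specs (64 ROPs across 8 slices on the GTX 980), and the helper name is ours, for illustration only.

```python
# Per-slice figures for the GM204 layout described above.
L2_SLICE_KB = 256
ROPS_PER_SLICE = 8      # derived: 64 ROPs / 8 L2 slices on the GTX 980
CONTROLLER_BITS = 32


def gm204_config(active_l2_slices: int, active_controllers: int = 8) -> dict:
    """Toy arithmetic model of an L2/ROP plus memory-controller configuration."""
    return {
        "l2_kb": active_l2_slices * L2_SLICE_KB,
        "rops": active_l2_slices * ROPS_PER_SLICE,
        "bus_bits": active_controllers * CONTROLLER_BITS,
        # Controllers left without their own L2 slice must share a neighbour's.
        "controllers_sharing_l2": max(0, active_controllers - active_l2_slices),
    }


GTX_980 = gm204_config(active_l2_slices=8)
GTX_970 = gm204_config(active_l2_slices=7)  # the 8th controller stays active

if __name__ == "__main__":
    print("GTX 980:", GTX_980)
    print("GTX 970:", GTX_970)
```

The one controller in `controllers_sharing_l2` for the GTX 970 is exactly the 8th controller that has to buddy up with the 7th L2 unit.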

Nvidia needed to tackle that problem and did so by splitting the total 4GB of memory into a primary 3.5GB partition (196 GB/sec) that uses the first seven memory controllers and their associated DRAM, and a secondary 0.5GB partition (28 GB/sec) tied to the 8th and last memory controller. Nvidia could, and probably should, have marketed the card as a 3.5GB product; it could even have deactivated an entire right-side quad and gone for a 192-bit memory interface tied to just 3GB of memory, but it did not pursue that alternative because this solution offers better performance. Nvidia claims that games hardly suffer from this design/workaround. In a rough, simplified explanation: the disabled L2 unit creates a challenge, a performance hit tied to one of the memory controllers. To sidestep that hit, the memory is split into two segments, a tweak to get the most out of a less-than-ideal situation. Both memory partitions are active and in use; the primary 3.5 GB partition is very fast, while the 512MB secondary partition is much slower.
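The 196 GB/sec and 28 GB/sec figures are simply the per-controller share of the card's total bandwidth. A minimal sketch of that arithmetic, assuming the GTX 970's rated 7 Gbps effective GDDR5 data rate and one 512 MB DRAM chip per 32-bit controller:

```python
# Assumptions: 7 Gbps effective GDDR5 data rate per pin, and one 512 MB
# DRAM chip behind each 32-bit memory controller.
DATA_RATE_GBPS = 7.0
CONTROLLER_BITS = 32
MB_PER_CONTROLLER = 512


def controller_bandwidth_gbs(bits: int = CONTROLLER_BITS,
                             rate_gbps: float = DATA_RATE_GBPS) -> float:
    """Peak bandwidth of one controller in GB/s (bits * Gbps / 8 bits per byte)."""
    return bits * rate_gbps / 8


def partition(controllers: int) -> tuple:
    """(capacity in MB, peak bandwidth in GB/s) for a group of controllers."""
    return controllers * MB_PER_CONTROLLER, controllers * controller_bandwidth_gbs()


if __name__ == "__main__":
    print("primary:", partition(7))    # first seven controllers
    print("secondary:", partition(1))  # the 8th controller
    print("whole bus:", partition(8))
```

These are theoretical peaks; real-world throughput depends on how the driver schedules traffic across the two partitions.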

Thing is, the fact remains that nobody is really having massive issues; dozens and dozens of outlets have tested the card in in-depth reviews, like the ones here on my site. As for the stutters you see in some of the videos, to date I have not been able to reproduce them unless you do crazy stuff, and I've been on this all weekend. Overall scores are good, and sure, if you run out of memory at some point you will see performance drops. But then you drop from 8x to something like 4x AA, right?

The continuation of the story

While I do think this could easily have started out as a mistake by Nvidia in disclosing information, I do believe Nvidia has been lying. There are dozens if not hundreds of GeForce GTX 970 reviews out on the web. You cannot tell me that, with all the engineers Nvidia has, not one of them noticed the wrong specs in any of these reviews. This was kept low profile. You also need to realize that the memory partitioning was a deliberate fix for an otherwise nagging issue. Nvidia actively chose not to share that information with the media, and thus with its end-users.

Middle Earth: Shadow of Mordor GeForce GTX 970 VRAM stress test

Many of you have asked me to look into this, and we did. All our reviews of the GTX 970 stand as they are; they show the real gaming performance of this card. Over the past few days we did take a closer look at some of the reports out there. At 2560x1440 I tried to fill up the graphics memory, but most games simply do not use more than 1.5 to 3 GB at that resolution, even with the very best image quality settings, including MSAA levels of up to 8x. At the best settings and WQHD we tried Alien Isolation, Alan Wake, BioShock Infinite, Hitman: Absolution, Metro Last Light, Thief, Tomb Raider and Assassin's Creed Black Flag. The only way to try to replicate the stutters people have been experiencing is to use FCAT. Our FCAT setup cannot measure at Ultra HD; we are DVI-bound to 2560x1440. Upgrading to a DP splitter would easily cost roughly 600 EUR, and our capture card, a 1500 EUR product as it sits right now, likely can't handle UHD either (just to give you an idea of what this stuff costs).

Let me state this clearly: the GTX 970 is not an Ultra HD card. It has never been marketed as such, and we never recommended even a GTX 980 for Ultra HD gaming. So if you look at that resolution and zoom in, then of course you are bound to run into performance issues, but so does the GTX 980. These cards are still too weak for that resolution combined with proper image quality settings. Remember, Ultra HD = 4x 1080P. To quote my own GTX 970 conclusion, "it is a little beast for Full HD and WQHD gaming combined with the best image quality settings", and within that context I really think it is valid to stick to a maximum of 2560x1440, as 1080P and 1440P are the real domain for these cards. Face it, if you planned to game at Ultra HD, you would not buy a GeForce GTX 970.

The two titles that do pass 3.5 GB (without any tricks) are Call of Duty: Advanced Warfare and, of course, the title most often reported to stutter, Middle Earth: Shadow of Mordor. We measured, played and fragged with COD, and there is just NOTHING to detect with the graphics memory fully loaded and in use. But we know that COD simply likes to cache a lot of stuff in VRAM, as opposed to using it for rendering. So our focus for this quick test remains Middle Earth: Shadow of Mordor.

We render at 3840x2160 (Ultra HD) but output at a 2560x1440 (WQHD) resolution

Above you will find an FCAT measurement; we ran a similar sequence twice, once at roughly 3GB (lower image quality settings) and once at 3.6 GB, the maximum this card and game seem to deal with. Obviously in the first condition the frame rates will be higher and thus the latency lower. That is not the interesting part; what is interesting is whether, once we pass 3.5 GB and the last 512 MB starts to weigh in on rendering, there is any stuttering effect. Basically, we load up VRAM by using the new DSR feature: we render at Ultra HD and output at 1440P. This method is perfect for use with FCAT.
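Why does this DSR trick fill up VRAM so effectively? A quick pixel count shows how much more work the card does compared to native output. This is plain arithmetic; the 2.25 result corresponds to DSR's 2.25x setting at a 1440P output, and it also confirms the "Ultra HD = 4x 1080P" rule of thumb.

```python
def pixel_count(width: int, height: int) -> int:
    return width * height


FULL_HD = (1920, 1080)
WQHD = (2560, 1440)
UHD = (3840, 2160)


def render_scale(render: tuple, output: tuple) -> float:
    """Rendered pixels per output pixel (the DSR factor for that pair)."""
    return pixel_count(*render) / pixel_count(*output)


if __name__ == "__main__":
    print("UHD over WQHD:", render_scale(UHD, WQHD))
    print("UHD over Full HD:", render_scale(UHD, FULL_HD))
```

Render targets and their associated buffers scale with that pixel count, which is why rendering at UHD pushes memory usage toward the 3.5 GB mark far more easily than native 1440P does.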

Update: We tested specifically with this title because it reportedly runs into problems (stutters) at the point where it can't use any more VRAM; with our card, that is 3.6 GB. Read that carefully: we cannot load up the card with more VRAM even with heavier DSR, and that is precisely the point where people have been complaining about stuttering and other issues.

Let’s have a look with FCAT.

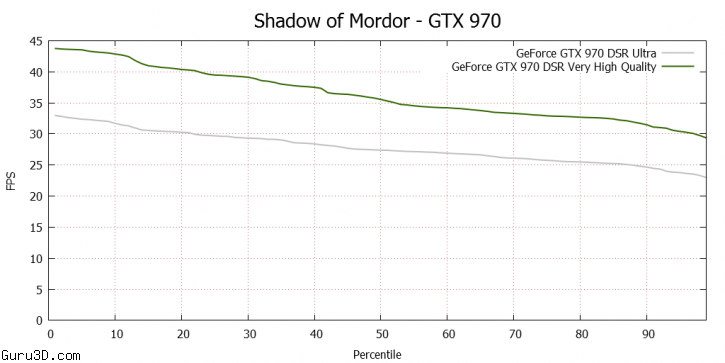

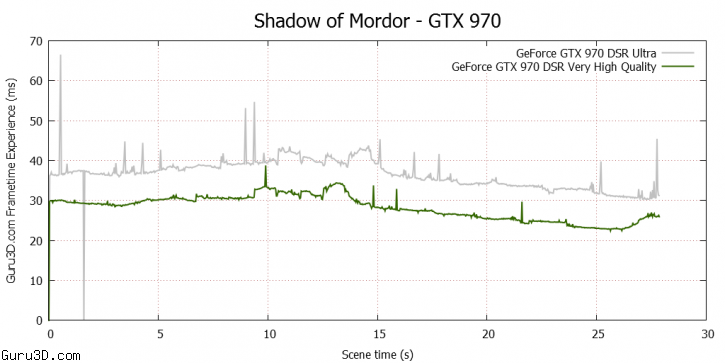

- 2560x1440 – Very high Quality + DSR @ 3840x2160 = almost 3 GB VRAM usage

- 2560x1440 – Ultra Quality + DSR @ 3840x2160 = almost 3.6 GB VRAM usage

Obviously the framerates differ. But the focus should be on the spikes in the second chart, frames with a higher latency. I stick to my initial findings here: there is no significant evidence of massive or weird behavior once your graphics memory passes 3.5 GB and the card starts using the 512MB segment (if it does so at all). There is, however, an increase in really tiny latency spikes (grey line = UHD DSR with Ultra quality settings). Because they are above 40ms, these are visible, but they last a split second and we measured only four of them over 28 seconds. That's nowhere close to what you see in some of the videos posted on YouTube.
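For those who want to check their own frame-time captures the same way, here is a minimal sketch of spike counting. It reuses the 40 ms threshold from the text; the decision to count a run of consecutive slow frames as a single spike is our assumption, not necessarily how FCAT tooling reports it, and the sample data is synthetic.

```python
from typing import Sequence

# The text treats spikes above 40 ms as visible; that threshold is reused here.
VISIBLE_SPIKE_MS = 40.0


def count_spikes(frame_times_ms: Sequence[float],
                 threshold_ms: float = VISIBLE_SPIKE_MS) -> int:
    """Count latency spikes; consecutive frames above the threshold count once."""
    spikes = 0
    in_spike = False
    for frame_time in frame_times_ms:
        above = frame_time > threshold_ms
        if above and not in_spike:
            spikes += 1
        in_spike = above
    return spikes


def spikes_per_second(spike_count: int, duration_s: float) -> float:
    return spike_count / duration_s


if __name__ == "__main__":
    # Synthetic frame times for illustration only, not our FCAT data.
    sample = [16.7, 16.9, 45.2, 47.0, 17.1, 16.8, 41.3, 16.6]
    print(count_spikes(sample))  # two runs above 40 ms
```

Four spikes over 28 seconds works out to roughly 0.14 per second, or about one every seven seconds.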

We could show you FCAT results like the ones above endlessly, and they would all look rather similar. And remember, we are rendering at Ultra HD resolution with Ultra quality settings there.

Concluding

Our product reviews from the past few months, and their conclusions, are no different after everything that has happened in the past few days; the product still performs as we have shown you because, hey, it is in fact the same product. The clusterfuck Nvidia created here is simple: they did not inform the media or their customers about the memory partitioning and the challenges that come with it. Overall you will have a hard time pushing any card over 3.5 GB of graphics memory usage with any game unless you do some freaky stuff. The titles that do pass 3.5 GB are mostly poor console ports, or situations where you game at Ultra HD or use DSR Ultra HD rendering. In those situations I cannot guarantee that your overall experience will be trouble-free; however, we have had a hard time detecting and replicating the stuttering issues some people have mentioned.

The Bottom line

Using graphics memory beyond 3.5 GB can result in performance issues, as the card needs to manage some really weird stuff in memory; it's almost a form of load-balancing. But the fact remains that it seems to handle this well; oddities are hard to detect and replicate. If you unequivocally refuse to accept the situation at hand, you really should return your card and pick a Radeon R9 290X or GeForce GTX 980. However, if you decide to upgrade to a GTX 980, you will be spending more money and thus rewarding Nvidia for it. Until further notice our recommendation on the GeForce GTX 970 stands as it was: for the money, it is an excellent performer. But it should have been called a 3.5 GB card with a 512MB L3 GDDR5 cache buffer.

The solution Nvidia pursued is complex and rather ungraceful, IMHO. Nvidia needed to slow down the GeForce GTX 970 relative to the GTX 980, and the root cause of all this discussion was disabling that one L2 cluster with its ROPs. Nvidia could also have opted for other solutions:

- Release a 3GB card and disable the entire ROP/L2 and two 32-bit memory controller block. You'd have a very nice 3GB card and people would have known what they actually purchased.

- Even better, to avoid the L2 cache issue altogether, leave the L2 enabled and the ROPs intact, and if you need your product to perform worse than, say, the GTX 980, disable an extra cluster of shader processors: twelve instead of thirteen.

- Simply enable twelve or thirteen shader clusters and lower the voltages and core/boost clock frequencies. Set a cap on voltage to limit overclocking. Good for power efficiency as well.
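To compare those alternatives with what actually shipped, the sketch below runs the same per-unit arithmetic over each configuration. The 128 CUDA cores per Maxwell SM and 8 ROPs per L2 slice are known per-unit figures; keeping 13 SMs for option 1 and picking 13 for option 3 are our assumptions, since the options leave those open.

```python
from dataclasses import dataclass

CORES_PER_SM = 128      # Maxwell SM
L2_SLICE_KB = 256
ROPS_PER_SLICE = 8
MB_PER_CONTROLLER = 512
CONTROLLER_BITS = 32


@dataclass(frozen=True)
class Config:
    name: str
    sms: int
    l2_slices: int
    controllers: int

    def summary(self) -> dict:
        return {
            "cuda_cores": self.sms * CORES_PER_SM,
            "rops": self.l2_slices * ROPS_PER_SLICE,
            "l2_kb": self.l2_slices * L2_SLICE_KB,
            "bus_bits": self.controllers * CONTROLLER_BITS,
            "memory_mb": self.controllers * MB_PER_CONTROLLER,
            # True when every controller has its own L2 slice (no 3.5/0.5 split).
            "uniform_memory": self.l2_slices >= self.controllers,
        }


OPTIONS = [
    Config("GTX 970 as shipped", sms=13, l2_slices=7, controllers=8),
    Config("Option 1: 3GB, 192-bit", sms=13, l2_slices=6, controllers=6),
    Config("Option 2: 12 SMs, full L2/ROPs", sms=12, l2_slices=8, controllers=8),
    Config("Option 3: 13 SMs, lower clocks", sms=13, l2_slices=8, controllers=8),
]

if __name__ == "__main__":
    for option in OPTIONS:
        print(option.name, option.summary())
```

Only the shipped configuration ends up with `uniform_memory` set to False; every alternative keeps a one-to-one pairing between L2 slices and memory controllers, which is exactly what avoids the split memory pool.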

We hope never to see a graphics card configured like this again, as it would get toasted by the media for what Nvidia did here. It's simply not the right thing to do. One last note: right now Nvidia is in full damage-control mode. Early in the week we submitted questions on this topic to Nvidia US, specifically to Jonah Alben, SVP of GPU Engineering. On Monday Nvidia suggested a phone call with him; however, due to prior appointments we asked for a Q&A session over email. To date neither he nor anyone else from the US HQ has responded to these questions from Guru3D.com. Really, to date we have yet to receive a single word of information from Nvidia on this topic.

We are slowly starting to wonder, though, why certain US press is always so highly prioritized and cherry-picked… Nvidia?