Meet NVIDIA Turing

NVIDIA GeForce RTX 2080 and 2080 Ti

Unraveling NVIDIA's biggest enigma to date

It's been a long time coming, but NVIDIA is ready to announce its new consumer graphics cards next week. Judging by past launches, they'll start with the GeForce GTX, or what I now believe will be called the GeForce RTX, 2080 series. In this weekend write-up I wanted to take a peek at what we can expect, as the actual GPU was already announced this week at Siggraph. There are a number of things we need to walk you through. First off, names and codenames. We've seen and heard it all over the past months: one guy on a forum yells Ampere, and all of a sudden everybody is gossiping about something called Ampere. Meanwhile, with GDDR6 memory on the rise, a viable alternative would have been a 12nm Pascal refresh with that snazzy new memory. That would have been a cheaper route to pursue, as the competition currently does not really have an answer. Cheaper to fab, however, is not always better, and in this case I was already quite certain that the memory interface of Pascal (NVIDIA's current-generation architecture) is not compatible with GDDR6.

Back in May I visited NVIDIA for a small event, and talking to them it already became apparent that a Pascal refresh was not likely. Literally, the comment from the NVIDIA representative was 'well, you know that if we release something, you know we're going to do it right'. That comment pretty much sent the theory of a Pascal respin down the drain. But hey, you never know, right? There was also another theory: Volta with GDDR6, which seemed the more viable and logical solution. However, the dynamic changed big time this week. To explain, I need to go back to February this year, when I posted a news item: Reuters had mentioned a new codename that was not present on any of NVIDIA's roadmaps, Turing. That was a pivotal point, but it also added more confusion. See, Turing is a name more closely related to, and better fitted for, AI products (think of the Turing test for artificial intelligence).

In case you had not noticed, look at this: Tesla, Kepler, Maxwell, Fermi, Pascal, Volta. That is your main hint. Historically, NVIDIA has named its architectures after famous scientists, mathematicians, and physicists. Turing, however, sounded more plausible than Ampere, even though Ampere, a physicist, would have fit the pattern just as well.

Meet Alan Turing

Alan Turing is famous for his hypothetical machine (the Turing machine) and for the Turing test. The machine can, in principle, simulate any computer algorithm, no matter how complicated it is. The test is still applied these days as a yardstick for whether an AI can pass for intelligent.

At this point I'd like to point you towards the beautiful movie Ex Machina; the entire movie could have been named The Turing Test. The idea, which Turing put forward in a 1950 paper, is that a computer could be said to "think" if a human interrogator could not tell it apart, through conversation, from a human being. In that paper, Turing suggested that rather than building a program to simulate the adult mind, it would be better to produce a simpler one to simulate a child's mind and then subject it to a course of education. A reversed form of the Turing test is actually widely used on the Internet: the CAPTCHA, which is intended to determine whether the user is a human or a computer. Not bad for a test designed in 1950, my friends. During WW2, Turing also led Hut 8, the section responsible for German naval cryptanalysis. He devised a number of techniques for speeding up the breaking of German ciphers, including improvements to the pre-war Polish bombe method, an electromechanical machine that could find settings for the Enigma machine. He played a big role in cracking intercepted coded messages, which enabled the Allies to defeat the Nazis in many crucial engagements, including the Battle of the Atlantic.

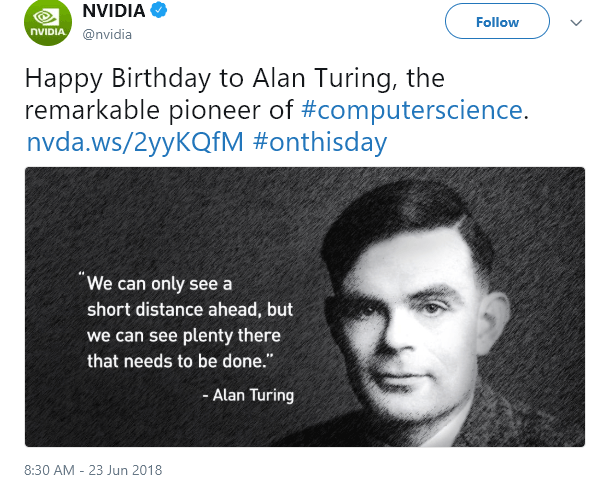

Back to graphics processors, though. Back in June, NVIDIA posted a tweet celebrating Alan Turing's birthday. That was the point where I realized that at least one of the new architectures would be called Turing. All the hints led up to Siggraph 2018: last Monday NVIDIA announced new professional graphics cards called... Quadro RTX 8000, 6000 and 5000, and the GPU powering them is Turing.

Turing is the new GPU architecture (and adds assisted hybrid ray tracing)

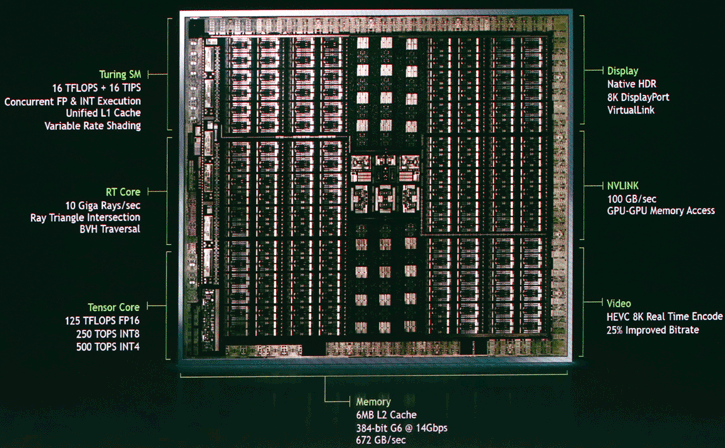

The graphics architecture called Turing is new. It's a bit of Pascal (shader processors for gaming), a bit of Volta (Tensor cores for AI and deep learning), and more. Turing has it all: the fastest fully enabled GPU presented has 4608 shader processors paired with 576 Tensor cores. What's new, however, is the addition of RT cores. Now, I would love to call them RivaTuner cores (pardon the pun), but these obviously are ray tracing cores, meaning NVIDIA has added dedicated logic to speed up certain ray tracing processes. Why is that important? Well, if you can speed up techniques that would normally take hours to render, games can achieve greater image quality in real time.

With ray tracing, you are basically mimicking the behavior, looks, and feel of a real-life environment in a computer-generated 3D scene: wood looks like wood, fur on animals looks like actual fur, glass refracts light like glass, and so on. But can ray tracing be applied in games? Short answer: yes and no; partially. As you might remember, Microsoft has released an extension to DirectX: DirectX Raytracing (DXR). NVIDIA, on its end, announced RTX on top of that, a combination of hardware and software that makes use of the DirectX Raytracing API.
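To make "certain ray tracing processes" a bit more concrete: at its core, a ray tracer keeps asking which triangle a given ray hits first. NVIDIA has described its RT cores as accelerating bounding volume hierarchy (BVH) traversal and ray/triangle intersection tests. Below is a minimal Python sketch of the classic Möller–Trumbore ray/triangle intersection test, shown only to illustrate the kind of per-ray math involved; it is not how the RT cores work internally (those details are not public), and the function and helper names are our own.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Möller–Trumbore test: distance t along the ray to the hit, or None on a miss."""
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:               # ray runs parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det         # distance from the origin along the ray
    return t if t > eps else None    # ignore hits behind the ray origin
```

A ray fired along the z-axis through (0.25, 0.25) hits the unit right triangle in the z = 0 plane at distance 1.0; a ray that passes outside the triangle, runs parallel to it, or points away from it returns None. A real-time renderer runs tests like this, plus BVH traversal to skip most triangles, for millions of rays per frame, which is the workload dedicated hardware is meant to take off the shader cores.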

NVIDIA has been working on ray tracing for, I think, over a decade, which in 2018 results in what they call 'NVIDIA RTX Technology'. All of that work fed into the API Microsoft announced as DirectX Raytracing, and NVIDIA added a layer called RTX on top of it, a combination of software and hardware algorithms. NVIDIA will offer developers libraries that include options like area shadows, glossy reflections, and ambient occlusion. However, to drive such technologies you need a properly capable GPU. Volta was the first and only GPU that could work with DirectX Raytracing and RTX. And here we land at the Turing announcement: it has unified shader processors and Tensor cores, but now also what are referred to as RT cores (not RivaTuner, but ray tracing cores). NVIDIA has been offering GameWorks libraries for RTX and is partnering up with Microsoft on this. RTX will find its way into game engines like Unreal Engine, Unity and Frostbite, and NVIDIA has also teamed up with game developers like EA, Remedy, and 4A Games.

GeForce RTX 2080 and 2080 Ti – Real-time ray-traced computer graphics?

Aaaaalrighty then ... RTX?

Yes, we believe that NVIDIA will replace GTX with RTX. There have been a number of certifications and registrations from NVIDIA mentioning RTX, and earlier today a new leak revealed an AIB product listed as RTX. The new Quadro series has already been named Quadro RTX 8000, and with everything ray tracing in this GPU, we do think the naming will end up as RTX as opposed to GTX. NVIDIA could easily keep using GTX for the consumer parts; however, logic suggests otherwise.

| | Quadro RTX 8000 | Quadro RTX 6000 | Quadro RTX 5000 | Quadro GV100 | Quadro P6000 |
|---|---|---|---|---|---|
| Architecture | Turing | Turing | Turing | Volta | Pascal |
| FP32 ALUs | 4608 | 4608 | 3072 | 5120 | 3840 |
| Tensor cores | 576 | 576 | 384 | 640 | - |
| RT cores | Yes | Yes | Yes | - | - |
| FP32 performance | 16 TFLOPS | ? TFLOPS | ? TFLOPS | 14.8 TFLOPS | 12 TFLOPS |
| Memory | 48 GB GDDR6 | 24 GB GDDR6 | 16 GB GDDR6 | 32 GB HBM2 | 24 GB GDDR5X |
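The table quotes peak FP32 numbers for only some of the cards. Assuming the usual convention that every FP32 ALU completes one fused multiply-add per clock (counted as two FLOPs), you can run the math backwards and see which clock speed those TFLOPS figures imply. This is a back-of-the-envelope sketch, not an official clock specification; the "?" entries for the RTX 6000 and 5000 cannot be filled in this way without a clock figure.

```python
# Assumption: each FP32 ALU retires one fused multiply-add (2 FLOPs) per clock.
FLOPS_PER_ALU_PER_CLOCK = 2


def implied_clock_mhz(peak_tflops: float, fp32_alus: int) -> float:
    """Clock (MHz) at which fp32_alus would reach peak_tflops."""
    return peak_tflops * 1e12 / (fp32_alus * FLOPS_PER_ALU_PER_CLOCK) / 1e6


# (peak FP32 TFLOPS, FP32 ALUs) from the Quadro table; RTX 6000/5000 have no TFLOPS figure.
QUADROS = {
    "Quadro RTX 8000": (16.0, 4608),
    "Quadro GV100": (14.8, 5120),
    "Quadro P6000": (12.0, 3840),
}

for name, (tflops, alus) in QUADROS.items():
    print(f"{name}: ~{implied_clock_mhz(tflops, alus):.0f} MHz")
```

That works out to roughly 1.74 GHz for the Quadro RTX 8000, 1.45 GHz for the GV100 and 1.56 GHz for the P6000, which gives a feel for the clocks a fully enabled Turing chip was expected to run at.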

We’ve now covered the basics and the theoretical bits. The new graphics card series will advance on everything related to gaming performance, but also appears to have Tensor cores on board as well as ray tracing cores. The Quadro announcements have already shown the actual GPU, and historically NVIDIA uses the same GPU for its high-end consumer products. It could be that a number of shader processors or Tensor cores are cut or disabled, but the generic spec is what you see below.

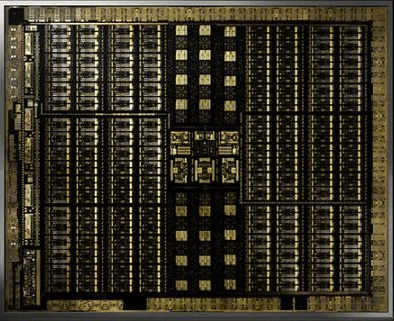

Meet the TU102 GPU

Looking at the Turing GPU, there is a lot you will recognize, but there certainly have been fundamental changes to the building blocks of the architecture: the SM (Streaming Multiprocessor) clusters have been split into separate, isolated core blocks, something that will look familiar from the Volta GPU architecture.

| GeForce | RTX 2080 Ti FE | RTX 2080 Ti | RTX 2080 FE | RTX 2080 | RTX 2070 FE | RTX 2070 |
|---|---|---|---|---|---|---|
| GPU | TU102 | TU102 | TU104 | TU104 | TU106 | TU106 |
| Shader cores | 4352 | 4352 | 2944 | 2944 | 2304 | 2304 |
| Base frequency | 1350 MHz | 1350 MHz | 1515 MHz | 1515 MHz | 1410 MHz | 1410 MHz |
| Boost frequency | 1635 MHz | 1545 MHz | 1800 MHz | 1710 MHz | 1710 MHz | 1620 MHz |
| Memory | 11GB GDDR6 | 11GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 |
| Memory data rate | 14 Gbps | 14 Gbps | 14 Gbps | 14 Gbps | 14 Gbps | 14 Gbps |
| Memory bus | 352-bit | 352-bit | 256-bit | 256-bit | 256-bit | 256-bit |
| Memory bandwidth | 616 GB/s | 616 GB/s | 448 GB/s | 448 GB/s | 448 GB/s | 448 GB/s |
| TDP | 260W | 250W | 225W | 215W | 185W | 175W |
| Power connector | 2x 8-pin | 2x 8-pin | 8+6-pin | 8+6-pin | 8-pin | 8-pin |
| NVLink | Yes | Yes | Yes | Yes | - | - |
| Performance (RTX-Ops) | 78T RTX-Ops | | 60T RTX-Ops | | 45T RTX-Ops | |
| Performance (RT) | 10 Gigarays/s | | 8 Gigarays/s | | 6 Gigarays/s | |
| Max GPU temperature (°C) | 89 | | 88 | | 89 | |
| Price | $ 1199 | $ 999 | $ 799 | $ 699 | $ 599 | $ 499 |

** table with specs has been updated with launch figures
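Two quick sanity checks on the launch figures in the table. Peak FP32 throughput follows from shader cores × 2 FLOPs (one fused multiply-add) × boost clock, and NVIDIA's Gigarays/s figure can be spread over the pixels of a frame to get a rough ray budget. Both are idealized peak numbers under those stated assumptions, real games never hit them, and the function names are our own.

```python
# Launch figures from the table above: (shader cores, boost clock in MHz).
FE_CARDS = {
    "RTX 2080 Ti FE": (4352, 1635),
    "RTX 2080 FE": (2944, 1800),
    "RTX 2070 FE": (2304, 1710),
}


def peak_fp32_tflops(shader_cores: int, boost_mhz: float) -> float:
    """Idealized peak: one FMA (2 FLOPs) per shader core per clock."""
    return shader_cores * 2 * boost_mhz * 1e6 / 1e12


def rays_per_pixel(gigarays_per_s: float, width: int, height: int, fps: int) -> float:
    """Spread the quoted ray rate evenly over every pixel of every frame."""
    return gigarays_per_s * 1e9 / (width * height * fps)


for name, (cores, boost) in FE_CARDS.items():
    print(f"{name}: ~{peak_fp32_tflops(cores, boost):.1f} TFLOPS")

# RTX 2080 Ti FE (10 Gigarays/s) at 1080p60 and 4K60.
print(f"1080p60: ~{rays_per_pixel(10, 1920, 1080, 60):.0f} rays/pixel/frame")
print(f"2160p60: ~{rays_per_pixel(10, 3840, 2160, 60):.0f} rays/pixel/frame")
```

The Founders Edition RTX 2080 Ti lands at about 14.2 TFLOPS. Its 10 Gigarays/s works out to roughly 80 rays per pixel per frame at 1920×1080 and 60 fps, or about 20 at 3840×2160, before any shading work is accounted for.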

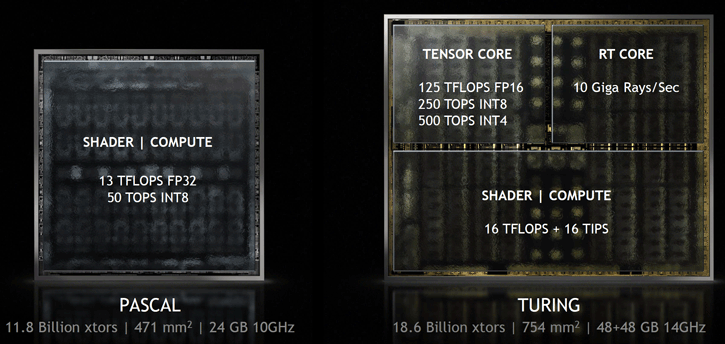

Turing (allow me to call the GPU TU102) is massive: it packs 18.6 billion transistors onto a 754 mm² die. In comparison, Pascal (GP102) had close to 12 billion transistors on a 471 mm² die. Gamers will immediately look at the shader processors. The Quadro RTX 8000 has 4608 of them enabled, and since everything with bits comes in multiples of eight, the GPU die photos suggest the following: Turing has 72 SMs (streaming multiprocessors), each holding 64 cores, for 72 × 64 = 4608 shader processors. We have yet to learn which blocks are used for what purpose. A fully enabled GPU has 576 Tensor cores (but we're not sure whether these are enabled on the consumer gaming GPUs), 36 geometry units, and 96 ROP units. We expect this GPU to be fabbed on an optimized 12nm TSMC FinFET+ node; the frequencies are unannounced at this time.
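The SM math above can be turned around to see how the shader counts in the GeForce table map to SMs. This sketch assumes, as the full-chip figures imply (4608 / 72 = 64 shader cores and 576 / 72 = 8 Tensor cores per SM), that every Turing SM has the same layout and that cores are enabled or disabled in whole SMs. That assumption is ours, not a confirmed NVIDIA spec for the cut-down parts.

```python
from typing import Tuple

FULL_SMS = 72                     # TU102 SM count from the article
CORES_PER_SM = 4608 // FULL_SMS   # 64 shader cores per SM
TENSOR_PER_SM = 576 // FULL_SMS   # 8 Tensor cores per SM


def sm_config(shader_cores: int) -> Tuple[int, int]:
    """Return (active SMs, Tensor cores), assuming whole SMs are enabled or disabled."""
    if shader_cores % CORES_PER_SM:
        raise ValueError(f"{shader_cores} is not a whole number of {CORES_PER_SM}-core SMs")
    sms = shader_cores // CORES_PER_SM
    return sms, sms * TENSOR_PER_SM


for name, cores in [("Quadro RTX 8000", 4608), ("RTX 2080 Ti", 4352),
                    ("RTX 2080", 2944), ("RTX 2070", 2304)]:
    sms, tensor = sm_config(cores)
    print(f"{name}: {sms} SMs, {tensor} Tensor cores")
```

Under that assumption, 4352 shaders corresponds to 68 SMs with 544 Tensor cores, 2944 to 46 SMs with 368 Tensor cores, and 2304 to 36 SMs with 288 Tensor cores.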

Graphics memory - GDDR6

Another difference between Volta and Turing is, of course, graphics memory. HBM2 is a bust for consumer products; at least it seems and feels that way. The graphics industry is clearly favoring the new GDDR6 at this time: it's easier and cheaper to fab and add, and can even exceed HBM2 in performance. Turing will be paired with 14 Gbps GDDR6. The Quadro cards have capacities in multiples of eight (16/24/48 GB), ergo we expect the GeForce RTX 2080 to get 8 GB of graphics memory. It could get 16 GB, but that just doesn't make much sense at this time in terms of cost versus benefit. By the way, DRAM manufacturers expect to reach 18 Gbps and faster with GDDR6.
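GDDR6 bandwidth is simple arithmetic: bus width in bytes times the per-pin data rate. A minimal sketch, using the 14 Gbps launch speed and, hypothetically, the 18 Gbps that DRAM makers are aiming for:

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits / 8 converts the bus width to bytes per transfer;
    data_rate_gbps is the per-pin data rate in Gbit/s.
    """
    return bus_width_bits / 8 * data_rate_gbps


for bus in (256, 352, 384):
    print(f"{bus}-bit @ 14 Gbps: {peak_bandwidth_gb_s(bus, 14):.0f} GB/s")

# Hypothetical: a 256-bit card with future 18 Gbps GDDR6.
print(f"256-bit @ 18 Gbps: {peak_bandwidth_gb_s(256, 18):.0f} GB/s")
```

That gives 448, 616 and 672 GB/s for the 256-, 352- and 384-bit buses discussed in this article, and 576 GB/s for a 256-bit card at 18 Gbps.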

NVLINK

So is SLI back? Well, SLI has never been gone, but it has been limited to two cards over the years, and driver optimization has not been optimal. If you have seen the leaked PCB shots, you will have noticed a new sort of SLI finger; that is actually an NVLINK interface, and it's hot stuff. In theory, you can combine two or more graphics cards, and the brilliance is that the GPUs can share and use each other's memory, i.e. direct memory access between cards. To what extent this will work out for gaming, we have yet to learn. Also, we cannot wait to hear what the NVLINK bridges are going to cost, of course. But if you look at the block diagram above, that's a 100 GB/sec interface, which is huge in terms of interconnects. We can't compare it 1:1 like that, but SLI and SLI HB passed roughly 1 and 2 GB/sec, respectively. A single PCIe Gen 3 lane offers roughly 1 GB/s, so an x16 slot gives about 16 GB/sec, or 32 GB/sec full duplex. So that one NVLINK between two cards is easily three times faster than a bi-directional PCIe Gen 3 x16 slot.
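The PCIe numbers above are rounded. PCIe Gen 3 signals at 8 GT/s per lane with 128b/130b line encoding, so the usable figures can be computed exactly; the 100 GB/sec NVLINK figure is simply taken from the text, and the function name is our own. A minimal sketch:

```python
PCIE3_TRANSFERS_PER_S = 8e9   # 8 GT/s per lane
PCIE3_ENCODING = 128 / 130    # 128b/130b line-code efficiency
NVLINK_GB_S = 100.0           # NVLINK figure quoted in the article


def pcie3_gb_s(lanes: int, full_duplex: bool = False) -> float:
    """Usable PCIe Gen 3 bandwidth in GB/s (per direction unless full_duplex)."""
    per_lane = PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING / 8 / 1e9  # bits -> bytes -> GB
    total = per_lane * lanes
    return total * 2 if full_duplex else total


x16_duplex = pcie3_gb_s(16, full_duplex=True)
print(f"x1: {pcie3_gb_s(1):.2f} GB/s, x16: {pcie3_gb_s(16):.2f} GB/s, "
      f"x16 duplex: {x16_duplex:.2f} GB/s")
print(f"NVLINK vs. x16 duplex: {NVLINK_GB_S / x16_duplex:.1f}x")
```

A single lane comes to about 0.98 GB/s and an x16 slot to about 15.75 GB/s per direction (31.5 GB/s both ways), so 100 GB/sec is indeed a little over three times a full-duplex x16 slot.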

NVIDIA will support 2-way NVLINK.

GeForce RTX 2080

A bit of speculation, then. Judging from the specs, the GDDR6 memory will be tied to a 256-bit bus and, depending on the clock frequency, we seem to be looking at 448 GB/sec. This graphics card series will not have the full shader count that TU102 offers; the card will have 2944 shader processors active. Clock frequencies remain an enigma (pardon the pun), but the table above gives a good indication: we assume base/boost clocks in the 1500~1800 MHz range at this time.

GeForce RTX 2080 Ti

The GeForce RTX 2080 Ti with TU102 will hopefully get close to the full shader count and memory bus. The shader count will likely end up below 4608, as NVIDIA historically reserves the full count for a Titan; expect 4352 active shader processors. A GeForce RTX 2080 Ti has been rumored, and some leaked photos today have shown it to be a product with 11 GB of GDDR6, which raises the question of the memory bus and pushes memory bandwidth even further. In fact, if you look at the block diagram above, you'll notice a 384-bit interface with 14 Gbps memory listed at 672 GB/sec (!). That obviously is the full chip, enabled and loaded up. With 11 GB we expect a 352-bit bus, as each memory chip is tied to a 32-bit memory controller: 11 × 32 = 352-bit. We can again only speculate on clock frequencies for the board partners, but here we're thinking 1350~1600 MHz sounds plausible for such a big and complex GPU, so we'll stay on the safe side.
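The 11 × 32 reasoning generalizes: both the bus width and the capacity follow from the number of memory chips. This sketch assumes 8 Gb (1 GB) GDDR6 chips, which is what 11 GB spread over 11 chips implies; the 16 Gb option is shown purely as a hypothetical comparison, and the function name is our own.

```python
from typing import Tuple

CHANNEL_WIDTH_BITS = 32  # one 32-bit memory controller channel per GDDR6 chip


def memory_layout(chips: int, gbit_per_chip: int = 8) -> Tuple[int, float]:
    """Return (bus width in bits, capacity in GB) for a given number of chips."""
    bus_width_bits = chips * CHANNEL_WIDTH_BITS
    capacity_gb = chips * gbit_per_chip / 8  # 8 Gbit = 1 GB
    return bus_width_bits, capacity_gb


for chips in (8, 11, 12):
    bus, cap = memory_layout(chips)
    print(f"{chips} chips: {bus}-bit bus, {cap:.0f} GB")

# Hypothetical: eight 16 Gb chips on a 256-bit bus.
print(memory_layout(8, gbit_per_chip=16))
```

Eleven chips give the expected 352-bit bus and 11 GB, twelve give the full 384-bit interface, and a 256-bit card would need 16 Gb chips to reach 16 GB.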

The teaser video this week had riddles pointing towards the RTX 2080 as well as the launch venue and more. Riddles are ciphers, and a cipher is an enigma, and who is the mac daddy of Enigma? Alan Turing. You probably did not think about it like that, did you? Yes, we've come full circle here.

Exciting times

We’re going to see a lot of new stuff in the weeks, months and years to come: anything from a GeForce RTX 2050, 2060, 2070, 2080 and 2080 Ti to an RTX Titan and so on. It will be very interesting to see who releases what, as the initial release will, of course, likely be Founders Edition only, with new AIB/AIC board partner products, often with improved cooling, likely following about a month later.

I hope you enjoyed this little write-up, more next week, of course. Have a good weekend!
