We'll probably go back and forth a bit on the topic of Ampere until NVIDIA lifts all the mystery. The expectation was that NVIDIA's upcoming GPUs would be fabbed at 7nm. However, that fabrication node is over-utilized, so NVIDIA could be reverting to 10nm baked by Samsung.

Once again it's Twitter user KittyCorgi (who has a bit of a questionable reputation for these things, as he never explains how he obtained the info) who claims to have information about Ampere GPUs. Remember, it's just some guy posting some stuff, unvalidated, from a Twitter account created in January. Anyhow, all cards would support ray tracing and would be fabricated on a different process than expected, 10nm. We do hope to see some announcements at an NVIDIA GDC live stream presentation from mister leather jacket himself, as yeah .. it's about time eh? We kind of expect an announcement on the GA102 GPU, let's call it GeForce RTX 3080 Ti for now.

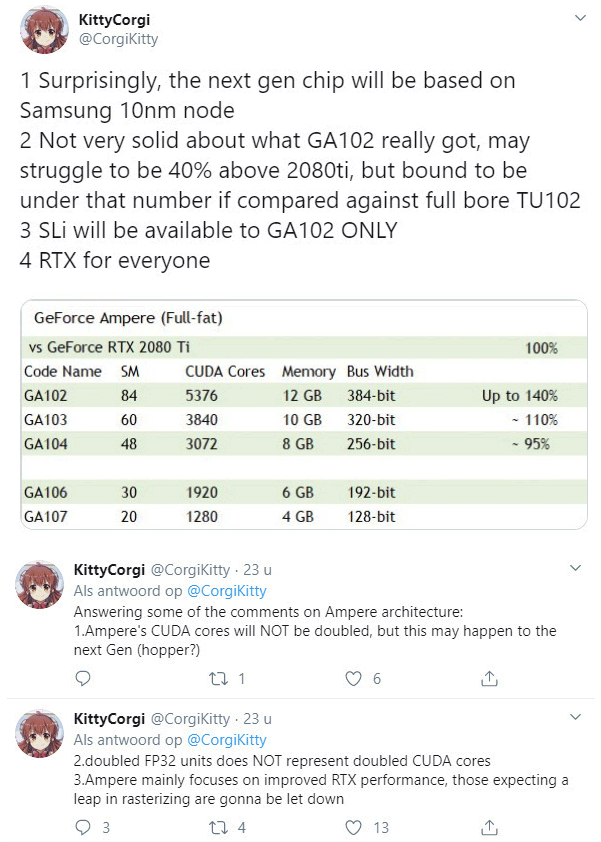

Rumored for the consumer products would be a GPU with 84 shader units resulting in 5376 shader cores (64 per unit), paired with 12 GB of graphics memory on a 384-bit bus. Based on that bus width we can infer the memory type: that's GDDR6. KittyCorgi also mentioned that the GA102 is the only multi-GPU compatible product, and that at best a 40% uplift in performance can be expected.
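For those who like to check the math on these rumored numbers, here is a quick sketch in Python. The shader unit count, core count and bus width come from the rumor; the 32-bit interface per GDDR6 chip is standard for that memory type. The 14 Gbps per-pin data rate is purely our assumption for illustration (nothing in the leak mentions memory speed), and the function names are made up for this example.

```python
def cores_per_unit(total_cores: int, units: int) -> int:
    """Shader cores per shader unit, assuming an even split."""
    if total_cores % units != 0:
        raise ValueError("core count is not a multiple of the unit count")
    return total_cores // units


def memory_chips(bus_width_bits: int, chip_width_bits: int = 32) -> int:
    """Number of memory chips needed to fill the bus (GDDR6 chips are 32-bit wide)."""
    return bus_width_bits // chip_width_bits


def bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bus width times per-pin rate, divided by 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


# Rumored figures from the leak
SHADER_UNITS = 84
SHADER_CORES = 5376
BUS_WIDTH_BITS = 384
MEMORY_GB = 12

# ASSUMPTION: 14 Gbps per pin, not part of the rumor
ASSUMED_GBPS_PER_PIN = 14

if __name__ == "__main__":
    chips = memory_chips(BUS_WIDTH_BITS)
    print("cores per shader unit:", cores_per_unit(SHADER_CORES, SHADER_UNITS))
    print("memory chips on the bus:", chips)
    print("capacity per chip (GB):", MEMORY_GB / chips)
    print("bandwidth at assumed rate (GB/s):", bandwidth_gb_s(BUS_WIDTH_BITS, ASSUMED_GBPS_PER_PIN))
```

So the numbers at least hang together: twelve 32-bit chips fill a 384-bit bus, which fits nicely with 12 GB at 1 GB per chip. The bandwidth figure moves one-to-one with whatever memory speed NVIDIA would actually ship, so treat that last line as a what-if.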

It is suggested the biggest improvement would be in ray tracing performance, as granted, that was the Achilles heel of the current RTX 2000 generation cards. As far as validity and credibility go, all this needs to be taken with a grain of salt and then some extra, with some huge disclaimers in mind, but yeah, here it is:

Rumor: NVIDIA GeForce Ampere to be fabbed at 10nm, all cards RTX?