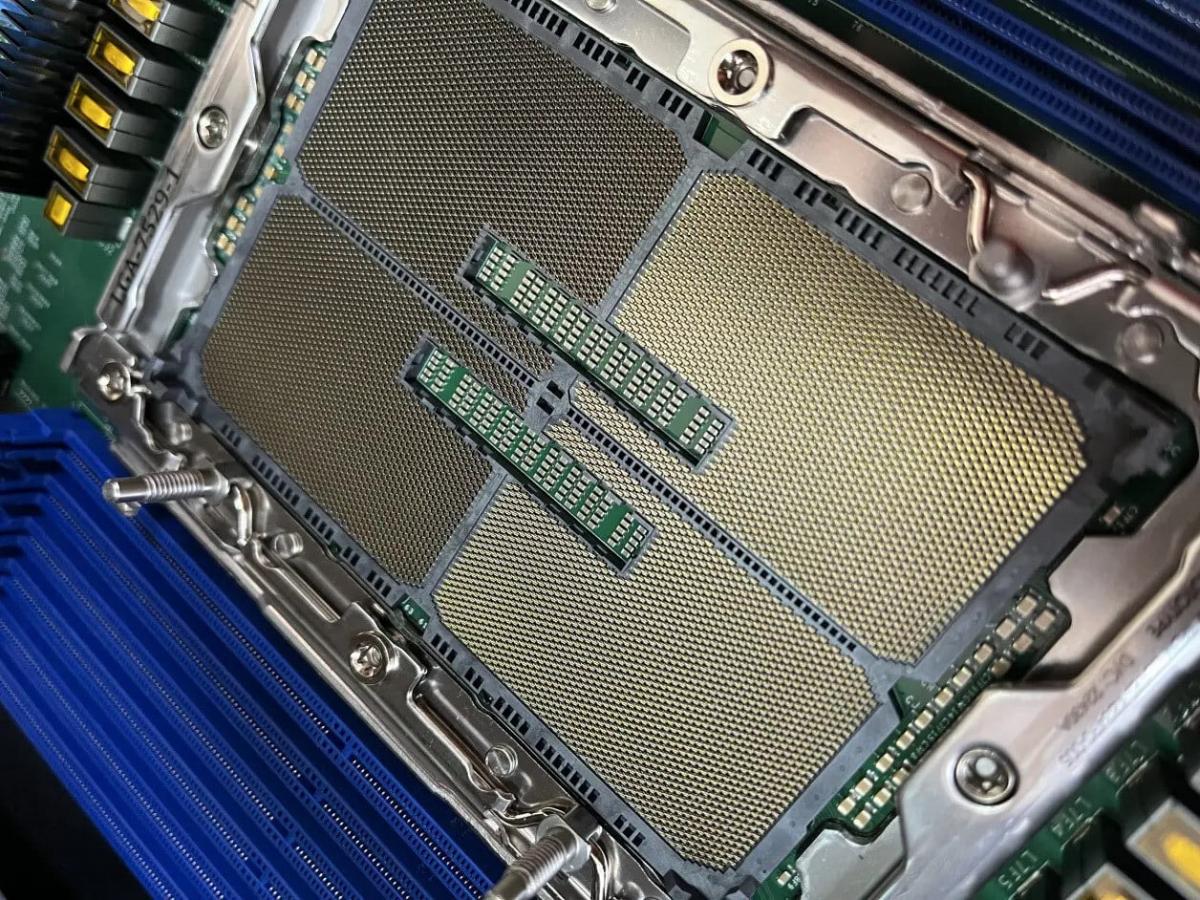

Images of the LGA 7529 socket recently appeared on Bilibili, a Chinese video-sharing website, confirming that the upcoming processors will be significantly larger than their predecessors.

Although LGA 4677 was already considered large at 61 x 82 mm, the new "Mountain Stream" platform grows to 66 x 92.5 mm, nearly 10 cm in length. The new platform is designed to work with Intel's Sierra Forest and Granite Rapids CPU lines and is scheduled for release in 2024.
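To put the two footprints in perspective, here is a minimal sketch that compares them using only the dimensions quoted above. The helper names `footprint_area` and `percent_increase` are illustrative, and the sockets are treated as plain rectangles, which is a simplification.

```python
# Dimensions in millimetres, as quoted in the article.
LGA_4677 = (61.0, 82.0)
LGA_7529 = (66.0, 92.5)


def footprint_area(width_mm: float, length_mm: float) -> float:
    """Area of a rectangular footprint in square millimetres."""
    return width_mm * length_mm


def percent_increase(old: float, new: float) -> float:
    """Relative growth from old to new, expressed in percent."""
    return (new - old) / old * 100.0


old_area = footprint_area(*LGA_4677)
new_area = footprint_area(*LGA_7529)

print(f"LGA 4677 footprint: {old_area:.0f} mm^2")
print(f"LGA 7529 footprint: {new_area:.0f} mm^2")
print(f"Area growth: {percent_increase(old_area, new_area):.1f}%")
print(f"Length growth: {percent_increase(LGA_4677[1], LGA_7529[1]):.1f}%")
```

Under these assumptions the new footprint works out to roughly 22% more area than LGA 4677, with most of the growth coming from the added length.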

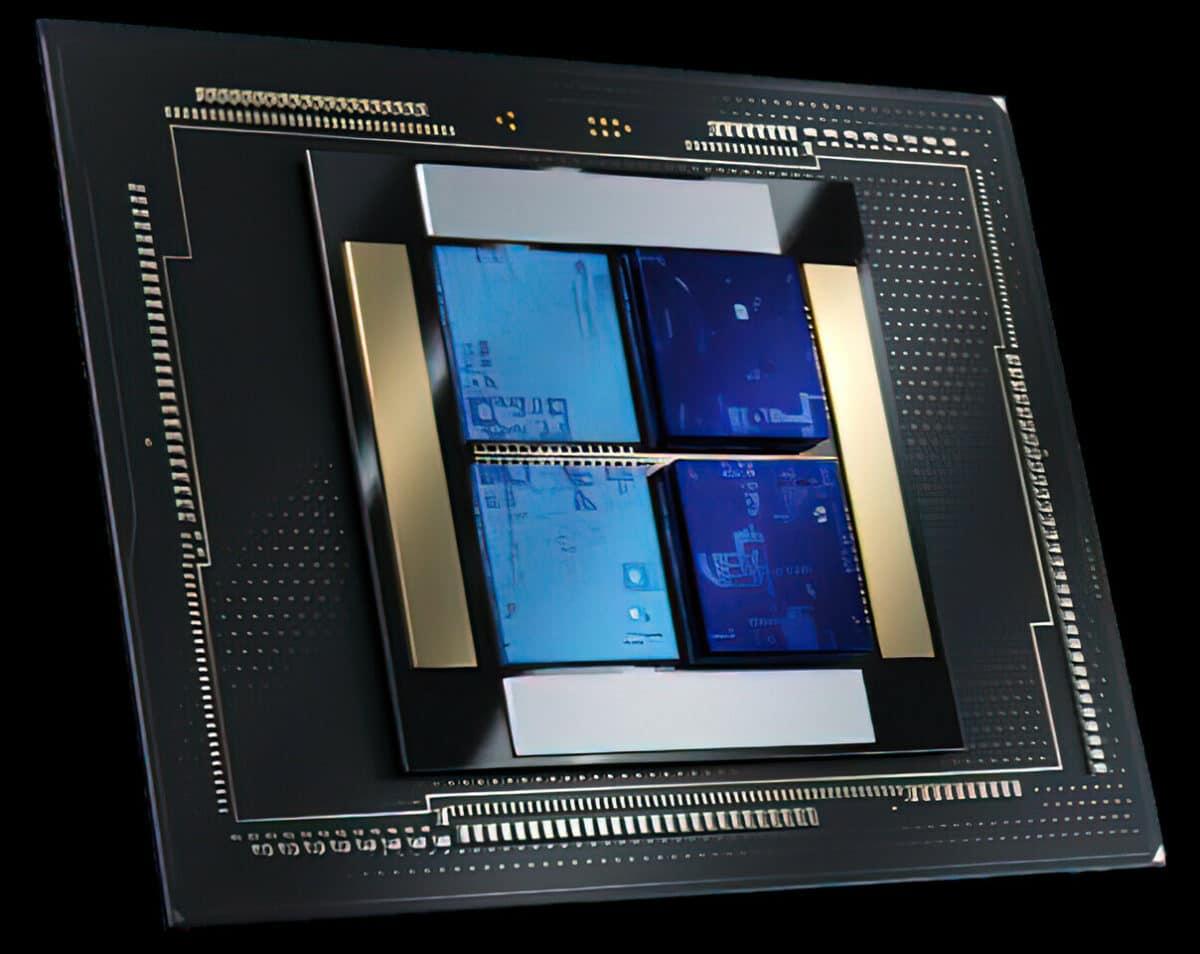

Industry analysts predict that Intel's fifth-generation Xeon microarchitectures, codenamed Avenue City, will revolutionize server and cloud computing. These processors will support dual-socket configurations and come in two distinct families. The P-core variants will feature 86 and 132 cores, while the E-core variants will range from 334 to 512 cores and include HBM variants. Recently leaked photos of the prototypes show an "ES" (engineering sample) inscription, indicating that the chips pictured were built for testing purposes.

Intel's commitment to delivering high-performance processors has driven the decision to abandon the LGA 1700 socket in favor of inline production, with Arrow Lake-S set to launch in 2023. As the 2024 launch of the fifth-generation Xeon processors approaches, Intel is expected to share more details about them.

Intel LGA 7529 Processors are Nearly 10cm in Length