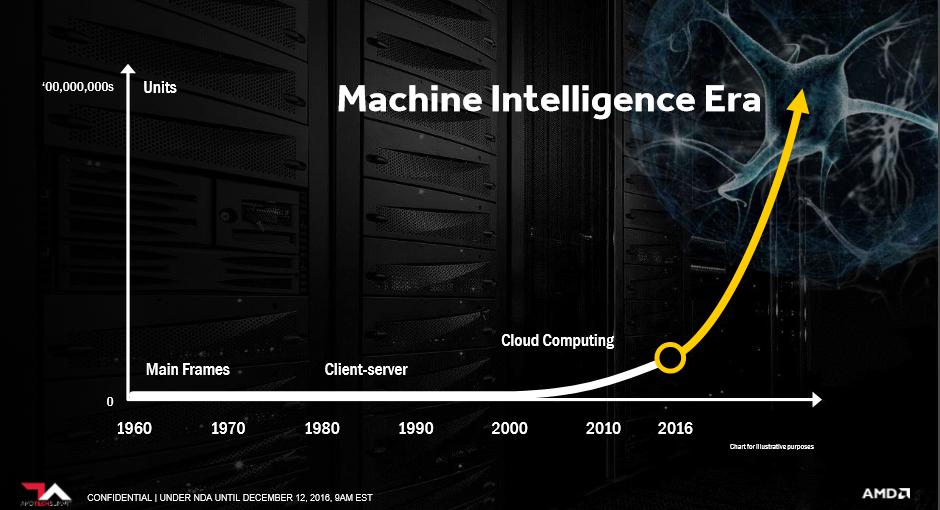

AMD today unveiled its strategy to accelerate the machine intelligence era in server computing. Among the products announced is the MI25, which appears to offer 25 TFLOPS of FP16 (half-precision) performance and is confirmed to use a VEGA GPU. The new Radeon Instinct accelerators will offer organizations powerful GPU-based solutions for deep learning inference and training.

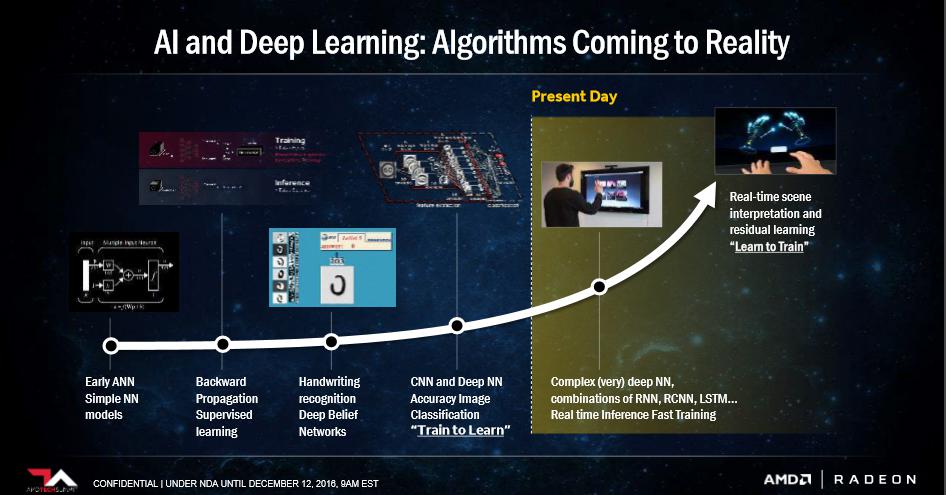

Under the Radeon Instinct name, AMD is releasing the MI6, MI8 and MI25 along with a set of (open) software applications. The number in each MI model name is roughly the listed 16-bit floating-point performance in TFLOPS. Machine learning is what these enterprise-level cards are aimed at, and it is the driving force behind this MI series release. For applications, think along the lines of artificial intelligence (AI). GPUs are in fact very suitable for AI and machine learning workloads, since they have been optimized to process a lot of data in parallel at very high speed.

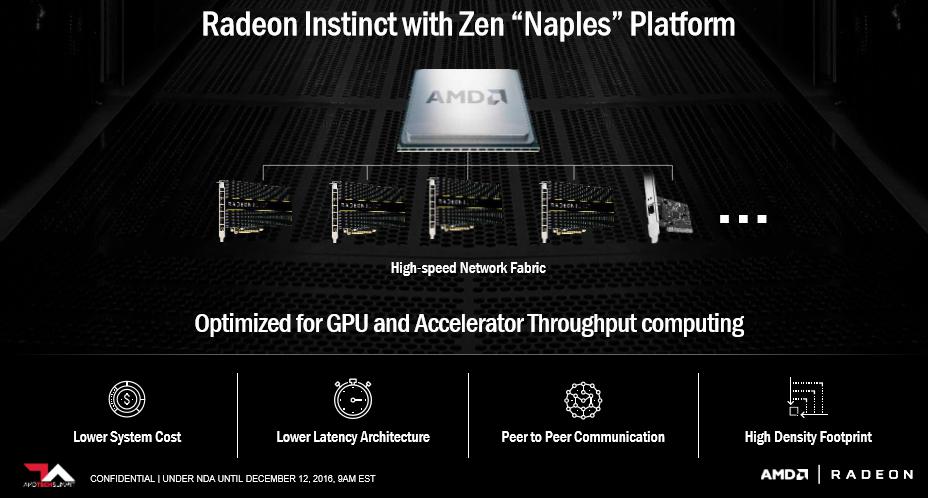

The new 'Instinct' products are aimed at market segments such as self-driving cars, complex drug development, fighting diseases, weather prediction models, robotics and even financial applications. Machine learning, including deep learning, is a set of techniques that make computers more intelligent by analyzing huge amounts of data in order to make predictions and choices. Instinct is a hardware and software model based on the Radeon Open Compute Platform, or ROCm, previously called the Boltzmann Initiative. ROCm offers the foundation for running HPC applications on MI accelerators powered by AMD GPUs. ROCm can accelerate common deep-learning frameworks like Caffe, Torch 7, and TensorFlow on AMD hardware. All that software runs atop a new series of Radeon Instinct compute accelerators.

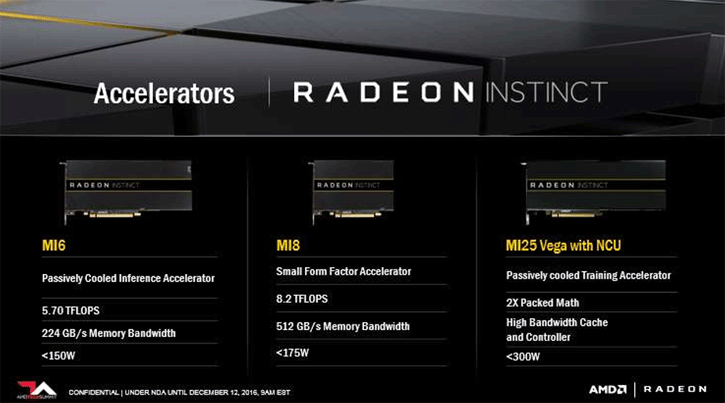

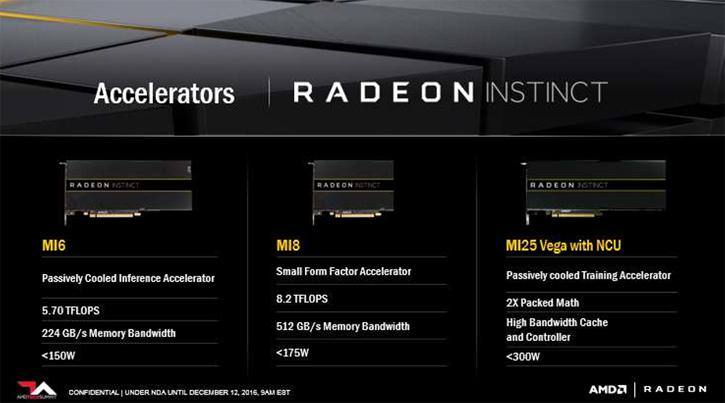

AMD is to release three products:

- Radeon Instinct MI6 - with a Polaris-based GPU (as in the Radeon RX 480) @ 5.7 TFLOPS

- Radeon Instinct MI8 - with the Fiji chip (as in the R9 Nano / Fury) @ 8.2 TFLOPS

- Radeon Instinct MI25 - with a Vega GPU, listed at 25 TFLOPS

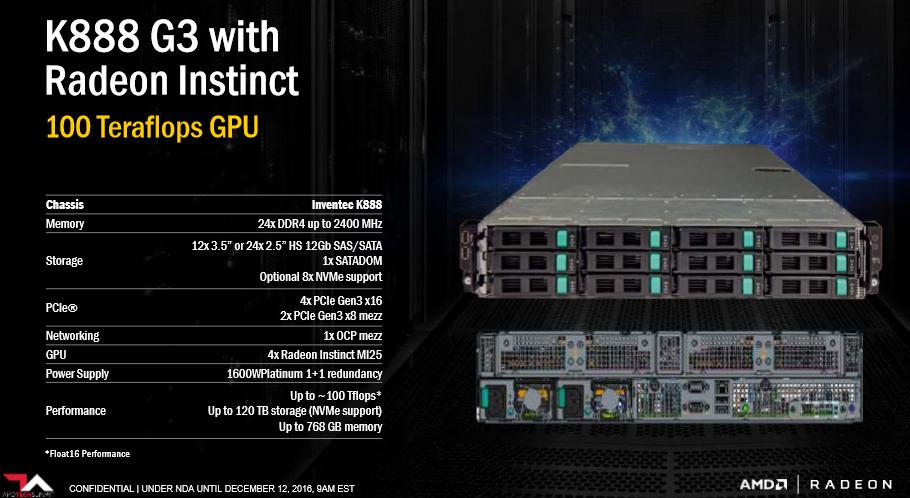

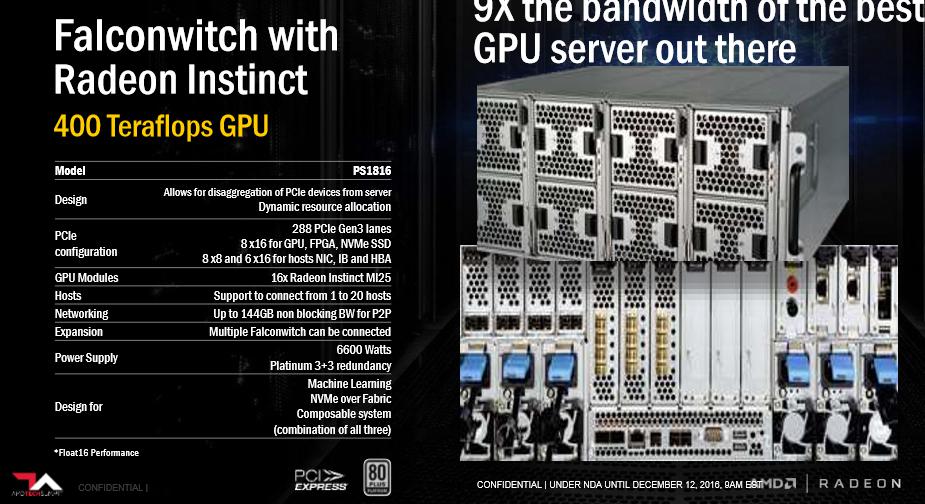

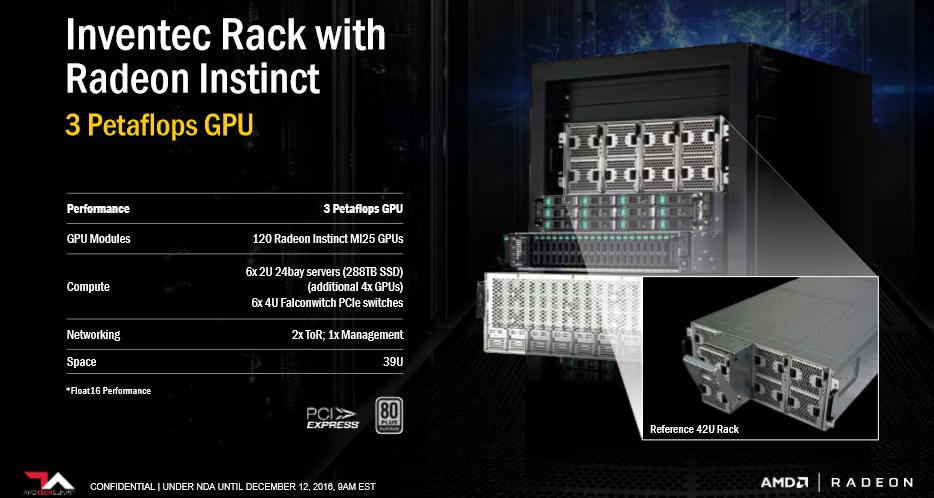

Before we dive into the press release, a few things are interesting regarding the VEGA GPU used in this platform. The MI25 is the model that will be fitted with VEGA. It is listed at <300 Watt, but that is board power. Also, AMD has not shared any performance or spec slides on VEGA just yet. However, if you dig deep into the slides you'll notice two server clusters:

- The K888 G3 with Radeon Instinct offers 4x Radeon Instinct MI25 @ a listed 100 Teraflops

- The Falcon Radeon Instinct cluster with 16x Radeon Instinct MI25 @ 400 Teraflops

Simple math gets us to the mentioned 25 TFLOPS per GPU. An earlier rumor stated this: "It's mentioned that this GPU has 64 Compute Units, multiply that with 64 shader processors per cluster and you'll get to 4096 shader processors. It will offer up to 24 TFLOP/s 16-bit (half-precision) floating point performance."
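The simple math above can be sketched in a few lines of Python. The cluster figures come from the slides and the shader count from the rumor; the function names are purely illustrative. The clock estimate rests on two assumptions that AMD has not confirmed here: each shader processor performs one fused multiply-add (2 FLOPs) per clock, and FP16 runs at twice the FP32 rate.

```python
def tflops_per_gpu(cluster_tflops: float, gpu_count: int) -> float:
    """Divide a cluster's listed FP16 throughput evenly across its GPUs."""
    return cluster_tflops / gpu_count


# Figures as listed on the slides.
print(tflops_per_gpu(100, 4))    # K888 G3
print(tflops_per_gpu(400, 16))   # Falcon cluster

# Rumored configuration: 64 compute units x 64 shader processors each.
shaders = 64 * 64

# Assumptions (not confirmed by AMD): 2 FLOPs per shader per clock (FMA),
# and FP16 throughput at twice the FP32 rate.
FLOPS_PER_CLOCK_FP32 = 2
FP16_RATE = 2


def implied_clock_ghz(fp16_tflops: float, shader_count: int) -> float:
    """Clock speed in GHz needed to reach a given peak FP16 throughput."""
    flops_per_clock = shader_count * FLOPS_PER_CLOCK_FP32 * FP16_RATE
    return fp16_tflops * 1e12 / flops_per_clock / 1e9


print(shaders)
print(round(implied_clock_ghz(24, shaders), 2))  # rumored 24 TFLOPS
print(round(implied_clock_ghz(25, shaders), 2))  # listed 25 TFLOPS
```

Under those assumptions, both clusters work out to 25 TFLOPS per GPU, and the rumored 24 TFLOPS and the listed 25 TFLOPS would correspond to clocks of roughly 1.46 GHz and 1.53 GHz respectively. Treat these as back-of-the-envelope numbers, not specifications.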

Why a 16-bit TFLOP mention in the slides? Simple: AI workloads often use 16-bit data, so that is the performance bracket they work in. The rumor, then, is very close to what the MI25 is offering. If FP16 runs at twice the FP32 rate, VEGA for consumers could be at 12.5 TFLOPS of single-precision (FP32) game performance. A reference-clocked GTX 1080 offers 9 TFLOPS and a GTX Titan X (Pascal) is at roughly 11 TFLOPS. So yes, this all does sound good. Obviously VEGA 10 will be fitted with HBM2 memory as well as a new IO gateway and cache dubbed the High Bandwidth Cache and controller.
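As a rough sketch of that comparison: the snippet below halves the listed FP16 figure to estimate FP32 throughput and compares it with the two Nvidia numbers quoted above. The 2:1 FP16-to-FP32 ratio is an assumption, and the names are illustrative only.

```python
# FP16 figure from the slides; the 2:1 FP16-to-FP32 ratio is an assumption.
MI25_FP16_TFLOPS = 25.0
FP16_TO_FP32_RATIO = 2.0


def estimate_fp32(fp16_tflops: float, ratio: float = FP16_TO_FP32_RATIO) -> float:
    """Estimate peak FP32 throughput from a peak FP16 figure."""
    return fp16_tflops / ratio


vega_fp32 = estimate_fp32(MI25_FP16_TFLOPS)

# Approximate peak FP32 figures as quoted in the text.
competitors = {
    "GTX 1080 (reference)": 9.0,
    "Titan X (Pascal)": 11.0,
}

print(f"Estimated Vega FP32: {vega_fp32} TFLOPS")
for name, tflops in competitors.items():
    print(f"vs {name}: {vega_fp32 / tflops:.2f}x")
```

That puts the estimate at about 1.39x the GTX 1080 and 1.14x the Titan X (Pascal) on paper. Peak TFLOPS is only a theoretical ceiling, so it says little about actual game performance.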

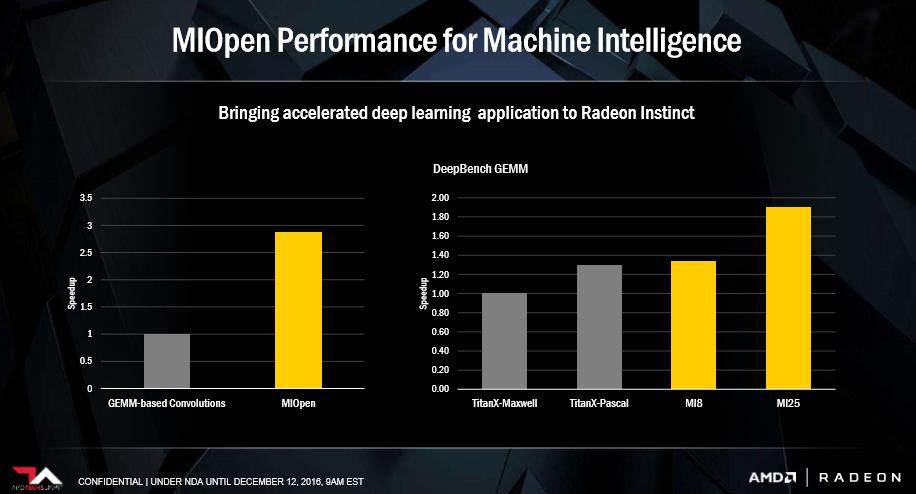

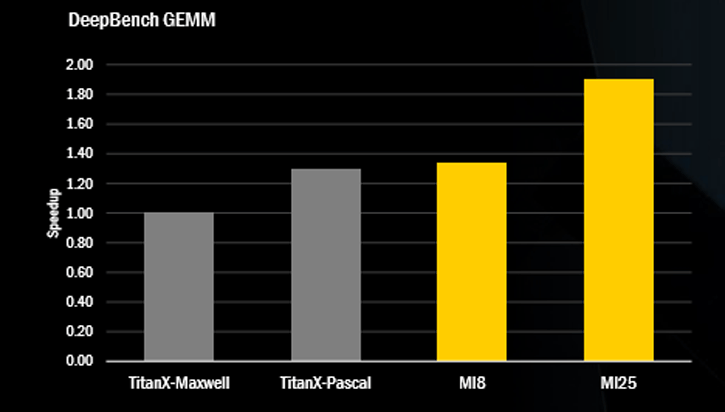

In the rather interesting slide above you can see a compute application crunching away; AMD certainly is setting expectations high. The internal AMD slide shows VEGA 10 performance relative to the competition, the Nvidia Titan X in both its Maxwell and Pascal versions. To be fair, it also shows the MI8 (Nano/Fury) at Titan X Pascal performance levels.

Now back to the press release:

Along with the new hardware offerings, AMD announced MIOpen, a free, open-source library for GPU accelerators intended to enable high-performance machine intelligence implementations, and new, optimized deep learning frameworks on AMD’s ROCm software to build the foundation of the next evolution of machine intelligence workloads.

Inexpensive high-capacity storage, an abundance of sensor driven data, and the exponential growth of user-generated content are driving exabytes of data globally. Recent advances in machine intelligence algorithms mapped to high-performance GPUs are enabling orders of magnitude acceleration of the processing and understanding of that data, producing insights in near real time. Radeon Instinct is a blueprint for an open software ecosystem for machine intelligence, helping to speed inference insights and algorithm training.

“Radeon Instinct is set to dramatically advance the pace of machine intelligence through an approach built on high-performance GPU accelerators, and free, open-source software in MIOpen and ROCm,” said AMD President and CEO, Dr. Lisa Su. “With the combination of our high-performance compute and graphics capabilities and the strength of our multi-generational roadmap, we are the only company with the GPU and x86 silicon expertise to address the broad needs of the datacenter and help advance the proliferation of machine intelligence.”

At the AMD Technology Summit held last week, customers and partners from 1026 Labs, Inventec, SuperMicro, University of Toronto’s CHIME radio telescope project and Xilinx praised the launch of Radeon Instinct, discussed how they’re making use of AMD’s machine intelligence and deep learning technologies today, and how they can benefit from Radeon Instinct. Radeon Instinct accelerators feature passive cooling, AMD MultiGPU (MxGPU) hardware virtualization technology conforming with the SR-IOV (Single Root I/O Virtualization) industry standard, and 64-bit PCIe addressing with Large Base Address Register (BAR) support for multi-GPU peer-to-peer support.

Radeon Instinct accelerators are designed to address a wide range of machine intelligence applications:

Radeon Instinct MI6

The Radeon Instinct MI6 accelerator, based on the acclaimed Polaris GPU architecture, will be a passively cooled inference accelerator optimized for jobs/second/Joule with 5.7 TFLOPS of peak FP16 performance at 150W board power and 16GB of GPU memory.

Radeon Instinct MI8

The Radeon Instinct MI8 accelerator, harnessing the high-performance, energy-efficient “Fiji” Nano GPU, will be a small form factor HPC and inference accelerator with 8.2 TFLOPS of peak FP16 performance at less than 175W board power and 4GB of High-Bandwidth Memory (HBM).

Radeon Instinct MI25

The Radeon Instinct MI25 accelerator will use AMD’s next-generation high-performance Vega GPU architecture and is designed for deep learning training, optimized for time-to-solution.
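To put the quoted figures side by side, here is a minimal sketch that computes peak FP16 throughput per watt of board power. The MI6 and MI8 numbers come from the descriptions above; the MI25 numbers (25 TFLOPS, under 300 W) are the ones listed on the slides, not in the press release. Since the MI8 and MI25 power figures are stated as "less than", their results are lower bounds.

```python
# (peak FP16 TFLOPS, board power in watts).
# MI8 and MI25 power values are upper bounds, so the computed efficiency
# for those two cards is a lower bound.
accelerators = {
    "MI6": (5.7, 150),
    "MI8": (8.2, 175),
    "MI25": (25.0, 300),
}


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Peak GFLOPS delivered per watt of board power."""
    return tflops * 1000 / watts


for name, (tflops, watts) in accelerators.items():
    print(f"{name}: {gflops_per_watt(tflops, watts):.1f} GFLOPS/W")
```

On these paper numbers the MI6 lands at about 38 GFLOPS/W, the MI8 at about 47 GFLOPS/W or better, and the MI25 at about 83 GFLOPS/W or better.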

A variety of open source solutions are fueling Radeon Instinct hardware:

- MIOpen GPU-accelerated library: To help solve high-performance machine intelligence implementations, the free, open-source MIOpen GPU-accelerated library is planned to be available in Q1 2017 to provide GPU-tuned implementations for standard routines such as convolution, pooling, activation functions, normalization and tensor format.

- ROCm deep learning frameworks: The ROCm platform is also now optimized for acceleration of popular deep learning frameworks, including Caffe, Torch 7, and TensorFlow, allowing programmers to focus on training neural networks rather than low-level performance tuning through ROCm’s rich integrations. ROCm is intended to serve as the foundation of the next evolution of machine intelligence problem sets, with domain-specific compilers for linear algebra and tensors and an open compiler and language runtime.

AMD is also investing in developing interconnect technologies that go beyond today’s PCIe Gen3 standards to further performance for tomorrow’s machine intelligence applications. AMD is collaborating on a number of open high-performance I/O standards that support broad ecosystem server CPU architectures including x86, OpenPOWER, and ARM AArch64. AMD is a founding member of CCIX, Gen-Z and OpenCAPI, working towards a future 25 Gbit/s phi-enabled accelerator and rack-level interconnects for Radeon Instinct. Radeon Instinct products are expected to ship in 1H 2017.

For more information, visit Radeon.com/Instinct.