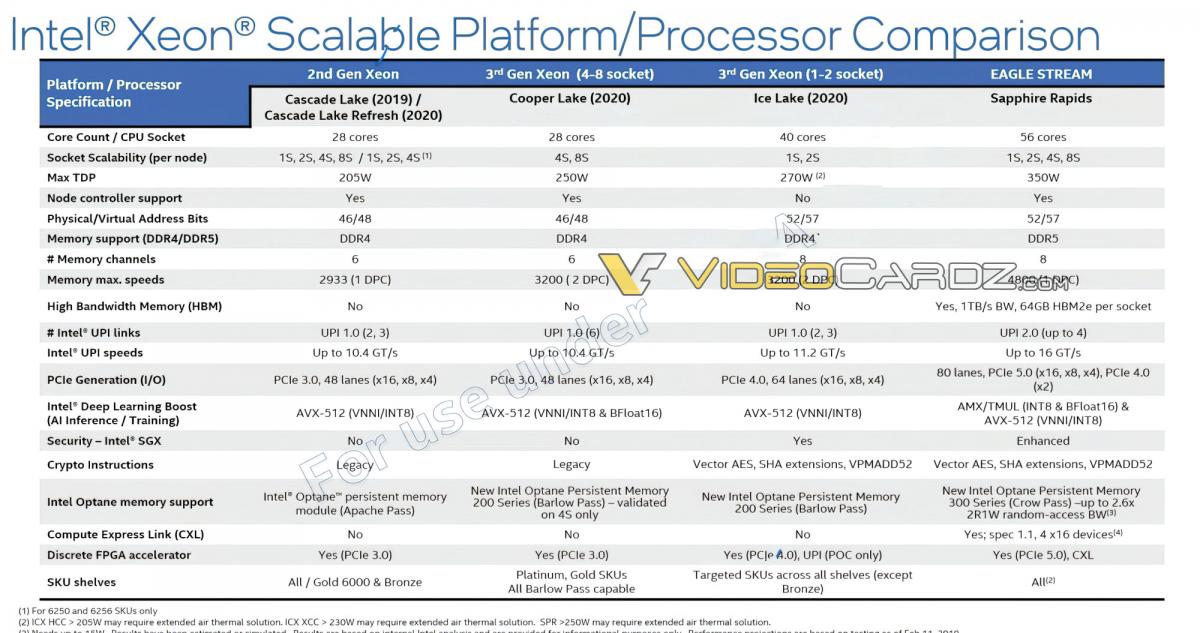

Intel is releasing more details about its next generation of Xeon processors. Among other things, the company has now revealed how large the optional HBM cache will be. It was already known that Intel would pair up to four HBM stacks with the four tiles of a Sapphire Rapids CPU, but their capacity had not been disclosed.

At a press conference held today at SC2021, Intel announced that Sapphire Rapids will include four stacks of HBM2e memory, each with a capacity of 16 GB. This means that these Xeon Scalable processors will support up to 64 GB of HBM2e in total.

It is important to note that when Intel says "up to 64 GB," it also implies that some Xeon CPUs will carry less. HBM2e stacks are available in capacities of 8 GB and 16 GB from manufacturers such as Micron and SK Hynix. Four 8 GB stacks would therefore yield 32 GB, so Xeon processors with 32 GB of HBM2e are plausible; the same capacity would also be possible if Intel does not always populate all four stacks and fits two 16 GB stacks instead.
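The capacity reasoning above can be sketched as a short calculation. This is a hypothetical illustration, not Intel's SKU list: it assumes that every stack on a given CPU has the same size (8 or 16 GB) and that a CPU carries between one and four stacks. The function names are made up for this example.

```python
from itertools import product

# Per-stack HBM2e capacities mentioned in the article, in GB.
STACK_SIZES_GB = (8, 16)
# Assumed stack counts: one up to the four stacks Intel gave as the maximum.
STACK_COUNTS = range(1, 5)


def possible_hbm_capacities(stack_sizes=STACK_SIZES_GB, stack_counts=STACK_COUNTS):
    """Return the sorted set of total capacities (GB), assuming uniform stack sizes."""
    totals = {size * count for size, count in product(stack_sizes, stack_counts)}
    return sorted(totals)


def configs_for(total_gb, stack_sizes=STACK_SIZES_GB, stack_counts=STACK_COUNTS):
    """List the (stack_count, stack_size_gb) pairs that reach a given total."""
    return [
        (count, size)
        for size, count in product(stack_sizes, stack_counts)
        if size * count == total_gb
    ]


if __name__ == "__main__":
    print(possible_hbm_capacities())
    print(configs_for(32))
    print(configs_for(64))
```

Under these assumptions, 32 GB can be reached either with four 8 GB stacks or with two 16 GB stacks, while the 64 GB maximum requires four 16 GB stacks.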

Intel has also confirmed the L1 and L2 cache configurations of Ponte Vecchio, its high-performance computing GPU. The data center accelerator will be paired with Xeon Sapphire Rapids processors in supercomputers. According to the company, Ponte Vecchio will have 4 MB of L1 cache and 144 MB of L2 cache per tile, with a total of 408 MB of L2 cache across the GPU. The GPU is built on several fabrication nodes: Intel 7 for the base tile, TSMC N7 for the Xe-Link tile, and TSMC N5 for the compute tile.

Up to 64GB of HBM2e memory is available for Intel Sapphire Rapids Xeon CPUs.