AMD's professional Instinct MI200 data center accelerator won't be short on memory: it appears to be getting 128GB of HBM2e, four times as much as the current MI100. It is also AMD's first GPU built from multiple chiplets, much like its Ryzen processors.

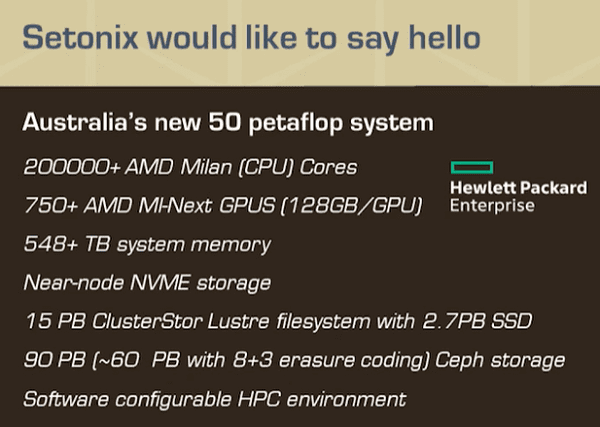

Australia's Pawsey Supercomputing Centre is working on a new supercomputer called Setonix, which will use 'MI-next' GPUs with 128GB of memory, according to HPCwire. AMD itself has not released any further details, as it has yet to officially unveil the Instinct MI200.

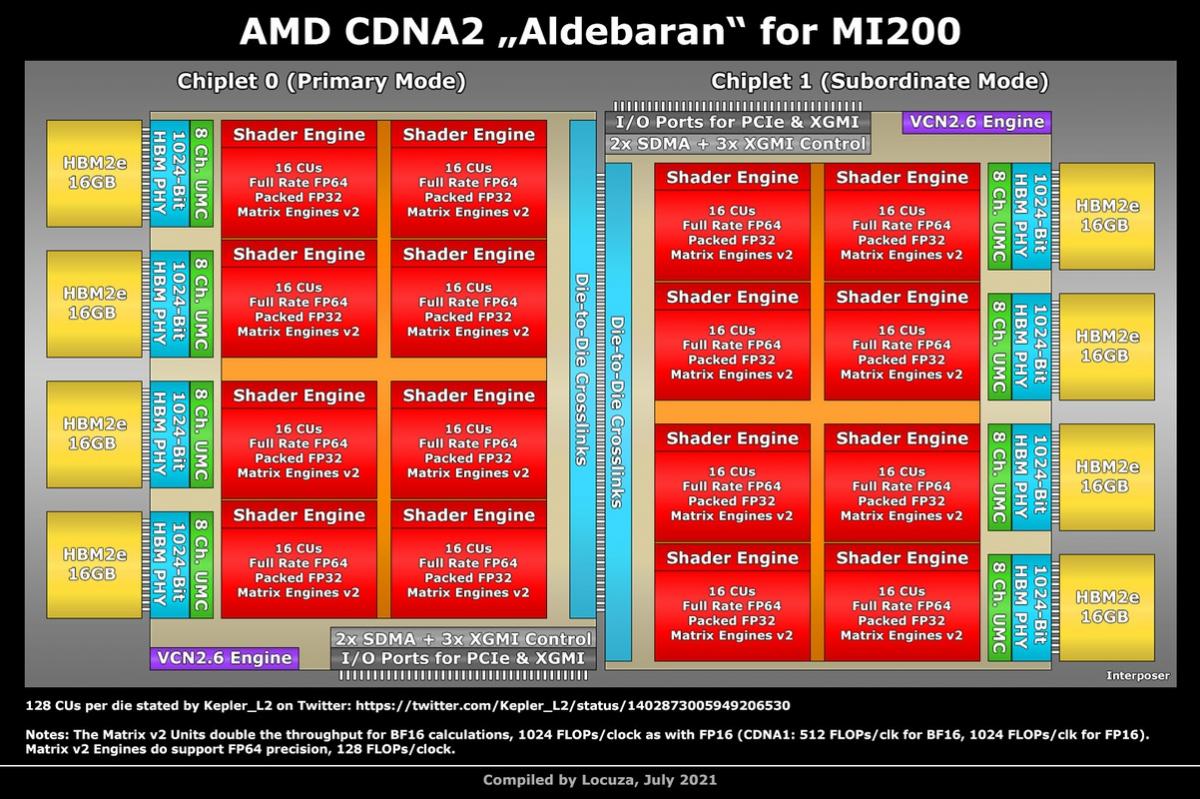

Last week, Twitter user Locuza shared a schematic of AMD's CDNA2-based GPU. It shows a multi-chip module (MCM) design: as with Ryzen processors, several dies holding the core components are combined in a single package. According to the diagram, the MI200 would consist of two chiplets and use a total of eight 16GB stacks of HBM2e. The accelerator reportedly contains 128 compute units per chiplet, for a total of 16,384 stream processors, although it is not yet known whether all of them are enabled.
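The rumored figures can be cross-checked with simple arithmetic. The sketch below assumes 64 stream processors per compute unit, the ratio used by the MI100 (120 compute units, 7,680 stream processors); whether CDNA2 keeps that ratio has not been confirmed. Under this assumption, 16,384 stream processors correspond to 256 compute units, i.e. 128 per chiplet in a two-chiplet design, whereas 128 compute units on their own would give only 8,192. The helper names are purely illustrative.

```python
# Back-of-the-envelope check of the rumored MI200 configuration.
# Assumption: each compute unit (CU) holds 64 stream processors (SPs),
# as on the MI100 (120 CUs x 64 = 7,680). Not confirmed for CDNA2.
SP_PER_CU = 64


def total_memory_gb(stacks: int, gb_per_stack: int) -> int:
    """Total HBM capacity from stack count and per-stack capacity."""
    return stacks * gb_per_stack


def stream_processors(compute_units: int, sp_per_cu: int = SP_PER_CU) -> int:
    """Stream processors implied by a compute-unit count."""
    return compute_units * sp_per_cu


mi100_sp = stream_processors(120)     # 7,680, matching the MI100's GPU core count
mi200_mem = total_memory_gb(8, 16)    # eight 16GB HBM2e stacks
cus_for_16k = 16_384 // SP_PER_CU     # CUs needed for 16,384 SPs

print(f"MI100 stream processors: {mi100_sp}")
print(f"MI200 memory: {mi200_mem} GB ({mi200_mem // 32}x the MI100's 32 GB)")
print(f"CUs for 16,384 SPs: {cus_for_16k} ({cus_for_16k // 2} per chiplet)")
```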

The MI200 is the first CDNA2-based accelerator and is slated for 2022. Its GPU is codenamed Aldebaran. AMD's Instinct accelerators are used in data centers and supercomputers.

| AMD Instinct Accelerators | AMD Instinct MI100 | AMD Instinct MI200* |
|---|---|---|
| Architecture | 7nm CDNA1 (GFX908) | CDNA2 (GFX90A) |
| GPU | Arcturus | Aldebaran (multi-chip module) |
| GPU cores | 7,680 | - |
| GPU clock | ~1500 MHz | - |
| FP16 compute | 185 TFLOPS | - |
| FP32 compute | 23.1 TFLOPS | - |
| FP64 compute | 11.5 TFLOPS | - |
| VRAM | 32GB HBM2 | 128GB HBM2e |
| Memory clock | 1200 MHz | - |
| Memory bus | 4096-bit | - |
| Memory bandwidth | 1.23 TB/s | - |
| Form factor | Dual slot, full length | - |
| Cooling | Passive | - |
| TDP | 300W | - |

*Specifications not yet officially confirmed.
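Several of the MI100 figures in the table follow from one another. The minimal sketch below rederives them under assumptions that are not stated in the table: one fused multiply-add (2 FLOPs) per stream processor per clock for FP32 vector math, FP64 at half the FP32 rate, and HBM2 transferring data on both clock edges. With the rounded ~1500 MHz clock it yields about 23.0 TFLOPS FP32, 11.5 TFLOPS FP64 and 1.23 TB/s; the table's 23.1 TFLOPS reflects a clock slightly above 1500 MHz. The FP16 figure depends on the matrix units and is not derived here.

```python
# Rough derivation of MI100 peak figures from the table's own values.
# Assumptions: 2 FLOPs (one FMA) per stream processor per clock for FP32,
# FP64 at half the FP32 rate, and double-data-rate HBM2.


def peak_tflops(cores: int, clock_mhz: float, flops_per_clock: float = 2.0) -> float:
    """Peak throughput in TFLOPS = cores x clock x FLOPs per clock."""
    return cores * clock_mhz * 1e6 * flops_per_clock / 1e12


def bandwidth_tb_s(bus_bits: int, mem_clock_mhz: float, transfers_per_clock: int = 2) -> float:
    """Memory bandwidth in TB/s = bus width in bytes x effective data rate."""
    return (bus_bits / 8) * mem_clock_mhz * 1e6 * transfers_per_clock / 1e12


fp32 = peak_tflops(7680, 1500)     # GPU cores x ~1500 MHz
fp64 = fp32 / 2                    # assumed half-rate FP64
bw = bandwidth_tb_s(4096, 1200)    # 4096-bit bus at 1200 MHz

print(f"FP32: {fp32:.2f} TFLOPS")
print(f"FP64: {fp64:.2f} TFLOPS")
print(f"Bandwidth: {bw:.2f} TB/s")
```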