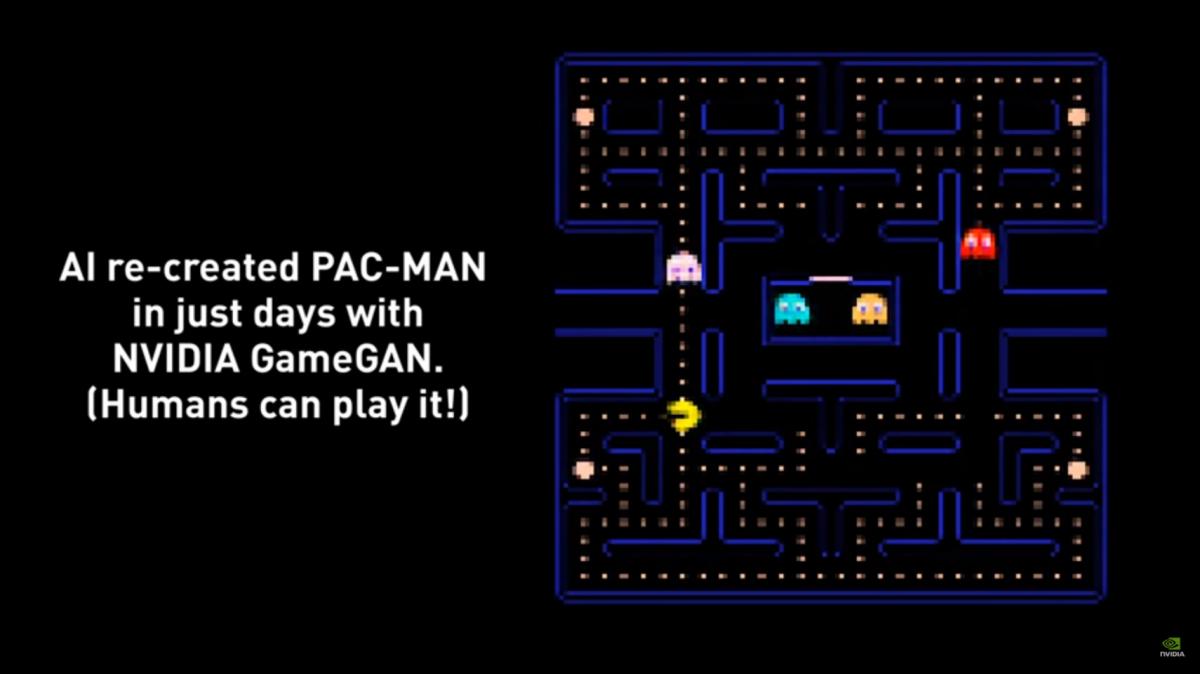

PAC-MAN is back, 40 years after its initial release! The retro classic has been reborn, delivered courtesy of AI. Trained on 50,000 episodes of the game, a new AI model created by NVIDIA Research, called NVIDIA GameGAN, can generate a fully functional version of PAC-MAN.

It does all this without an underlying game engine, which means that even without being given a game’s fundamental rules, AI can recreate the game with convincing results.

GameGAN is the first neural network model that mimics a computer game engine by harnessing generative adversarial networks, or GANs. Made up of two competing neural networks, a generator and a discriminator, GAN-based models learn to create new content that’s convincing enough to pass for the original.
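Here is a minimal sketch of the textbook GAN losses that shows the generator-versus-discriminator contest in concrete terms. It is written in plain Python over the discriminator's output probabilities rather than over real networks. This is the standard formulation (with the common "non-saturating" generator loss), not GameGAN's actual training objective, which isn't described here.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: the discriminator's probabilities that real samples are real.
    d_fake: the discriminator's probabilities that generated samples are real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: low when the discriminator scores fakes near 1."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)
```

A fooled discriminator (one that scores fakes near 1) gives the generator a low loss. A confident, correct discriminator keeps its own loss low. Training alternates between improving the two.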

“This is the first research to emulate a game engine using GAN-based neural networks,” said Seung-Wook Kim, an NVIDIA researcher and lead author on the project. “We wanted to see whether the AI could learn the rules of an environment just by looking at the screenplay of an agent moving through the game. And it did.”

As an artificial agent plays the GAN-generated game, GameGAN responds to the agent’s actions, generating new frames of the game environment in real time. GameGAN can even generate game layouts it’s never seen before, if trained on gameplay recordings from games with multiple levels or versions.
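At its core, this interaction is a loop: the agent picks an action, the model draws the next frame, and the cycle repeats. The sketch below is hypothetical. `engine` stands in for the trained network and `policy` for the playing agent, and neither name comes from NVIDIA.

```python
from typing import Any, Callable, List, Tuple

Frame = Any   # e.g. an image array; kept abstract here
State = Any   # whatever hidden state the model carries between steps

# A neural "engine": (previous frame, action, hidden state) -> (next frame, new state)
EngineStep = Callable[[Frame, str, State], Tuple[Frame, State]]
# An agent policy: current frame -> action such as "up", "down", "left" or "right"
Policy = Callable[[Frame], str]


def rollout(engine: EngineStep, policy: Policy, first_frame: Frame,
            init_state: State, steps: int) -> List[Frame]:
    """Let an agent 'play' a learned simulator for a fixed number of steps.

    No game rules are coded here: every new frame comes from `engine`,
    which in GameGAN's case would be a trained neural network.
    """
    frames = [first_frame]
    frame, state = first_frame, init_state
    for _ in range(steps):
        action = policy(frame)                        # the agent reacts to what it sees
        frame, state = engine(frame, action, state)   # the model generates the next frame
        frames.append(frame)
    return frames
```

Any callable with the right shape can be plugged in. A toy engine that just moves a counter left or right, for example, is enough to exercise the loop before a real model exists.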

This capability could be used by game developers to automatically generate layouts for new game levels, as well as by AI researchers to more easily develop simulator systems for training autonomous machines.

“We were blown away when we saw the results, in disbelief that AI could recreate the iconic PAC-MAN experience without a game engine,” said Koichiro Tsutsumi from BANDAI NAMCO Research Inc., the research development company of the game’s publisher BANDAI NAMCO Entertainment Inc., which provided the PAC-MAN data to train GameGAN. “This research presents exciting possibilities to help game developers accelerate the creative process of developing new level layouts, characters and even games.”

NVIDIA will make its AI tribute to the game available later this year on AI Playground, where anyone can experience its research demos firsthand.

In 1981 alone, Americans inserted billions of quarters to play 75,000 years’ worth of coin-operated games like PAC-MAN. Over the decades since, the hit game has seen versions for PCs, gaming consoles and cell phones.

The GameGAN edition relies on neural networks, instead of a traditional game engine, to generate PAC-MAN’s environment. The AI keeps track of the virtual world, remembering what’s already been generated to maintain visual consistency from frame to frame.
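A deliberately simple stand-in can illustrate the idea of "remembering what's already been generated." GameGAN's memory is a learned neural component. The dictionary below only sketches, under that simplifying assumption, the write-once, read-back behavior that keeps a revisited corridor looking the same. `WorldMemory` and `generate` are hypothetical names.

```python
from typing import Callable, Dict, Tuple

Position = Tuple[int, int]


class WorldMemory:
    """Toy memory that remembers what was generated at each map location.

    The first time a location is read, its content comes from `generate`
    (standing in for a generator network). Later reads return the stored
    content, so the maze looks the same when the player comes back.
    """

    def __init__(self, generate: Callable[[Position], str]) -> None:
        self._generate = generate
        self._cells: Dict[Position, str] = {}

    def read(self, pos: Position) -> str:
        if pos not in self._cells:
            self._cells[pos] = self._generate(pos)  # generate and store on first visit
        return self._cells[pos]                      # reuse stored content on revisits

    def write(self, pos: Position, content: str) -> None:
        """Overwrite a cell, e.g. after PAC-MAN eats the dot at this position."""
        self._cells[pos] = content
```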

In principle, the GAN can learn any game’s rules simply by ingesting screen recordings and agent keystrokes from past gameplay. Game developers could use such a tool to automatically design new level layouts for existing games, using gameplay footage from the original levels as training data.

With data from BANDAI NAMCO Research, Kim and his collaborators at the NVIDIA AI Research Lab in Toronto used NVIDIA DGX systems to train the neural networks on the PAC-MAN episodes (a few million frames, in total) paired with data on the keystrokes of an AI agent playing the game.
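Pairing recorded frames with keystrokes turns raw gameplay into training examples of the form (current frame, action, next frame). The exact data format NVIDIA used isn't given here, so the following hypothetical sketch shows just one simple arrangement. A model with memory, like the one described above, would consume whole sequences rather than isolated triples.

```python
from typing import List, Sequence, Tuple, TypeVar

F = TypeVar("F")  # frame type, e.g. an image array


def make_transitions(frames: Sequence[F], actions: Sequence[str]) -> List[Tuple[F, str, F]]:
    """Pair each frame with the keystroke pressed at that step and the frame that followed.

    Assumes actions[t] was pressed while frames[t] was on screen, so an episode
    of N frames comes with N - 1 actions.
    """
    if len(actions) != len(frames) - 1:
        raise ValueError("expected exactly one action between consecutive frames")
    return [(frames[t], actions[t], frames[t + 1]) for t in range(len(actions))]
```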

The trained GameGAN model then generates static elements of the environment, like a consistent maze shape, dots and Power Pellets — plus moving elements like the enemy ghosts and PAC-MAN itself.

It learns key rules of the game, both simple and complex. Just like in the original game, PAC-MAN can’t walk through the maze walls. He eats up dots as he moves around, and when he consumes a Power Pellet, the ghosts turn blue and flee. When PAC-MAN exits the maze from one side, he’s teleported to the opposite end. If he runs into a ghost, the screen flashes and the game ends.
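GameGAN picked these rules up from data alone. A conventional engine, by contrast, spells them out in code. Below is a hypothetical sketch of two of them, walls and the wraparound tunnel, on a made-up 5x5 maze. `MAZE`, `MOVES` and `step` are illustrative names only.

```python
from typing import List, Tuple

# Made-up maze: '#' is a wall, '.' is a walkable tile with a dot.
# The fully open middle row acts as a tunnel that wraps from one edge to the other.
MAZE: List[str] = [
    "#####",
    "#...#",
    ".....",
    "#...#",
    "#####",
]

MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(maze: List[str], pos: Tuple[int, int], action: str) -> Tuple[int, int]:
    """Apply two hand-coded rules: walls block movement, and edges wrap around."""
    rows, cols = len(maze), len(maze[0])
    dr, dc = MOVES[action]
    r = (pos[0] + dr) % rows  # leaving one side re-enters from the opposite side
    c = (pos[1] + dc) % cols
    if maze[r][c] == "#":
        return pos            # a wall: PAC-MAN stays where he is
    return (r, c)
```

A hand-written engine needs explicit code like this for every rule, including ghost behavior and Power Pellets. A learned simulator aims to avoid exactly that effort.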

Since the model can disentangle the background from the moving characters, it’s possible to recast the game to take place in an outdoor hedge maze, or swap out PAC-MAN for your favorite emoji. Developers could use this capability to experiment with new character ideas or game themes.
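Once the static background is separate from the moving characters, a new theme only requires swapping one layer before recombining them. The sketch below assumes ordinary per-pixel alpha compositing with a mask. GameGAN's renderer is a learned network, so treat this as an illustration of the idea rather than its implementation.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def composite(foreground: Image, mask: Image, background: Image) -> Image:
    """Alpha-blend a foreground layer over a background using a per-pixel mask.

    Mask values lie in [0, 1]: 1 keeps the foreground pixel, 0 shows the background.
    """
    return [
        [m * f + (1.0 - m) * b for f, m, b in zip(f_row, m_row, b_row)]
        for f_row, m_row, b_row in zip(foreground, mask, background)
    ]
```

Passing a hedge-maze image as `background` while keeping the same foreground and mask would recast the scene without touching the characters.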

Autonomous robots are typically trained in a simulator, where the AI can learn the rules of an environment before interacting with objects in the real world. Creating a simulator is a time-consuming process for developers, who must code rules about how objects interact with one another and how light works within the environment.

Simulators are used to develop autonomous machines of all kinds, such as warehouse robots learning how to grasp and move objects around, or delivery robots that must navigate sidewalks to transport food or medicine.

GameGAN introduces the possibility that the work of writing a simulator for tasks like these could one day be replaced by simply training a neural network.

“We could eventually have an AI that can learn to mimic the rules of driving, the laws of physics, just by watching videos and seeing agents take actions in an environment,” said Sanja Fidler, director of NVIDIA’s Toronto research lab. “GameGAN is the first step toward that.”

Well, NVIDIA, give it some RTX while you're at it, eh?
