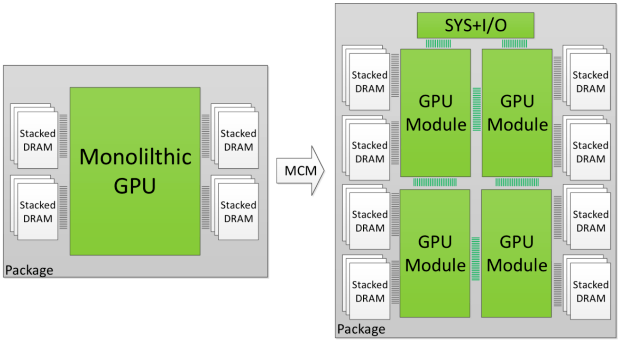

With Moore's law becoming harder to sustain each year, technology is bound to change. At some point it will be impossible to shrink transistors any further, so companies like Nvidia are already thinking about new methods and technologies to adapt to that. Meet the Multi-Chip-Module (MCM) GPU design.

Nvidia has published a paper that shows how multiple GPU modules can be connected to each other through an interconnect. According to the research, this would allow for bigger GPUs with more processing power. Not only would it help tackle the usual scaling problems, it would also be cheaper, as fabbing four smaller dies and connecting them costs less than producing one huge monolithic design.
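
Why would four small dies be cheaper than one big one? A rough intuition comes from yield: a single defect can scrap an entire die, so the bigger the die, the smaller the fraction that comes off the wafer working. The sketch below uses the textbook Poisson yield model (yield = e^(-area × defect density)) to compare wafer area spent per working GPU. The model, the 200 mm² module size and the defect density of 0.2 per cm² are all hypothetical assumptions for illustration, not figures from Nvidia's paper, and the sketch deliberately ignores packaging, interconnect and test costs.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson yield model."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_gpu(module_area_cm2: float, modules: int,
                         defects_per_cm2: float, monolithic: bool) -> float:
    """Wafer area (cm^2) spent per working GPU.

    Assumption: ignores wafer-edge loss, packaging, interconnect and test cost.
    """
    if monolithic:
        # One big die: a single defect anywhere scraps the whole GPU.
        area = module_area_cm2 * modules
        return area / poisson_yield(area, defects_per_cm2)
    # MCM: modules are tested individually, so only known-good dies
    # are packaged together and a defect scraps just one small die.
    per_die = module_area_cm2 / poisson_yield(module_area_cm2, defects_per_cm2)
    return modules * per_die


if __name__ == "__main__":
    # Hypothetical inputs: 2 cm^2 (200 mm^2) modules, four per GPU,
    # 0.2 defects per cm^2. These are NOT numbers from Nvidia's paper.
    mono = silicon_per_good_gpu(2.0, 4, 0.2, monolithic=True)
    mcm = silicon_per_good_gpu(2.0, 4, 0.2, monolithic=False)
    print(f"monolithic: {mono:.1f} cm^2, MCM: {mcm:.1f} cm^2 per good GPU")
    print(f"monolithic needs {mono / mcm:.2f}x more silicon")
```

With these made-up numbers the monolithic design needs roughly 3.3 times as much silicon per working GPU. In this simple model the ratio works out to e^(3 × module area × defect density), so the advantage grows quickly as dies get larger, which is exactly the regime of huge GPUs. In practice the extra packaging and interconnect cost, plus the performance loss from crossing module boundaries, eats into that advantage.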

Come to think of it, AMD is doing exactly this with its Threadripper and EPYC processors, where two to four Summit Ridge (Zen) dies are connected over a wide PCIe-lane link called Infinity Fabric (they use 64 PCIe lanes per link, with 128 available).

Taking a GPU built from four GPU modules as an example, the researchers propose three architectural optimizations that minimize the cost of data communication between the different modules. According to the paper, the resulting performance loss compared to a monolithic single-die chip would be merely 10%.

Of course, in essence SLI is already a similar methodology (though not the same technology); however, as you guys know, it can be rather inefficient and it struggles with scaling and compatibility. The paper states that this MCM design would perform 26.8% better than an equally equipped multi-GPU solution. If and when Nvidia will fab chips built from multiple GPU modules is not known; for now this is just a research paper on the topic. The fact that they published it does indicate it is bound to happen at some point, though.

Sorry, I could not resist ... ;)

Nvidia might be moving to Multi-Chip-Module GPU design