It has been rumored for a long time now: would NVIDIA be ballsy enough to release an 1100 series that has no Tensor and RT cores? Fact is, they are missing out on a lot of sales, as the current pricing stack is just too much to swallow for many people.

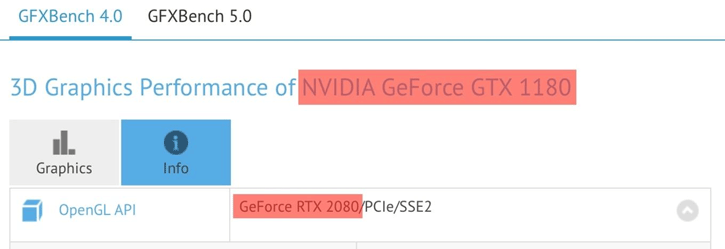

Meanwhile, Andreas over at Hardwareluxx (a German website) noticed that an NVIDIA GeForce GTX 1180 has appeared in the online database of GFXBench 4.0. The device ID is already recognized, and the hardware information also hints at how closely the GeForce GTX 1180 is related to the Turing cards: the device is listed as "GeForce RTX 2080 / PCIe / SSE2".

The entry could point to a complete GTX 11 product line. Looking closer, the entry shown above suggests a GeForce GTX 1180 with specs similar to the GeForce RTX 2080 (2,944 shader units) and a GeForce GTX 1160 that correspondingly matches the GeForce RTX 2060 (1,920 shader units).

I still don't believe that an 1100 series is inbound, for the simple reason that designing two architectures is too expensive; it just does not make much sense. But evidence and leaks are slowly proving me wrong on this.

New Signs of a GTX 1100 Series, GeForce GTX 1180 without RT cores?