Advanced shading and DLSS (AI AA)

Neural Graphics Architecture

We've covered shaders and raytracing, and you'd almost forget about the Tensor cores we briefly touched on in the previous pages. We now land at what NVIDIA refers to as its Neural Graphics Architecture. Simply put, this is a name for anything AI. Take advanced supersampling techniques that turn a low-resolution image into a high-quality, high-resolution one, or path tracing an image and then cleaning it up with a denoiser. Anything that enhances image quality for the final frame falls under AI image optimization. Then there's AI used inside the game itself. Wouldn't it be nice if an NPC were driven by AI that learns from the player (you), adapts, and tries to beat you based on your behavior and patterns? That's game-specific AI. AI could also be used for cheat detection and for facial and character animation. There are simply a lot of possibilities here. However, at this early stage of the technology, DLSS is the magic word and the feature a lot of you are going to use. It is part of NGX and performs supersampling anti-aliasing with the help of the Tensor cores inside the Turing GPU.

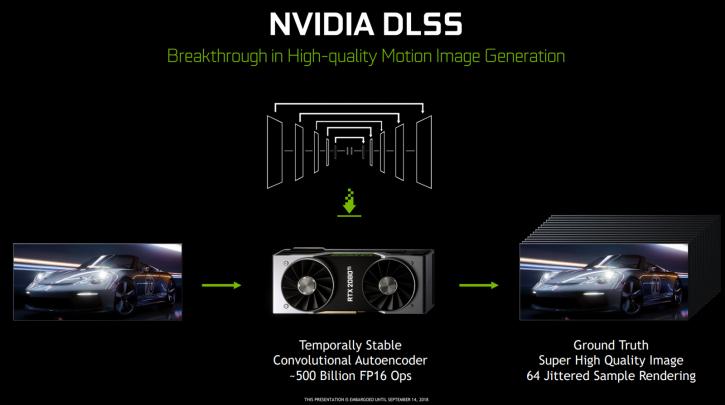

DLSS High-Quality Motion Image generation

Deep Learning Super Sampling (DLSS) is a supersampling AA algorithm that uses Tensor core-accelerated neural network inferencing to create what NVIDIA calls high-quality, supersampling-like anti-aliasing. By itself, a GeForce RTX graphics card offers a good performance increase over its last-gen counterpart: NVIDIA mentions roughly 50% higher performance under 4K HDR 60Hz conditions for the faster cards. That figure excludes real-time raytracing and DLSS; it is pure shading performance. Deep learning can now be applied in games as an alternative AA (anti-aliasing) solution. Basically, the shader engine renders your frame and passes it on to the Tensor cores, which supersample and analyze it based on an algorithm (not a filter), apply AA and pass it back. The new DLSS 2X function offers TAA-quality anti-aliasing at little to no cost: you render your game 'without AA' on the shader engine, then the frames are passed to the Tensor cores, which apply supersampling and perform anti-aliasing at a quality level comparable to TAA. That means supersampled AA at very little cost. At launch, a dozen or so games will support Tensor core-optimized deep learning AA, and more titles will follow. Contrary to what many believe, deep learning AA is not a simple filter. It is an adaptive algorithm, and the setting will eventually be available in the NVIDIA driver properties with a slider. Here's NVIDIA's explanation:

To train the network, we collect thousands of “ground truth” reference images rendered with the gold standard method for perfect image quality, 64x supersampling (64xSS). 64x supersampling means that instead of shading each pixel once, we shade at 64 different offsets within the pixel, and then combine the outputs, producing a resulting image with ideal detail and anti-aliasing quality. We also capture matching raw input images rendered normally.
Next, we start training the DLSS network to match the 64xSS output frames, by going through each input, asking DLSS to produce an output, measuring the difference between its output and the 64xSS target, and adjusting the weights in the network based on the differences, through a process called backpropagation. After many iterations, DLSS learns on its own to produce results that closely approximate the quality of 64xSS, while also learning to avoid the problems with blurring, disocclusion, and transparency that affect classical approaches like TAA. In addition to the DLSS capability described above, which is the standard DLSS mode, we provide a second mode, called DLSS 2X. In this case, DLSS input is rendered at the final target resolution and then combined by a larger DLSS network to produce an output image that approaches the level of the 64x super sample rendering - a result that would be impossible to achieve in real time by any traditional means.
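The training procedure NVIDIA describes is a standard supervised-learning loop: produce an output, measure the difference from the reference, and adjust the weights along the gradient. The sketch below is a toy stand-in, not NVIDIA's network. A hypothetical 3-tap linear filter is fitted by gradient descent so that it maps an aliased 1D signal onto its "reference" version, and the reference here is simply a fixed blur standing in for the 64xSS target. For a single linear layer, backpropagation reduces to the plain gradient of the mean squared error with respect to the weights.

```python
import random


def apply_filter(weights, signal):
    """Convolve a 1D signal with a 3-tap filter (taps at -1, 0, +1), clamping at the edges."""
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(weights):
            j = min(max(i + k - 1, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def make_pairs(num=10, length=16, seed=0):
    """Hypothetical training data: random hard-edged inputs and their blurred 'ground truth'."""
    rng = random.Random(seed)
    reference = [0.25, 0.5, 0.25]  # stand-in for the expensive reference resolve
    pairs = []
    for _ in range(num):
        aliased = [float(rng.randint(0, 1)) for _ in range(length)]
        pairs.append((aliased, apply_filter(reference, aliased)))
    return pairs


def train(pairs, steps=1000, lr=0.1):
    """Fit the filter weights to the targets by gradient descent on the MSE loss."""
    weights = [0.0, 1.0, 0.0]  # start as identity, i.e. "no anti-aliasing"
    for _ in range(steps):
        grads = [0.0, 0.0, 0.0]
        count = 0
        for inp, target in pairs:
            out = apply_filter(weights, inp)
            n = len(inp)
            for i in range(n):
                err = out[i] - target[i]  # difference between output and reference
                for k in range(3):
                    j = min(max(i + k - 1, 0), n - 1)
                    grads[k] += 2.0 * err * inp[j]  # d(err^2) / d(weight k)
                count += 1
        # Move every weight against its averaged gradient.
        weights = [w - lr * g / count for w, g in zip(weights, grads)]
    return weights
```

Calling `train(make_pairs())` should return weights close to the hidden reference `[0.25, 0.5, 0.25]`. A real DLSS network has many layers and far more parameters, but the measure-difference-then-adjust loop is the same idea.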

Let me also state clearly that it's not a 100% perfect supersampling AA technology, but from what we have seen so far it's pretty good. Because DLSS runs on the Tensor cores, your shader engine is offloaded, so you're rendering the game at roughly the performance of no AA. In short, a normal-resolution frame gets output with very high-quality AA. DLSS 2X aims to come close to the quality of 64x supersampled AA, all done with deep learning and run on the Tensor cores, since the network is trained against supersampled reference images.
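To make the 64xSS reference a little more concrete, here is a minimal sketch of supersampling a single pixel: shade it at a grid of sub-pixel offsets and average the results. The 8 x 8 ordered grid (8 x 8 = 64 samples) and the `shade` scene, a hard diagonal edge, are assumptions for illustration; the source does not say which sample pattern NVIDIA uses.

```python
def shade(x, y):
    """Hypothetical scene: white (1.0) below the line y = 0.5 * x, black above it."""
    return 1.0 if y < 0.5 * x else 0.0


def render_pixel(px, py, grid=1):
    """Shade pixel (px, py) at grid x grid sub-pixel offsets and average the outputs."""
    total = 0.0
    for sy in range(grid):
        for sx in range(grid):
            # Place each sample at the center of its sub-cell within the pixel.
            x = px + (sx + 0.5) / grid
            y = py + (sy + 0.5) / grid
            total += shade(x, y)
    return total / (grid * grid)


def render(width, height, grid=1):
    """Render a small image; grid=1 is one sample per pixel, grid=8 is 64x supersampling."""
    return [[render_pixel(px, py, grid) for px in range(width)] for py in range(height)]
```

For the edge pixel at (0, 0), one sample at the pixel center misses the white area entirely and returns 0.0, while 64 samples return 0.25, which matches the true fraction of the pixel covered by the white region. Shading 64 times per pixel is exactly why 64xSS is far too expensive for real time and only usable for generating training references.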


More advanced shading

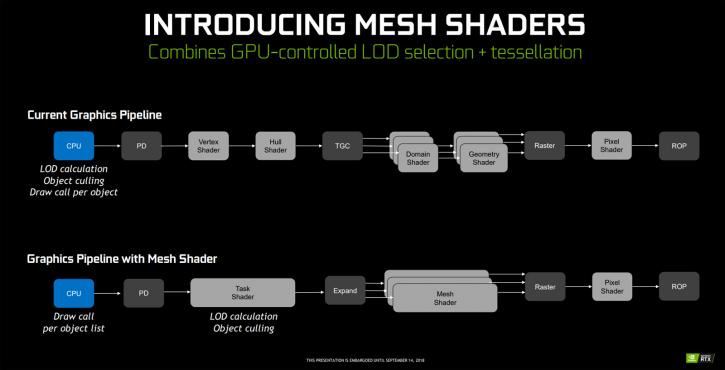

Starting with Turing and the RTX cards, NVIDIA is also opening a new chapter in shader processing: mesh shading, variable rate shading and motion adaptive shading. Mesh shading allows NVIDIA to move work off the CPU. Instead of the CPU processing every object itself, it hands over a list of objects, and a shader program on the GPU works through that list and passes the results on into the pipeline. The goal is efficiency: spend fidelity where you need it rather than rendering everything at full detail.
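The kind of decision that moves from the CPU to the GPU with mesh shading can be illustrated with a small sketch: take the object list, drop objects that cannot be visible, and pick a level of detail for the rest. This is plain Python modelling the idea only; on real hardware this work runs in the task and mesh shader stages of a graphics API. The `SceneObject` type, the simplified near/far culling test and the distance thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    name: str
    center: tuple  # (x, y, z) in view space; camera at the origin looking down +z
    radius: float  # bounding-sphere radius


def select_work(objects, far=100.0, lod_distances=(10.0, 30.0, 60.0)):
    """Cull objects and choose a level of detail (0 = most detailed) for each survivor."""
    selected = []
    for obj in objects:
        x, y, z = obj.center
        # Cull objects entirely behind the camera or entirely beyond the far plane.
        if z + obj.radius < 0.0 or z - obj.radius > far:
            continue
        distance = (x * x + y * y + z * z) ** 0.5
        # Each distance threshold passed selects a coarser mesh.
        lod = sum(1 for d in lod_distances if distance > d)
        selected.append((obj.name, lod))
    return selected
```

With a car 5 units ahead, a tree at 40, a mountain at 90 and two objects that are behind the camera or past the far plane, only three objects survive, and the distant ones get coarser meshes. That is the "use fidelity where you need it" idea in miniature.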

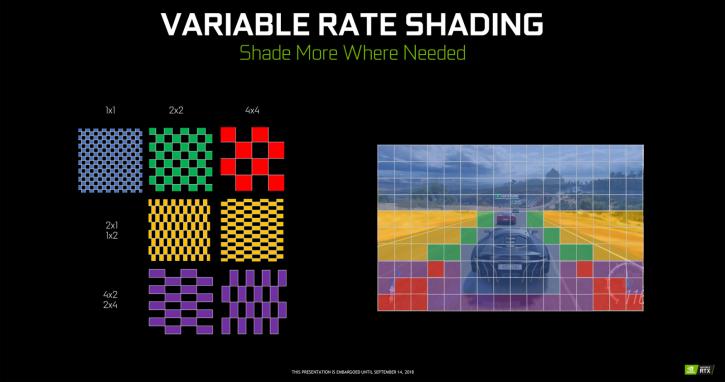

Variable rate shading is precisely what it sounds like; in simple terms, you could think of it as eye-tracked rendering. When you look at your monitor, your eyes focus on the object you are watching, and pretty much anything more than about 10 cm away from it gets blurry. NVIDIA uses that: with variable rate shading it can concentrate shading where you look, i.e. where the focus is, fully rendering only the segments of the screen you are actually looking at.
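A minimal sketch of a gaze-driven shading-rate map: the screen is split into 16 x 16 pixel tiles, tiles near the gaze point are shaded at full rate, and tiles further out are shaded coarsely. The radii, the three rate levels and the fixed gaze point (the screen center, as you would assume without an eye tracker) are hypothetical choices for illustration, not NVIDIA's settings.

```python
import math

TILE = 16  # pixels per side of a shading-rate tile


def foveated_rate_map(width, height, gaze, inner=200.0, outer=500.0):
    """Per-tile shading rate n (meaning one shade per n x n pixels) based on distance to gaze."""
    gx, gy = gaze
    tiles_x = math.ceil(width / TILE)
    tiles_y = math.ceil(height / TILE)
    rates = []
    for ty in range(tiles_y):
        row = []
        for tx in range(tiles_x):
            cx = tx * TILE + TILE / 2  # tile center in pixels
            cy = ty * TILE + TILE / 2
            d = math.hypot(cx - gx, cy - gy)
            row.append(1 if d <= inner else 2 if d <= outer else 4)
        rates.append(row)
    return rates


def shader_invocations(rates):
    """Pixel-shader invocations needed; a tile at rate n costs (16 / n)^2 (edge tiles counted in full)."""
    return sum((TILE // n) ** 2 for row in rates for n in row)
```

For a 1920 x 1080 frame with the gaze at (960, 540), the tile under the gaze stays at 1 x 1 while the corners drop to 4 x 4, and the total invocation count ends up well below the 120 x 68 x 256 needed to shade every tile at full rate.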

If you look at the photos above, you can see the shader engine is predominantly active on the car and on the road ahead and to the sides, which is what your eyes are focusing on. The parts in red get less shading applied, those in green the most. In other words, this enables the GPU to change shading rates on the fly between different fragments of each primitive. Additionally, or independently, the GPU uses each shading rate parameter to determine how many sample positions are covered by the computed shaded output (e.g., the fragment color), allowing one color sample to be shared across two or more pixels. In even fancier wording, you could look at this as favoring or biasing the MIP-map level on a per-triangle basis: check the normal variance, and bias the MIP-map level for lighting calculations if the triangle is relatively flat. It's also possible to shade at different rates for the same fragment. The GeForce RTX 2070, 2080 and 2080 Ti all support variable rate shading. By the way, this new shading technology will likely find its way to HMDs very quickly: in VR you need the quality where your gaze is, while whatever you are not looking at directly can be of lower quality. There's a lot of performance to gain in VR.
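The color sharing described above, where one shaded result covers two or more pixels, can be modelled on the CPU as shading once per block and writing that color to every pixel in the block. This is only a conceptual sketch: on Turing the hardware does this inside the pixel pipeline, and `shade_coarse` and its `shader` callback are hypothetical names.

```python
def shade_coarse(width, height, shader, rate):
    """Shade once per rate x rate block and broadcast the color to all pixels in it.

    Returns (image, invocations); rate=1 is ordinary per-pixel shading.
    """
    image = [[0.0] * width for _ in range(height)]
    invocations = 0
    for by in range(0, height, rate):
        for bx in range(0, width, rate):
            # One shader invocation, evaluated at the center of the block...
            color = shader(bx + rate / 2, by + rate / 2)
            invocations += 1
            # ...whose result is shared by every pixel the block covers.
            for y in range(by, min(by + rate, height)):
                for x in range(bx, min(bx + rate, width)):
                    image[y][x] = color
    return image, invocations
```

On an 8 x 8 region, rate 1 needs 64 shader invocations, 2 x 2 needs 16 and 4 x 4 needs only 4, which is where the performance gain comes from; the cost is that all pixels in a block receive the same color.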

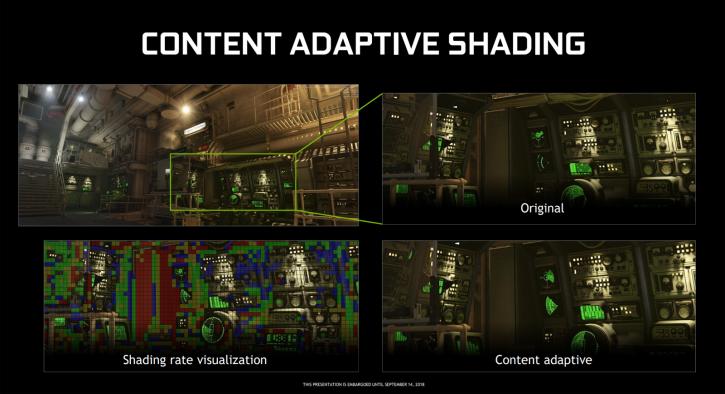

Content Adaptive Shading

With Content Adaptive Shading, the shading rate is lowered by considering factors such as spatial and temporal (across frames) color coherence. The desired shading rates for different parts of the next frame are computed in a post-processing step at the end of the current frame. If the amount of detail in a particular region was relatively low (sky, a flat wall, etc.), the shading rate can be lowered locally in the next frame. The output of the post-process analysis is a texture specifying a shading rate per 16 x 16 tile, and this texture drives the shading rate in the next frame. A developer can implement content-based shading rate reduction without modifying the existing pipeline, and with only small changes to the shaders.

Figure 31 shows an example application of Content Adaptive Shading. Watching it in action in real time is of course the best way to appreciate its effectiveness, but screenshots are used here to illustrate its operation. The green box in the upper-left full-screen image marks the crop area that is zoomed into. The lower left shows this area magnified, with a shading rate overlay. Note that the flat vertical wall is shaded at the lowest rate (red = 4 x 4), while the gauges and dials are shaded at full rate (no color overlay = 1 x 1), and various intermediate rates are used elsewhere in the scene. On the right side, the upper and lower images show this cropped area with Content Adaptive Shading off (top) versus on (bottom), with no visual difference in image quality (the images differ slightly because they were captured at different points in the instrument panel animations).
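The post-process analysis can be sketched as follows: measure how much the luminance varies inside each 16 x 16 tile of the finished frame and turn that into a per-tile rate for the next frame. This sketch assumes luminance variance as the detail measure and uses made-up thresholds; it covers only the spatial part, leaving out the temporal (across-frame) coherence the text also mentions, and it is not NVIDIA's actual heuristic.

```python
TILE = 16  # shading-rate tile size in pixels


def tile_variance(luma, tx, ty):
    """Variance of the luminance values inside one tile (partial edge tiles allowed)."""
    values = [luma[y][x]
              for y in range(ty * TILE, min((ty + 1) * TILE, len(luma)))
              for x in range(tx * TILE, min((tx + 1) * TILE, len(luma[0])))]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def content_adaptive_rates(luma, low=1e-4, high=1e-2):
    """Per-tile shading rates for the next frame from this frame's luminance.

    Flat tiles (variance < low) get 4 x 4, moderately detailed tiles get 2 x 2,
    and busy tiles (variance >= high) keep full 1 x 1 shading.
    """
    tiles_y = -(-len(luma) // TILE)  # ceiling division
    tiles_x = -(-len(luma[0]) // TILE)
    rates = []
    for ty in range(tiles_y):
        row = []
        for tx in range(tiles_x):
            v = tile_variance(luma, tx, ty)
            row.append(4 if v < low else 2 if v < high else 1)
        rates.append(row)
    return rates
```

For an image whose left third is a flat wall, middle third a gentle gradient and right third a fine checkerboard (think gauges and dials), the map comes out as 4 x 4, 2 x 2 and 1 x 1 respectively, mirroring the red-wall versus full-rate-dials result in the screenshots.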

Texture Space Shading

Turing GPUs introduce a new shading capability called Texture Space Shading (TSS), where shading values are dynamically computed and stored in a texture as texels in a texture space. Later, pixels are texture mapped: pixels in screen space are mapped into texture space, and the corresponding texels are sampled and filtered using a standard texture lookup operation. With this technology, we can sample visibility and appearance at completely independent rates, and in separate (decoupled) coordinate systems. Using TSS, a developer can simultaneously improve quality and performance by (re)using shading computations done in a decoupled shading space. Developers can use TSS to exploit both spatial and temporal rendering redundancy. By decoupling shading from the screen-space pixel grid, TSS can achieve a high level of frame-to-frame stability, because shading locations do not move between one frame and the next. This temporal stability is important to applications like VR that require greatly improved image quality, free of aliasing artifacts and temporal shimmer.

TSS has intrinsic multi-resolution flexibility, inherited from texture mapping's MIP-map hierarchy, or image pyramid. When shading for a pixel, the developer can adjust the mapping into texture space and which MIP level (level of detail) is selected, and consequently exert fine control over shading rate. Because texels at low levels of detail are larger, they cover larger parts of an object and possibly multiple pixels. TSS remembers which texels have been shaded and only shades those that have been newly requested. Texels shaded and recorded can be reused to service other shade requests in the same frame, in an adjacent scene, or in a subsequent frame. By controlling the shading rate and reusing previously shaded texels, a developer can manage frame rendering times, and stay within the fixed time budget of applications like VR and AR.
Developers can use the same mechanisms to lower shading rate for phenomena that are known to be low frequency, like fog. The usefulness of remembering shading results extends to vertex and compute shaders and general computations. The TSS infrastructure can be used to remember and reuse the results of any complex computation.
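The bookkeeping behind "remember which texels have been shaded and only shade newly requested ones" can be sketched with a dictionary keyed by MIP level and texel coordinates. This models the idea on the CPU only; `TexelCache`, `shade_texel` and `base_size` are hypothetical names and say nothing about how Turing or the graphics APIs actually expose TSS.

```python
class TexelCache:
    """Shade texels on demand and reuse them: a sketch of TSS-style reuse.

    shade_texel(level, tu, tv) stands in for an expensive shading computation.
    """

    def __init__(self, shade_texel, base_size=1024):
        self.shade_texel = shade_texel
        self.base_size = base_size  # texels per side at MIP level 0
        self.cache = {}
        self.shaded = 0  # number of real shading invocations so far

    def lookup(self, u, v, level):
        """Return the shaded value for texture coordinates (u, v) in [0, 1) at a MIP level."""
        size = max(self.base_size >> level, 1)  # each MIP level halves the resolution
        key = (level, int(u * size), int(v * size))
        if key not in self.cache:
            # Only shade texels that have not been shaded before.
            self.cache[key] = self.shade_texel(*key)
            self.shaded += 1
        return self.cache[key]
```

If 64 screen pixels map onto an 8 x 8 texel patch at MIP level 0, all 64 texels are shaded. At MIP level 3 (one-eighth the resolution per side) the same 64 requests land in a single larger texel and cost one shading invocation, which is how picking a coarser level lowers the shading rate for low-frequency effects like fog. Repeating the level-0 requests in a later frame costs nothing, because the results are remembered.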