Page 2 - Panel Discussions

GeForce FX and 3DMark03

As was pointed out on the web a few months ago, it appeared that the GeForce FX was cheating on the 3DMark03 benchmark suite by not rendering certain areas to gain speed. This particular problem caused NVIDIA to implement a whole new optimization and testing strategy for its drivers. Under the new rules, an optimization must produce the correct image, can't contain any pre-computed state, and can't be for just a benchmark.

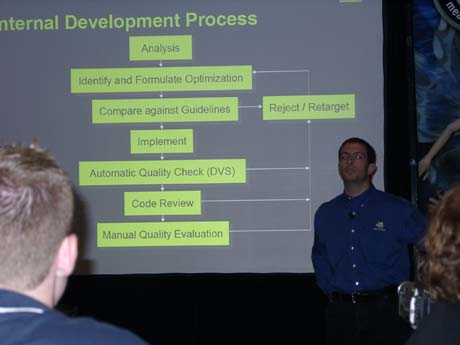

Take a look at the slide. The new multi-step process has multiple checks and can fail at any step of the way, returning development to square one. NVIDIA is proud of its drivers, and now with this brand-spanking new QA, some very good drivers will get even better.

As of 44.03, released some months ago, the NVIDIA drivers were re-architected such that the 3DMark03 'cheating' was taken care of. NVIDIA stated that the drivers, pre 44.03, simply realized that they didn't need to draw certain areas of the benchmark scene, and so they didn't. It wasn't a 3DMark03-specific optimization, just a general optimization that the drivers would apply to other applications as well. In keeping with its quarterly driver releases, NVIDIA will also put out a new driver sometime this week: the ForceWare 50.x series. The 50.x series will have much better optimizations all around for the GeForce FX, and should show a good performance boost overall. Eventually, NVIDIA wishes to get so good at writing drivers that it will implement a yearly driver release schedule.

The question that was on everybody's mind was about NVIDIA's implementation of the DX9 Pixel Shader 2.0 and High Dynamic Range Lighting. NVIDIA's approach to DX9 is different from the 'Red' company's, and not necessarily worse, just different. NVIDIA was quick to point out that there were problems with the DX9 specs in regard to precision, and also that no one really does PS 2.0 in 32-bit precision all the time. Most games use a mixed mode of 16-bit, 24-bit, and 32-bit with PS 2.0.

In a great talk given by Chief Technology Scientist David Kirk, NVIDIA basically claimed that if 16-bit precision is good enough for Pixar and Industrial Light and Magic, for use in their movie effects, it's good enough for NVIDIA. There's not much use for 32-bit precision PS 2.0 at this time due to limitations in fabrication and cost, and most notably, games don't require it all that often. The design trade-off is that they made the GeForce FX optimized for mostly FP16. It can do FP32, when needed, but it won't perform very well. Mr. Kirk showed a slide illustrating that with the GeForce FX architecture, its DX9 components have roughly half the processing power of its DX8 components (16 Gflops as opposed to 32 Gflops, respectively). I know I'm simplifying, but he did point it out, very carefully.
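To get a feel for what the FP16 versus FP32 trade-off means in numbers, here is a minimal sketch of my own, not anything NVIDIA showed. It assumes the GeForce FX's FP16 behaves like the IEEE 754 half-precision layout (1 sign bit, 5 exponent bits, 10 mantissa bits), and it only rounds values on the CPU using Python's `struct` module; it says nothing about shader speed. The helper names and sample values are hypothetical, picked purely for illustration.

```python
import struct


def round_to_fp16(x: float) -> float:
    """Round x to the nearest half-precision (16-bit) float.

    Assumption: the hardware's FP16 matches the IEEE 754 binary16 layout.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def round_to_fp32(x: float) -> float:
    """Round x to the nearest single-precision (32-bit) float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def rounding_error(x: float, rounder) -> float:
    """Absolute error introduced by storing x at the given precision."""
    return abs(rounder(x) - x)


# Hypothetical sample values: a color-like fraction, a texture coordinate
# inside a 2048-texel-wide texture, and a large texel index.
samples = [0.1, 1234.5 / 2048.0, 2049.0]

for value in samples:
    print(
        f"value={value!r:>12}  "
        f"fp16 error={rounding_error(value, round_to_fp16):.3e}  "
        f"fp32 error={rounding_error(value, round_to_fp32):.3e}"
    )
```

With only 10 mantissa bits, half precision cannot represent every integer above 2048, so storing 2049 at FP16 lands on a neighboring value. That is plenty for most color math, which fits the point made in the talk, but it can fall short for things like addressing into large textures.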

This is where NVIDIA's developer relations group kicks in and works very hard with a developer to write code that is optimized for the GeForce FX architecture. Let's face it, it isn't a performance problem with the NV3x hardware. It does lots of math really fast. The message that NVIDIA wants to send to developers is, "We're going to kick everyone's ass." That's a direct quote, actually.

During the technical presentation, there was a surprise of sorts. NVIDIA, along with the assistance of Mark Rein from Epic Games, pointed out a rendering flaw in ATI's R360. The ATI hardware did not seem to render all of the detail textures. This was a huge surprise to the audience, as none of us had seen the flaw in ATI hardware before.

If you look very closely, and squint a bit, the wall on the 9800XT is missing one detail texture, which looks like random dark splotches.

There was a second example, in the Massive Overdraw scene of the AquaMark 3.0 benchmark, where NVIDIA pointed out another flaw in ATI's rendering strategy. The scene should look like a washed-out bright spot, but the 'Red' company's render of the same frame looked like several textures were being left out.

There were also quite a few pointed barbs at ATI, oops, I mean the 'Red' company. All of the developers present, some seemingly acting out some sort of strained confessional, were pointing out that sure, the ATI cards were indeed faster, but they weren't drawing everything either, like the detail textures and alpha values. These kinds of image quality issues are hard to prove, so take them with a grain of salt.

Apparently NVIDIA has known about these particular ATI issues for some months now, but as a general practice, it doesn't write white papers about its competitors' hardware. Of course, NVIDIA was not keen on commenting on the broken FSAA and anisotropic filtering with Halo or Splinter Cell, nor were the developers. We never did get a satisfactory answer about those issues.