Final words and conclusion

Final words

Man, what a beast. It might have been a long wait, but it has been worth it. Ampere certainly delivers on all fronts. The fact that we still have to test the GeForce RTX 3090 makes my eyebrows stick in that upwards position. This conclusion page, however, is all about the GeForce RTX 3080 Founders Edition and what it brings to the table. We have a lot to discuss as, yes, there are a few pros and some cons.

Performance

We need to start with performance, as that's really what this card is all about, and it delivers outstanding values. Yes, at Full HD you'll quite often be bottlenecked and CPU limited. But even there, in some games with proper programming and the right API (DX12/ASYNC), the sheer increase in performance is staggering. The good old rasterizer engine first, as hey, it is still the leading factor. Purely from a shading/rasterizing point of view, you're looking at a relative performance of 125% to 160% compared with the similarly priced GeForce RTX 2080 (SUPER), so that is a tremendous step. The number of Shader processors is staggering. The new FP32/INT32 combo clusters remain a compromise that will work extremely well in most cases, but likely not all of them. But even then, there are so many Shader cores that not once was the tested graphics card slower than an RTX 2080 Ti; in fact (and I do mean in GPU bound situations), the RTX 3080 stays ahead at a relative 125% at the very least, but more often 150% and even 160%. Performance-wise we can finally say, hey, this is a true Ultra HD capable graphics card (aside from Flight Simulator 2020, haha, that title needs D3D12/ASYNC and some DLSS!). The good news is that any game that uses traditional rendering will run excellently at 3840x2160. Games that can ray-trace and manage DLSS also become playable in UHD. A good example is Battlefield V with ray-tracing and DLSS enabled, now running in that 75 FPS bracket in Ultra HD. Well, you've seen the numbers in the review, I'll mute now.

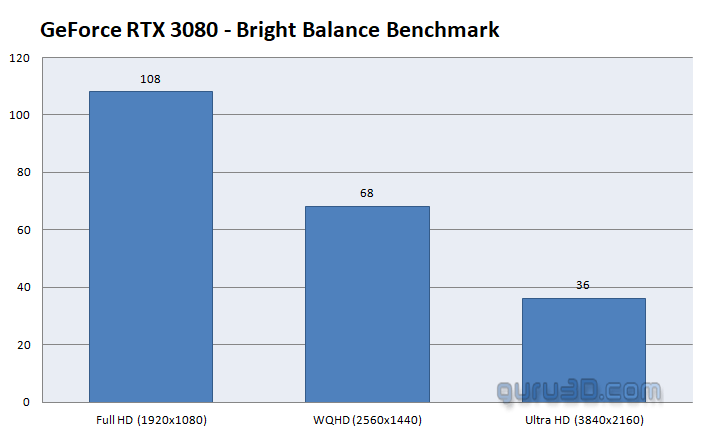

DXR ray-tracing and Tensor performance: the RTX 30 series has received new Tensor and RT cores, so don't let the RT and Tensor core counts confuse you. They sit right inside that rendering engine, they have become more efficient, and that shows. If we look at an RTX 2080 with Port Royal, we hit almost 30 FPS. The RTX 3080 nearly doubles that at 53 FPS. Tensor cores are harder to measure, but overall, from what we have seen, it's all in good balance.

Overall though, the GeForce RTX 3080 starts to make sense at a Quad HD resolution (2560x1440), but again I deem this to be an Ultra HD targeted product, whereas for 2560x1440 I'd see the GeForce RTX 3070 playing a more important role in terms of sense and value for money. At Full HD then, there's the inevitable GeForce RTX 3060, whenever that may be released. Games like Red Dead Redemption 2 will make you aim, shoot, and smile at 70 FPS in UHD with the very best graphics settings. As always, it's comparing apples and oranges; the performance results vary here and there, as each architecture offers advantages and disadvantages in certain game render workloads.

So, for the content creators among us, have you seen the Blender and V-Ray NEXT results? No? Go to page 30 of this review, and your eyes will pop out. The sheer compute performance has nearly doubled, a big step in the right direction.

We need to stop for a second and talk VRAM, aka framebuffer memory. The GeForce RTX 3080 is fitted with new GDDR6X memory; it clocks in at 19 Gbps, and that is a freakfest of memory bandwidth, which the graphics card likes. You'll get 10GB of it. I can also tell you that there are plans for a 20GB version. We think that initially the 20GB version was to be released as the default, but for reasons none other than the bill of materials, it became 10GB. In the year 2020 that is a very decent amount of graphics memory. Signals are, however, that the 20GB version may become available at a later stage, for those that want to run Flight Simulator 2020; haha, that was a pun, sorry. We feel 10GB right now is fine, but with DirectX Ultimate, added scene complexity and ray-tracing becoming the new norm, I am not so sure that will still be enough two years from now.
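For readers who want to sanity-check the "relative" percentages used on this page, here is a minimal Python sketch. It assumes the percentages are read as an index where the older card equals 100%, and it uses the Port Royal figures quoted above (almost 30 FPS for the RTX 2080, rounded to 30 here, and 53 FPS for the RTX 3080). The function names are purely illustrative and not part of any benchmark tool.

```python
def relative_performance(new_fps: float, baseline_fps: float) -> float:
    """Return new_fps as a percentage of baseline_fps (baseline = 100%)."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return new_fps / baseline_fps * 100.0


def uplift_percent(new_fps: float, baseline_fps: float) -> float:
    """Return the increase over the baseline, in percent."""
    return relative_performance(new_fps, baseline_fps) - 100.0


if __name__ == "__main__":
    # Port Royal figures from this page; "almost 30 FPS" is rounded to 30.
    rtx_2080_fps = 30.0
    rtx_3080_fps = 53.0

    rel = relative_performance(rtx_3080_fps, rtx_2080_fps)
    gain = uplift_percent(rtx_3080_fps, rtx_2080_fps)
    print(f"Relative performance: {rel:.0f}% (uplift: {gain:.0f}%)")
```

With those two numbers the RTX 3080 lands at roughly 177% of the RTX 2080 in Port Royal, which is where "nearly doubles" comes from. A relative 125% simply means 25% faster than the baseline.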

Cooling & noise levels

The new cooling design looks awesome and 'it just works'. It has made the product quieter overall. In extremely stressed conditions we did hit 38 dBA, but that is still considered a quiet to normal acoustic level. Depending on the level of airflow inside your chassis, expect the card to sit in the 75~80 degrees C range under hefty load conditions. As the FLIR images show, though, the top side of the card exhibits substantial heat bleed.

Energy

The power draw under intensive gaming for the GeForce RTX 3080 is significant. We measured it to be in the 340 Watt range. Now, that is a peak value under stressed conditions. Gaming-wise, that number will fluctuate a bit. Are we happy with that amount of energy consumption in the year 2020? No, not at all. Will you as an end consumer care about it? We doubt that as well. Our power consumption measurements are based on a shading-based test. That did make us wonder what would happen if we tried combos of ray-tracing and DLSS in that energy consumption mix. Check below; please note that the listed wattage is the power consumption of the entire PC, not of the graphics card alone:

- Power consumption, shader performance: 490 Watts

- Power consumption, shader performance, DLSS OFF: 480 Watts

- Power consumption, RTX ON, DLSS ON: 480 Watts

Coil whine

The GeForce RTX 3080 does exhibit some coil squeal. Is it annoying? Hmm, it's at a level where you can hear it. In a closed chassis that noise would fade away into the background. With an open chassis, however, you can hear the coil whine/squeal.

Pricing

NVIDIA is pricing the GeForce RTX 3080 at USD 699. It's a great deal of money, especially considering what the upcoming consoles are going to bring to the table. It is, however, not exorbitantly more expensive when seen in comparison to last-gen products. In fact, NVIDIA has priced the 3080 the same as the RTX 2080 SUPER and, for the same amount of money, you'll receive a much better performing product on all fronts. So in that respect, pricing is good. My main worry currently is that NVIDIA opted for the 8nm Samsung node over 7nm TSMC, which could lead to volume shortages and thus a lack of availability of the GPUs in the first months after release. And shortages will always drive up prices at etailers. The good thing here, however, is that you can purchase the Founders cards directly from NVIDIA at MSRP.

Cons

The sheer rendering power has gone up unexpectedly high; however, so has power consumption, which has risen significantly. The 12-pin adapter cable then; we feel that the new PCIe PEG power connector works really well. It's a more gentle and subtle solution. It will, however, only look nice if you have a proper dedicated 12-pin power cable coming from the PSU, and PSU manufacturers are working on that. The problem with the current adapter cable is that it's relatively short, so it is not easy to hide. The cooler design then; no matter how I look at it, in a year these black fins will catch some dust. Also, if you mount your graphics card horizontally, hot air will be blowing towards the CPU and VRM area. Not an issue per se, but with a chassis that exhibits poor airflow and a heat pipe cooled processor, this does raise a bit of a red flag. We also have to make note of the top side PCB temperatures reaching 90 degrees C. If you are uncomfortable with that, a custom AIB card is probably something for you to look out for. Lastly, we have to make a note of the 10GB of GDDR6X graphics memory; it might not be enough for the years to follow. 20GB or even 16GB would have been a more sound choice.

Ray-tracing

So what is the state of Hybrid Ray-tracing combined with rasterized Shading? Well, with the RTX 2000 series, NVIDIA was pioneering, and they ran into snags that were mostly performance related. Still, titles like Battlefield V have been playable at the somewhat lower resolutions with RTX enabled. The good news is that DXR performance seems to have almost doubled and, while it's still not extremely fast, it should be fast enough for most titles in Full HD, Quad HD and even some in Ultra HD. Especially with DLSS 2.0 applied, things look fantastic. Ray-tracing is a trend; you'll see it on the new 2020 consoles and you'll also see it from competing products. Not because it's RTX, no, but because Microsoft added DirectX ray-tracing. It is the future of game rendering, and in the coming years things should start to take off. I want to show you the following video I recorded:

Above, you can see the new Bright Memory RTX Benchmark. Bright Memory is an indie game in development that has added ray-traced reflections and NVIDIA DLSS. It is built on Unreal Engine 4 and combines the ranged shooting style of a traditional FPS with quick-paced combo attacks from melee skills and close-range abilities typically found in action titles. Since this is an RTX showcase, we cannot use it as a benchmark. But it does give an example of what's possible. We enabled RTX at the Very High (highest) settings and DLSS in its best Quality mode. Here are the results:

Please relate the FPS to what you just observed in the video. Try to imagine gaming like that; yes, we're still not there FPS-wise in Ultra HD, but we have more cards to test and the future does look bright, as this is just staggering to see. That's the future of game render quality. And sure, I know it is a showcase, ergo we have not included it in the benchmark suite. Just trying to make a point here. BTW, YouTube immediately claimed a copyright issue after uploading; any ads come from the claimant (we don't enable ads ourselves for YT videos).

Tweaking

Tweaking was a bit of a challenge, and I've had quite a puzzling experience. The tweaks on the clock frequency and memory run fine, but performance was often lower than at defaults. It seems that a new safety protection is active on the memory. A +1000 MHz offset would result in poor performance but no stability issues. Ergo, +350 to roughly +750 MHz max is currently what I'd recommend on the RTX 3080 cards. Of course, increase the power limiter to the max so your GPU gets more energy budget, and then the GPU clock can be increased anywhere from +40 to +100 MHz, but that will vary per board, brand and card. So, in the end, I expect ~20, maybe 21 Gbps (effective) on the memory subsystem, and with a +75 MHz core frequency and added power, you should see your card hovering in the 2 GHz range (which is pretty awesome).
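To put those memory data rates into perspective, here is a small Python sketch that converts an effective per-pin data rate into theoretical peak memory bandwidth. It assumes the 320-bit memory interface from NVIDIA's published RTX 3080 specifications, which is not stated on this page, and the function name is illustrative only.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int = 320) -> float:
    """Theoretical peak bandwidth in GB/s.

    data_rate_gbps: effective data rate per pin, in Gbit/s.
    bus_width_bits: memory interface width; 320 is an assumption taken
                    from NVIDIA's public RTX 3080 specifications.
    """
    if data_rate_gbps <= 0 or bus_width_bits <= 0:
        raise ValueError("data rate and bus width must be positive")
    # Total bits per second across the bus, divided by 8 bits per byte.
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    # Stock rate from this review, plus the tweaked rates expected above.
    for rate in (19.0, 20.0, 21.0):
        print(f"{rate:.0f} Gbps -> {memory_bandwidth_gb_s(rate):.0f} GB/s")
```

Under that assumption, the stock 19 Gbps works out to 760 GB/s, and every extra 1 Gbps from a memory tweak adds 40 GB/s of theoretical bandwidth. Real-world gains can be smaller, as the lower-than-default results described above suggest.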

Conclusion

We feel it is safe to say that it's been worth the wait. Ampere as an architecture is nothing short of impressive. Combined with hyper-fast GDDR6X memory and a radical new cooling design, a new trend is set, as this product is seriously competing with the board partner cards. I mean, all lights are green, including rendering performance, cooling, and acoustic performance, as well as the simple yet so crucial aesthetic feel. I do worry a little about the open fin structure versus dust. Next to that, you are going to yearn for a dedicated 12-pin power connector leading from the PSU, and there is some coil whine going on. Of course, overall power consumption has increased significantly. How important these things are to you is for you to decide. The flipside of the coin is that you'll receive a product that will be dominant in that Ultra HD space. Your games average out anywhere from 60 to 100+ FPS, well, aside from Flight Simulator 2020 :)

Dropping down in resolution does create other challenges; you'll be far less GPU bound, but then again, we do not expect you to purchase a GeForce RTX 3080 and play games at 1920x1080. Arbitrarily speaking, a monitor resolution of 2560x1440 is the domain where the GeForce RTX 3080 starts to shine. The raw Shading/rasterizer (read: regular rendered games) performance is staggering, as this many Shader cores make a difference. The new generational architecture tweaks for ray-tracing and Tensor are also significant. Coming from the RTX 2080, the RTX 3080 exhibited a roughly 85% performance increase, and that is going to bring Hybrid Ray-tracing to higher resolutions. DXR will remain massively demanding, of course, but when you can play Battlefield V in Ultra HD with ray-tracing and DLSS enabled at over 70 FPS, well hey, I'm cool with that. Also, CUDA compute performance in Blender and V-Ray, OMG! The asking price for all this render performance is USD 699, and that is the biggest GPU bottleneck for most people, especially with the upcoming consoles in the vicinity. However, there has always been a significant distinction between PC and console games; I suspect that will not be any different this time around. We bow to the Ampere architecture, as it is impressive. For those willing to spend the money on it, it's wholeheartedly recommended and an easy top pick.

- Hilbert, LOAD"*",8,1.