2 - A Crash Course on Shaders

The quick 101 - Shaders explained

Dude .. what is a shader?

To understand what is going on inside that graphics card of yours, please allow me to explain what is actually happening inside the graphics processor, and to explain shaders in very easy to understand terminology (I hope). I'll also cover how it all relates to rendering that gaming goodness on your screen (the short version).

What do we need to render a three-dimensional object as a 2D image on your monitor? We start off by building some sort of structure that has a surface, and that surface is built from triangles. Triangles are great as they are really quick and easy to compute. Now we need to process each triangle. Each triangle has to be transformed according to its position and orientation relative to the viewer.
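To make that transformation step a bit more concrete, here is a minimal Python sketch. It assumes the simplest possible case: the triangle is rotated around the Y axis and then moved to its position in front of the viewer. The function names and the sample triangle are made up for illustration; real engines use 4x4 matrices for this.

```python
import math

def transform_vertex(vertex, yaw_radians, translation):
    """Rotate a vertex around the Y axis, then translate it into view space."""
    x, y, z = vertex
    cos_a, sin_a = math.cos(yaw_radians), math.sin(yaw_radians)
    # Rotation about the Y axis only touches x and z.
    rx = cos_a * x + sin_a * z
    rz = -sin_a * x + cos_a * z
    tx, ty, tz = translation
    return (rx + tx, y + ty, rz + tz)

def transform_triangle(triangle, yaw_radians, translation):
    """Apply the same transform to all three vertices of a triangle."""
    return [transform_vertex(v, yaw_radians, translation) for v in triangle]

# Hypothetical triangle, turned 90 degrees and pushed 5 units away from the viewer.
triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
view_space = transform_triangle(triangle, math.radians(90), (0.0, 0.0, 5.0))
```

Every vertex goes through exactly the same math, which is what makes this kind of work such a good fit for dedicated hardware.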

The next step is to light the triangle by taking the transformed vertices and applying a lighting calculation for every light defined in the scene. Finally, the triangle needs to be projected to the screen in order to rasterize it. During rasterization the triangle will be shaded and textured.
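A rough Python sketch of those two steps could look like this. It assumes a basic diffuse (Lambert) lighting model with point lights and a simple perspective divide; the article does not say which models the hardware uses, so take the formulas, function names and parameters as illustrative assumptions only.

```python
import math

def normalize(v):
    """Scale a vector to length 1 (assumes the vector is not zero-length)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def light_vertex(position, normal, lights):
    """Sum a diffuse term over every light defined in the scene."""
    n = normalize(normal)
    intensity = 0.0
    for light_pos, light_strength in lights:
        to_light = normalize(tuple(l - p for l, p in zip(light_pos, position)))
        # Surfaces facing away from a light receive nothing from it.
        intensity += light_strength * max(0.0, dot(n, to_light))
    return min(intensity, 1.0)

def project(position, focal_length, width, height):
    """Perspective-project a view-space point (z > 0) to pixel coordinates."""
    x, y, z = position
    ndc_x = focal_length * x / z
    ndc_y = focal_length * y / z
    # Map the -1..1 range to pixels; screen Y grows downwards.
    return ((ndc_x + 1.0) * 0.5 * width, (1.0 - ndc_y) * 0.5 * height)

# Hypothetical scene: one light at the viewer, one behind the surface.
lights = [((0.0, 0.0, 0.0), 0.6), ((0.0, 0.0, 10.0), 0.8)]
brightness = light_vertex((0.0, 0.0, 5.0), (0.0, 0.0, -1.0), lights)
pixel = project((0.0, 0.0, 5.0), 1.0, 640, 480)
```

Only the light in front of the surface contributes here; the one behind it is clamped to zero.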

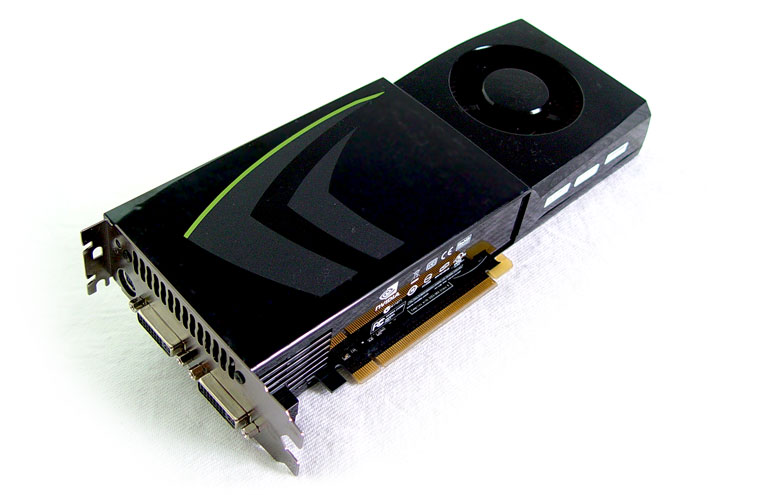

Graphics processors like the GeForce series are able to perform a large number of these tasks. Actually the first generation (say ten years ago) was able to draw shaded and textured triangles in hardware, which was a revolution.

The CPU still had the burden of feeding the graphics processor with transformed and lit vertices, triangle gradients for shading and texturing, etc. Integrating the triangle setup into the chip logic was the next step, and finally even transformation and lighting (TnL) became possible in hardware, reducing the CPU load considerably (surely everyone remembers the GeForce 256, right?).

The big disadvantage at that time was that a game programmer had no direct (i.e. program driven) control over transformation, lighting and pixel rendering because all the calculation models were fixed on the chip. This is the point in time where shader design surfaced.

We now finally get to the stage where we can explain Shaders.

In the year 2000 DirectX 8 was released, and with it vertex and pixel shaders arrived. They allowed software and game developers to program tailored transformation and lighting calculations as well as pixel coloring functionality. That brought a new graphics dimension to the gaming experience, and things started to look much more realistic.

Each shader is basically nothing more than a relatively small program (programming code) executed on the graphics processor to control vertex, pixel or geometry processing. So a shader unit is in fact a small floating point processor inside your GPU.
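To show what "a small program per pixel" means, here is a toy Python sketch. Real shaders are written in shading languages such as HLSL or GLSL and run on the GPU; the two shader functions and the `render` loop below are hypothetical stand-ins that only illustrate the idea of swapping in your own program instead of a fixed calculation.

```python
def grayscale_shader(u, v):
    """Hypothetical pixel shader: brightness follows the horizontal position."""
    return (u, u, u)

def checker_shader(u, v):
    """Hypothetical pixel shader: a 4x4 black-and-white checkerboard."""
    on = (int(u * 4) + int(v * 4)) % 2 == 0
    return (1.0, 1.0, 1.0) if on else (0.0, 0.0, 0.0)

def render(width, height, pixel_shader):
    """Run the supplied shader once per pixel.

    This loop is sequential; a GPU runs many of these invocations at once.
    """
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Sample at the pixel centre, in the 0..1 range.
            u = (x + 0.5) / width
            v = (y + 0.5) / height
            row.append(pixel_shader(u, v))
        image.append(row)
    return image

# Same renderer, two different "programs" plugged in.
gradient = render(4, 4, grayscale_shader)
checker = render(4, 4, checker_shader)
```

The renderer itself never changes; only the little function that decides the color does, and that is the part the developer gets to write.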

When we advance to the year 2002, we see the release of DirectX 9. DX9 had the advantage of allowing much longer shader programs than before, with pixel and vertex shader version 2.0.

In the past, graphics processors had dedicated units for different types of operations in the rendering pipeline, such as vertex processing and pixel shading.

Last year, with the introduction of DirectX 10, it was time to move away from the pretty inefficient fixed pipeline and create a new unified architecture.

So each time we mention a shader processor, this is one of the many shader processors inside your GPU. And when I mention a shader, that's the program executed on the shader engine (the accumulated shader processor domain).

NVIDIA likes to call these stream processors. Same idea, slightly different context. GPUs are stream processors: processors that can operate in parallel, i.e. on many independent vertices and fragments at once. A stream is simply a set of records that require similar computation.
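The stream idea can be sketched in a few lines of Python. The `kernel` function and the sample records are made up for illustration, and the thread pool is just a stand-in for a row of shader processors; Python threads will not give you GPU-like speed, so the point here is only that the records are independent of each other.

```python
from concurrent.futures import ThreadPoolExecutor

def kernel(record):
    """The same small computation, applied to every record in the stream."""
    x, y, z = record
    return (x * 2.0, y * 2.0, z * 2.0)

def process_stream(stream, workers=4):
    """Hand the records to a pool of workers.

    No record depends on another, so they can be handled in any order;
    map() still returns the results in input order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(kernel, stream))

# A hypothetical stream of eight vertex-like records.
stream = [(float(i), 0.0, 1.0) for i in range(8)]
results = process_stream(stream)
```

Because the result is the same as running `kernel` over the records one at a time, you can throw as many processors at the stream as you have available.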

Okay, we now have several types of shaders: pixel, vertex and geometry, and with the coming of DX11 likely a compute shader, which is a pretty cool one, as you can run physics acceleration without needing to go through a graphics API. Fun thing, this is already possible through the CUDA engine, but we'll talk about that later on.

There. I do hope you now understand the concept of shaders and shader processors. Let's talk a little about the GeForce series 200, shall we?