3 - Less cheap, RAID (tested)

Going RAID on a CF - The AD4CFPRJ Quad-CF PCI Controller

Obviously speed matters a lot; next to price, it's the big weakness of flash memory. While the seek and access times of a CF card are really awesome, the overall read/write performance compared to a normal HDD is a little silly. Only the most expensive professional SSDs, topping 1000 to 1500 USD, offer really good read/write speed.

But is there a way to save a lot of money yet still gain some speed? Yes, of course. Since CF uses the IDE interface, we can opt to purchase a little controller that supports RAID. The model we bought was 45 EUR, really cheap, yet it does have certain limitations. But hey, we wanted to try it for the sake of my pioneering spirit. This model can only manage 2 CF cards at Mode 1 and another 2 at Mode 2 [Ed - Everyone remembers page one right?]. That's tricky performance-wise, as usually everything downgrades in speed for compatibility. We purchased 2x v2.0 and 2x cheapo v1.0 cards to see how bad the effect of combining slow v1.0 memory with faster v2.0 memory really is.

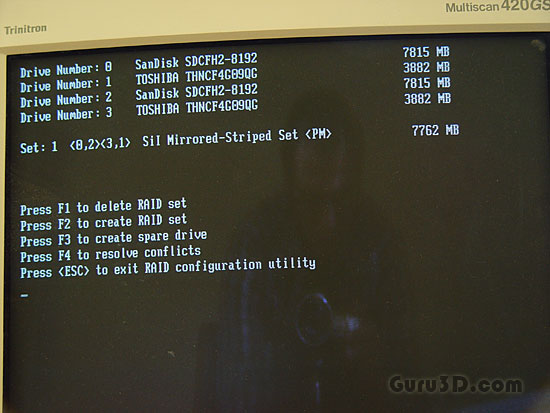

First we plug in the controller and boot up.

After the BIOS POST we press F3 to enter the SIL controller menu, where we can very easily select the RAID mode we want. We spot that all our CFs are recognized: two slow v1.0 4 GB cards and two faster v2.0 8 GB cards.

The controller allows RAID 0 (striping, good performance!), RAID 1 (mirroring, a 1:1 copy as fail-safe) and, here's the kicker, RAID 10 (modes 0 and 1 combined). We obviously choose RAID 10.

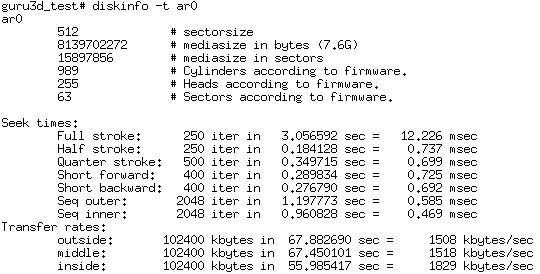

We again pop in the FreeBSD installation disk and see at the partitioning step that a new RAID array has indeed been built. Nice, let's retest. Remember, we are now mirroring and striping, yet with two CF v1.0 cards in the array, which should give us a definite negative hit on overall performance. CF v1.0 is dirt slow ... in this first test we'll see if the entire write performance goes down by mixing the two (1.0 / 2.0) standards:

Woaaah ... and so it did. Performance went down by a factor of 10 to 15, terrible to be honest. I certainly expected more than this considering we are striping as well. So the two CF v1.0 cards are useless, as they kill performance. All cards have effectively reverted to CF v1.0 speed ... you do not want that at all.

[Ed - Not entirely unexpected though. RAID 0, 0+1 or any other configuration is limited by the slowest drive.]
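The editor's point can be put into a few lines of Python. This is only a rough sketch of the usual "slowest member" rule of thumb, not a model of this particular controller: the function name `ideal_write_bound` is made up for illustration, and the two speeds are placeholder values, not numbers measured in this test.

```python
def ideal_write_bound(level, speeds_mb_s):
    """Best-case sequential write rate (MB/s) under the slowest-member model.

    Assumption: every member is held to the pace of the slowest card,
    and the controller itself adds no overhead.
    """
    n = len(speeds_mb_s)
    if n < 2:
        raise ValueError("an array needs at least two members")
    slowest = min(speeds_mb_s)
    if level == "raid0":
        # Data is split over all members, so the rates add up.
        return n * slowest
    if level == "raid1":
        # Every member writes the full data, so there is no gain.
        return slowest
    if level == "raid10":
        if n < 4 or n % 2:
            raise ValueError("RAID 10 needs an even number of members, at least four")
        # Half of the members hold mirror copies; only n/2 stripes carry new data.
        return (n // 2) * slowest
    raise ValueError(f"unknown RAID level: {level}")


# Placeholder speeds in MB/s, NOT measurements from this article.
SLOW, FAST = 5.0, 20.0

mixed = ideal_write_bound("raid10", [SLOW, SLOW, FAST, FAST])
fast_only = ideal_write_bound("raid0", [FAST, FAST])
print(mixed, fast_only)  # 10.0 40.0
```

Even in this best case the mixed array is capped by the slow cards, and a real controller will land below the bound rather than on it.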

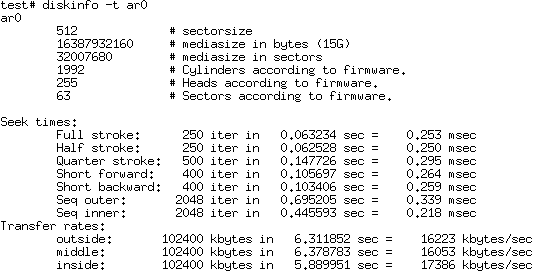

We need to drop RAID 10, remove the two slow v1.0 CFs again, and use only the two faster v2.0 CFs, striped in RAID 0. This way we'll achieve optimal speed and combine the 2x 8 GB cards into one 16 GB disk array. Now let's run the test again:

Above you can tell the difference: we have 16 GB at our disposal, and though the seek times are even faster, the performance remains roughly the same as with a single v2.0 CF; we see only a small increase. But this was as expected with v2.0 CF cards. The good thing here is that we are striping: if you took two 32 GB CF cards, you could build one 64 GB array / partition. The overall slowdown has a lot to do with the controller; in the server world we call it fake-RAID. This is not a pure, 100% dedicated RAID controller. But we did get a driver CD with the controller .. with optimized Windows drivers. Coooool, next step then.
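For anyone wanting to plan their own card combination, the capacity arithmetic used above can be sketched in Python. It assumes the common rule that each member only contributes as much space as the smallest card in the array; whether this exact controller behaves that way with mixed sizes was not verified here, and `usable_capacity_gb` is a hypothetical helper name.

```python
def usable_capacity_gb(level, sizes_gb):
    """Usable array size in GB, assuming each member is cut to the smallest card."""
    n = len(sizes_gb)
    if n < 2:
        raise ValueError("an array needs at least two members")
    smallest = min(sizes_gb)
    if level == "raid0":
        # Striping: all members add their (trimmed) capacity.
        return n * smallest
    if level == "raid1":
        # Mirroring: every member holds the same data.
        return smallest
    if level == "raid10":
        if n < 4 or n % 2:
            raise ValueError("RAID 10 needs an even number of members, at least four")
        # Half of the members are mirror copies.
        return (n // 2) * smallest
    raise ValueError(f"unknown RAID level: {level}")


print(usable_capacity_gb("raid0", [8, 8]))         # 16, the array built above
print(usable_capacity_gb("raid0", [32, 32]))       # 64, the two 32 GB card example
print(usable_capacity_gb("raid10", [4, 4, 8, 8]))  # 8 under this assumption
```

Under this rule the mixed four-card RAID 10 would offer less space than the two fast cards striped on their own, which is one more reason to leave the v1.0 cards out.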