Page 17 - ShaderMark 2.0

ShaderMark 2.0: DirectX 9 Pixel and Vertex Shader 2.0 performance

To measure pure DirectX 9 Shader 2.0 performance, we make use of ShaderMark 2.0, supplied to us by Thomas Bruckschlegel, the programmer of this software. ShaderMark 2.0 is a DirectX 9.0 pixel shader benchmark. All pixel and vertex shader code is written in Microsoft's High Level Shading Language (HLSL). ShaderMark makes it possible to use different compiler targets and advanced options. Currently there is no other DirectX 9.0 HLSL pixel shader benchmark on the market: Futuremark's 3DMark03 (www.futuremark.com) and Massive's AquaMark 3.0 (www.aquamark.com) are based on hand-written assembler shaders or only partly on HLSL shaders. HLSL is the future of shader development! The HLSL shader compiler and its different profiles have to be tested, and ShaderMark v2.0 fills this gap. Driver cheating is also an issue. With ShaderMark, it is easy to change the underlying HLSL shader code (registered version only), which makes it impossible to optimize a driver for one particular shader rather than for the whole shader pipeline. The ANTI-DETECT-MODE provides an easy way for non-HLSL programmers to test whether special optimizations are in the drivers.

You can download ShaderMark here.

The software tests the following Shader techniques:

Shaders

- (shader 1) ps1.1, ps1.4 and ps2.0 precision test (exponent + mantissa bits)

- (shader 2) directional diffuse lighting

- (shader 3) directional phong lighting

- (shader 4) point phong lighting

- (shader 5) spot phong lighting

- (shader 6) directional anisotropic lighting

- (shader 7) fresnel reflections

- (shader 8) BRDF-phong/anisotropic lighting

- (shader 9) car paint shader (multiple layers)

- (shader 10) environment mapping

- (shader 11) bump environment mapping

- (shader 12) bump mapping with phong lighting

- (shader 13) self shadowing bump mapping with phong lighting

- (shader 14) procedural stone shader

- (shader 15) procedural wood shader

- (shader 16) procedural tile shader

- (shader 17) fur shader (shells+fins)

- (shader 18) refraction and reflection shader with phong lighting

- (shader 19) dual layer shadow map with 3x3 bilinear percentage closer filter

- glare effect shader with ghosting and blue shift (HDR)

  - glare types: (shader 20) cross and (shader 21) gaussian

- non photorealistic rendering (NPR) 2 different shaders

  - (shader 22) outline rendering + hatching

    - two simultaneous render targets (edge detection through normals + tex ID and regular image) or two pass version

    - per pixel hatching with 6 hatching textures

  - (shader 23) water colour like rendering

    - summed area tables (SAT)

To understand this test, I'd better first explain vertex and pixel lighting. Vertex and pixel shader programming allows graphics and game developers to create photorealistic graphics on the PC. With DirectX, programmers have access to an assembly language interface to the transformation and lighting hardware (vertex shaders) and the pixel pipeline (pixel shaders).

All real-time 3D graphics are built from component triangles. Each of the three vertices of every triangle contains information about its position, color, lighting, texture, and other parameters. This information is used to construct the scene. The lighting effects used in 3D graphics have a large effect on the quality, realism, and complexity of the graphics, and the amount of computing power used to produce them. It is possible to generate lighting effects in a dynamic, as-you-watch manner.
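To make the idea of per-vertex lighting a bit more concrete, here is a minimal sketch in Python of directional diffuse lighting evaluated at a single vertex (the same family of effect as shader 2 in the list above). This is not ShaderMark's HLSL code; the `Vertex` class and the function names are hypothetical and only illustrate the principle, assuming a simple Lambert term (the dot product of the surface normal and the light direction, clamped at zero).

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Vertex:
    # Hypothetical per-vertex data: position, surface normal and base color.
    position: Vec3
    normal: Vec3
    color: Vec3


def diffuse_at_vertex(vertex: Vertex, to_light: Vec3) -> Vec3:
    """Lambert diffuse term: base color scaled by max(0, N . L).

    `to_light` points from the surface toward the light source.
    """
    n = normalize(vertex.normal)
    l = normalize(to_light)
    intensity = max(0.0, dot(n, l))
    return (vertex.color[0] * intensity,
            vertex.color[1] * intensity,
            vertex.color[2] * intensity)
```

A vertex facing the light keeps its full color, while a vertex facing away ends up black. A pixel shader does the same kind of math, but for every pixel instead of every vertex, which is why it costs so much more computing power.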

The Achilles heel of the entire NV3x-based GeForce FX series remains pure DirectX 9 shader performance. Pixel Shader and Vertex Shader 2.0 turn out to be a bottleneck in games that use them heavily. It has to do with precision: DX9 specifies 24-bit, and ATI uses that. NVIDIA went for 16-bit and 32-bit precision, which has a huge impact on performance. ATI opted for the better solution and thus has the best performance in this specific feature, period.
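To get a feel for what these precision levels mean in practice, the sketch below rounds a value to the number of mantissa bits each format offers. The bit counts are my assumption and are not stated in this article: 10 mantissa bits for 16-bit floats, 16 for ATI's 24-bit format and 23 for 32-bit floats. The helper `round_to_mantissa` is hypothetical and ignores exponent range limits and denormals, so treat it as an illustration of rounding error only.

```python
import math

# Assumed explicit mantissa (fraction) bits per format; not taken from the article.
MANTISSA_BITS = {"FP16": 10, "FP24": 16, "FP32": 23}


def round_to_mantissa(x: float, mantissa_bits: int) -> float:
    """Round x to the nearest value representable with `mantissa_bits`
    fraction bits plus one implicit leading bit.

    Exponent range, infinities and denormals are deliberately ignored.
    """
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)              # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mantissa_bits + 1)  # total significant bits
    return math.ldexp(round(m * scale) / scale, e)


def rounding_error(x: float, mantissa_bits: int) -> float:
    """Absolute error introduced by rounding x to the given precision."""
    return abs(round_to_mantissa(x, mantissa_bits) - x)


if __name__ == "__main__":
    for name, bits in MANTISSA_BITS.items():
        print(name, round_to_mantissa(0.1, bits), rounding_error(0.1, bits))
```

Each extra mantissa bit halves the worst-case rounding error, so under these assumptions the 24-bit format sits much closer to 32-bit than to 16-bit in accuracy. That is the trade-off NVIDIA faces: 16-bit is fast but coarse, 32-bit is accurate but slow.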

NVIDIA is working hard on it, though. But enough talk.

With recent builds of the Detonator drivers, NVIDIA definitely found a way to boost Shader 2.0 performance: they enhanced and optimized Vertex and Pixel Shader 2.0 with the help of a real-time shader compiler.

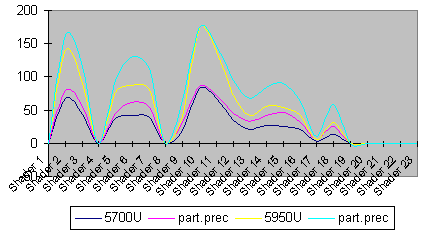

The fact is, performance is still nowhere near the competition. Now take a look at this chart:

| ShaderMark 2.0 | 5700U | 5700U part. prec. | 5950U | 5950U part. prec. |
| --- | --- | --- | --- | --- |
| Shader 1 | 0 | 0 | 0 | 0 |
| Shader 2 | 67 | 79 | 140 | 163 |
| Shader 3 | 44 | 56 | 90 | 116 |
| Shader 4 | 0 | 0 | 0 | 0 |
| Shader 5 | 38 | 45 | 77 | 94 |
| Shader 6 | 42 | 62 | 87 | 130 |
| Shader 7 | 39 | 54 | 81 | 112 |
| Shader 8 | 0 | 0 | 0 | 0 |
| Shader 9 | 19 | 31 | 40 | 64 |
| Shader 10 | 83 | 85 | 173 | 174 |
| Shader 11 | 68 | 72 | 139 | 148 |
| Shader 12 | 35 | 45 | 72 | 94 |
| Shader 13 | 21 | 33 | 42 | 68 |
| Shader 14 | 27 | 42 | 56 | 84 |
| Shader 15 | 26 | 46 | 54 | 90 |
| Shader 16 | 21 | 32 | 42 | 63 |
| Shader 17 | 3 | 5 | 6 | 10 |
| Shader 18 | 13 | 26 | 32 | 59 |
| Shader 19 | 0 | 0 | 0 | 0 |
| Shader 20 | 0 | 0 | 0 | 0 |
| Shader 21 | 0 | 0 | 0 | 0 |
| Shader 22 | 0 | 0 | 0 | 0 |
| Shader 23 | 0 | 0 | 0 | 0 |

Partial precision is what NVIDIA is aiming for at this moment. Basically, the API will use both 32-bit and 16-bit precision (partial precision), which is a compromise, yet performance-wise this might be the best option. In all honesty, I doubt NVIDIA can get Shader 2.0 performance up to a level that can be compared with the competition within its current generation. Luckily for them there are not too many pure DX9 titles out, as the NV3x range will definitely suffer from this issue. I also fail to see why several DX9 shader operations are still not functioning while the competition clearly can do it. One thing is a fact though: NVIDIA really did improve overall shader performance with the new Detonator drivers, yet it is nowhere near ATI's solution.

This is basically what NVIDIA thinks about shaders:
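How much partial precision actually buys can be worked out from the chart above. The sketch below computes the percentage gain per shader for both cards; the scores are copied from the chart, rows that score 0 everywhere are left out, and the names `SCORES`, `gain_percent` and `gains` are mine, purely for illustration.

```python
# Scores copied from the chart:
# (5700U, 5700U part. prec., 5950U, 5950U part. prec.).
# Rows where every score is 0 are omitted.
SCORES = {
    2: (67, 79, 140, 163),
    3: (44, 56, 90, 116),
    5: (38, 45, 77, 94),
    6: (42, 62, 87, 130),
    7: (39, 54, 81, 112),
    9: (19, 31, 40, 64),
    10: (83, 85, 173, 174),
    11: (68, 72, 139, 148),
    12: (35, 45, 72, 94),
    13: (21, 33, 42, 68),
    14: (27, 42, 56, 84),
    15: (26, 46, 54, 90),
    16: (21, 32, 42, 63),
    17: (3, 5, 6, 10),
    18: (13, 26, 32, 59),
}


def gain_percent(full: float, partial: float) -> float:
    """Relative gain of the partial-precision score over the full-precision one."""
    return (partial - full) / full * 100.0


def gains(scores=SCORES):
    """Map shader number -> (gain on 5700U, gain on 5950U), both in percent."""
    return {
        shader: (gain_percent(a, b), gain_percent(c, d))
        for shader, (a, b, c, d) in scores.items()
    }


if __name__ == "__main__":
    for shader, (g5700, g5950) in gains().items():
        print(f"Shader {shader:2d}: 5700U {g5700:+6.1f}%   5950U {g5950:+6.1f}%")
```

The spread is large: environment mapping (Shader 10) gains only about 2% on the 5700U, while Shader 18 doubles its score on the same card.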

NVIDIA's current line of cards based on the GeForce FX architecture attempts to maximize high-end graphics performance by supporting both 16-bit and 32-bit per-color-channel shaders--most DirectX 9-based games use a combination of 16-bit and 32-bit calculations, since the former provides speed at the cost of inflexibility, while the latter provides a greater level of programming control at the cost of processing cycles. The panel went on to explain that 24-bit calculations, such as those used by the Radeon 9800's pixel shaders, often aren't enough for more-complex calculations, which can require 32-bit math.

The GeForce FX architecture favors long shaders and textures interleaved in pairs, while the Radeon 9800 architecture favors short shaders and textures in blocks.

Enough Shader talk for one page, let's round everything up in the conclusion.