Graphics memory (VRAM) usage and CPU scaling

Graphics memory (VRAM) usage

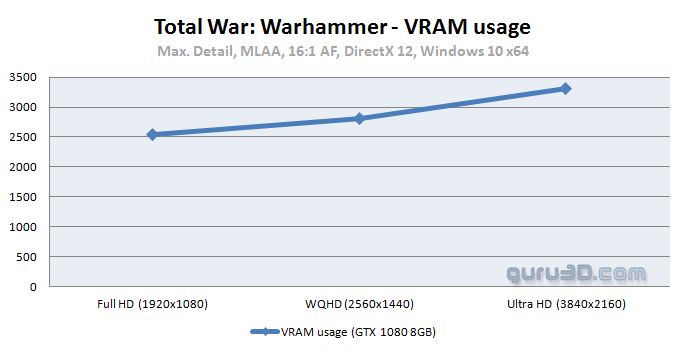

How much graphics memory does the game use at each monitor resolution, across different graphics cards and their respective VRAM sizes? Well, let's have a look at the chart above. The listed MBs are the maximum graphics memory utilization measured during the test run. During gameplay the game is constantly swapping and loading data. As such, in most scenarios you'll notice your VRAM fills up, then gets emptied chunk by chunk, and then fills again. The VRAM numbers we monitored therefore continuously jump up and down.
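To make the "maximum measured" idea concrete, here is a minimal sketch of how a peak figure can be pulled from a series of VRAM readings taken during a run. The function name `summarize_vram` and the sample readings are hypothetical and purely illustrative; they are not measurements from this test.

```python
from typing import Dict, Iterable


def summarize_vram(samples_mb: Iterable[float]) -> Dict[str, float]:
    """Return the peak, the minimum and the swing of a series of VRAM readings (in MB)."""
    samples = list(samples_mb)
    if not samples:
        raise ValueError("need at least one VRAM sample")
    peak = max(samples)
    low = min(samples)
    return {"peak_mb": peak, "min_mb": low, "swing_mb": peak - low}


# Hypothetical readings (MB) that fill up, drain and fill again.
# These are illustrative values only, not numbers from the chart.
readings = [2100, 2850, 3020, 2400, 2950, 3100, 2300]
summary = summarize_vram(readings)
print(summary)
```

The peak is what ends up in a chart like the one above; the swing simply shows how far the readings move as the game loads and unloads data.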

What I can conclude is that the title is reasonably graphics memory hungry when you use the best image quality settings. Really, for Ultra quality up to 1920x1080, ~3GB is the minimum; 4GB cards seem sufficient in any condition, and that amount of VRAM is becoming the norm these days. At Ultra quality settings at 2560x1440 (WQHD), a 4GB card is a requirement if you want to game at the best image quality settings. If you are lacking graphics memory and feel that in your performance, simply dial down AA (if you have it enabled) and watch your graphics card memory free itself.

Processor scaling analysis

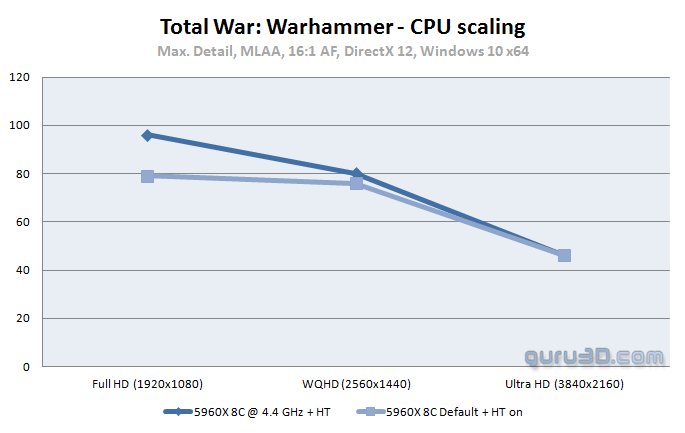

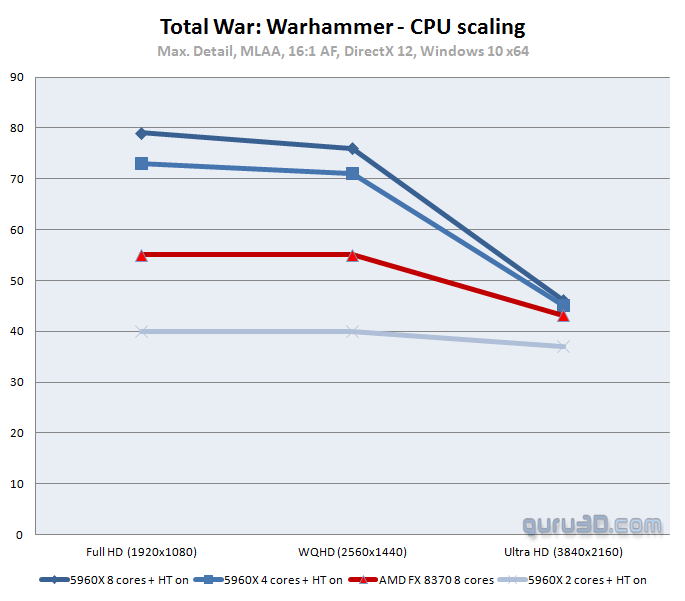

CPU frequency vs performance - In this segment we're going to look at several scenarios with the processor as the primary focus. And yeah, Warhammer, much to my surprise, is INCREDIBLY dependent on CPU core clock frequency, but more importantly .. on the number of CPU cores. It is totally not what you'd expect from a DX12 title, as a lesser processor should benefit from all the DX12 goodness. But follow me, as the results here are pretty darn shocking. In the first chart above we have our default test system: a Core i7 5960X processor, which has 8 CPU cores and is hyper-threaded (HT) to 16 threads. As for frequency scaling, our setup always runs overclocked at 4.4 GHz on all eight cores; that's the upper dark blue line. It is also the configuration we test all graphics cards at.

However, prior to testing all graphics cards I recorded a video on a Core i7 4790K (4.4 GHz on all four cores) with a GeForce GTX 970 (the video shown two pages ago). I was rather surprised to see the difference in performance between the 4-core and 8-core systems, and I got curious, as the Core i7 4790K system simply produced noticeably slower framerates.

First off, above is the game in Full HD, WQHD and Ultra HD (X-axis) versus average framerate. As you can see, the 4.4 GHz overclock makes a substantial difference in performance up to 2560x1440; after that, yes, even the GTX 1080 gets GPU bound. A default-clocked 8-core Core i7 5960X runs at a 3.0 GHz base clock, but turbos to 3.5 GHz. This result by itself is (well, I find it to be) pretty staggering, as you do not see it a lot in games these days.
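One way to put a number on "CPU bound versus GPU bound" is to compare the framerate gain against the clock gain. The sketch below assumes the 3.5 GHz turbo as the stock clock and 4.4 GHz as the overclock (both from the text); the function name `scaling_efficiency` and all framerate values are hypothetical placeholders, not results from the chart.

```python
def scaling_efficiency(fps_base: float, fps_oc: float,
                       clock_base_ghz: float, clock_oc_ghz: float) -> float:
    """Fraction of the clock increase that shows up as extra framerate.

    Close to 1.0 means performance follows the CPU clock (CPU bound),
    close to 0.0 means the extra clock does nothing (GPU bound).
    """
    if min(fps_base, fps_oc, clock_base_ghz, clock_oc_ghz) <= 0:
        raise ValueError("all inputs must be positive")
    clock_gain = clock_oc_ghz / clock_base_ghz - 1.0
    if clock_gain == 0:
        raise ValueError("the two clocks must differ")
    fps_gain = fps_oc / fps_base - 1.0
    return fps_gain / clock_gain


# Clocks from the article; framerates are illustrative placeholders only.
STOCK_GHZ, OC_GHZ = 3.5, 4.4
cpu_bound = scaling_efficiency(70.0, 84.0, STOCK_GHZ, OC_GHZ)   # hypothetical low-resolution case
gpu_bound = scaling_efficiency(40.0, 40.4, STOCK_GHZ, OC_GHZ)   # hypothetical Ultra HD case
print(f"low resolution: {cpu_bound:.2f}, Ultra HD: {gpu_bound:.2f}")
```

Note that 3.5 GHz is the maximum turbo; the clock the chip actually sustains on all cores may be lower, so treat the ratio as a rough indicator rather than an exact figure.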

But then ..

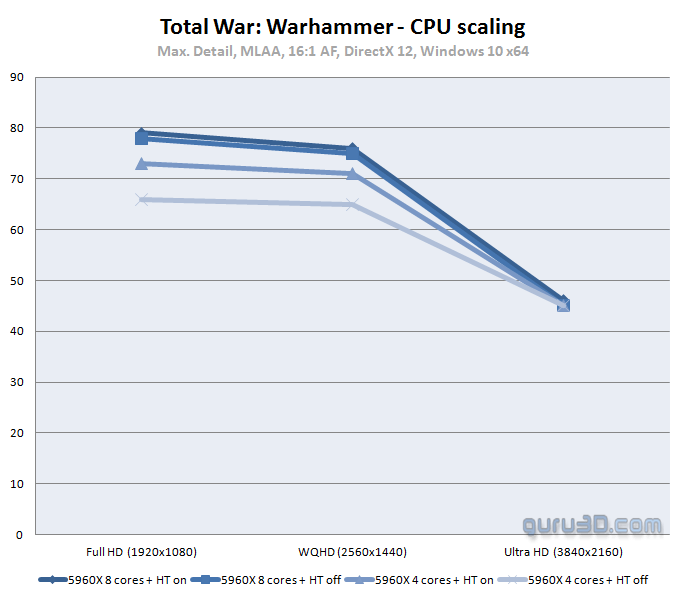

So the 5960X is a very flexible processor; we can enable and disable cores and hyper-threading. Now, with a game anno 2016 you really would not expect much of a difference in framerates between a hyper-threaded 8-core and 4-core system. But a difference is exactly what I noticed when I was recording that video: the gap between an 8-core and a 4-core setup is really substantial.

- So the upper two lines are the default-clocked 5960X with 8 cores active, one with HT on, the other with HT off.

- The lower two lines are the default-clocked 5960X with 4 cores active, one with HT on, the other with HT off.

On eight cores, HT on/off doesn't make much of a difference. Moving to a similarly clocked 4-core configuration, you'll notice that once we turn off HT, the performance drops. Also, the difference between 4 and 8 cores really is substantial.

Next ..

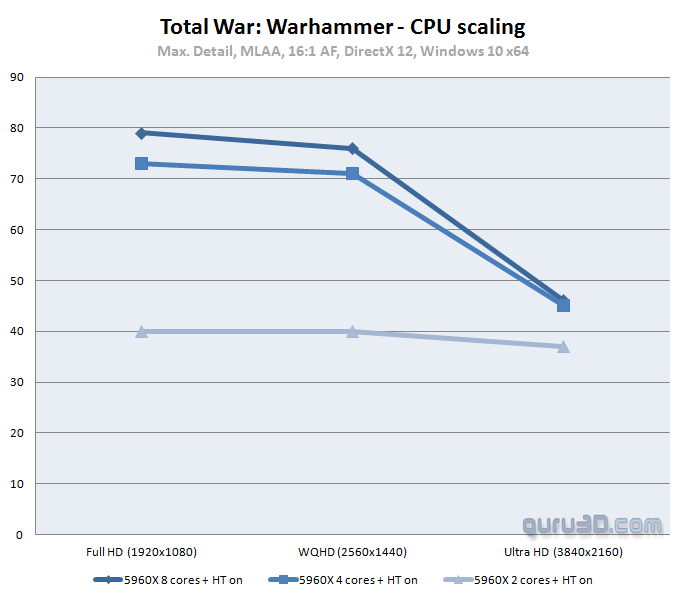

So I created one more scenario: let's imagine that all processors in the world are hyper-threaded. Again, it is the same processor at the same default frequency, yet now we scale from 8 to 4 to 2 active CPU cores. Amazing, isn't it? This game really requires four cores, and it's a notch better when they are hyper-threaded. Obviously the game has massive amounts of objects in its scenes, hence a lot of processing power is needed. But isn't this really where DX12 should help out?

How's AMD holding up?

So with that in mind I was also curious: would a 1000 USD 8-core Intel Core i7 5960X, in a title like Warhammer, be slaughtering an AMD FX 8370 worth less than 200 bucks with its 8 cores? Ehm, no. Above are the results; the red line is the AMD FX 8370. Remember, if a game is CPU intensive and both frequency and core count matter, then a CPU that per core is half as fast as Intel's isn't going to cut it. But value-for-money wise, that story would be completely different. BTW, in the above chart the 5960X is clocked at its default 3.0/3.5 GHz with different numbers of cores activated, and the AMD FX 8370 runs at its default 4.0/4.3 GHz.
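The value-for-money remark can be sketched as framerate per dollar. The prices below follow the rough figures in the text (1000 USD, and 200 USD as an upper bound for "less than 200 bucks"); the framerates and the helper `fps_per_dollar` are hypothetical placeholders, so read the real values off the chart before drawing any conclusion.

```python
def fps_per_dollar(avg_fps: float, price_usd: float) -> float:
    """Average framerate delivered per dollar spent on the CPU."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return avg_fps / price_usd


# Prices approximated from the article; framerates are placeholders, NOT measured results.
PRICE_USD = {"Core i7 5960X": 1000.0, "FX 8370": 200.0}
PLACEHOLDER_FPS = {"Core i7 5960X": 80.0, "FX 8370": 50.0}

value = {cpu: fps_per_dollar(PLACEHOLDER_FPS[cpu], PRICE_USD[cpu]) for cpu in PRICE_USD}
for cpu, fps_usd in value.items():
    print(f"{cpu}: {fps_usd:.3f} fps per USD")
```

With a fivefold price gap, the cheaper chip only needs more than a fifth of the framerate to come out ahead on this metric, which is why the value story can differ so much from the raw performance story.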

Concluding

Warhammer is a title you need to like; turn-based strategy is certainly not my thing, hence thanks to Creative Assembly and Sega for building a proper benchmark. But many people like this genre, and yeah, the graphics are looking pretty okay. This is an AMD title, and the benchmark does enforce mandatory MLAA, as MSAA is mysteriously missing. And yes, MLAA is better suited (well, optimized) for AMD cards. So from our point of view there is a somewhat unfair performance benefit for AMD; it's the reality anno 2016, I'm afraid. Regardless, any decent graphics card will have no problem running the game, and for the ones that cannot run DX12, well .. there's also DX11 mode of course. We obtained a special DirectX 12 benchmark build for Total War: WARHAMMER. The game is expected to support DirectX 12 once a patch is released, scheduled for June.

That's it for this update, you guys. I might revisit Crossfire / SLI soon and add the numbers as well.

- Hilbert
