FreeSync 2 - Local Dimming and HDR

FreeSync

Back in 2016 AMD announced HDR support for its graphics cards, which later carried over into FreeSync 2. Basically, FreeSync 2 combines FreeSync with HDR, evolving that tearing, stutter and lag-free gaming experience into HDR. So a FreeSync 2 certified monitor ticks these boxes; the '2' refers to an HDR-ready screen. Unfortunately, it's a jungle out there in monitor land, as even a screen with a 300 nits peak brightness can be tagged with an HDR label these days. Always look for HDR10, 10-bit or 8-bit+FRC support and at least 600 nits on the box. VESA actually has a nice certification program for that: if you see a VESA DisplayHDR 600 label on the box, you know the screen can handle a 600 nit peak brightness. The Samsung C32HG70 offers all that, with 144 Hz being FreeSync compatible; the latest firmware update, however, made it a FreeSync 2 certified panel. The 32-inch model is FreeSync 2 certified from firmware 1013, the 27-inch version from firmware 1012.

| FreeSync Mode | Interface | Refresh Range |
| --- | --- | --- |
| Standard Mode | DisplayPort | 120 - 144Hz |
| Standard Mode | HDMI | 80 - 100Hz |
| Ultimate Mode | DisplayPort | 72 - 144Hz (48 - 144Hz with the latest firmware) |
| Ultimate Mode | HDMI | 50 - 100Hz |

As you can see in the above table, FreeSync is supported over both the DisplayPort and HDMI interfaces, however, each with a different frequency range. FreeSync needs to be enabled via the OSD menu. Interestingly enough, the FreeSync range of the screen varies depending on which FreeSync mode you choose in the OSD menu; there are two available: 'Standard' and 'Ultimate'. The Ultimate mode for DisplayPort gives you a wider range in which it can operate and, as such, it is the recommended option if you are going to use FreeSync. The Standard mode is more limited in its dynamic range. If, for whatever reason, you have any issues with flicker or other oddities in Ultimate mode, we recommend that you revert to the more limited Standard mode. In Ultimate mode on DP you get a range of 72 to 144 Hz. Outside of that range, and thus below 72 Hz, FreeSync doesn't work; however, AMD LFC (Low Framerate Compensation) is an intermediate solution for the lower framerates. We'll discuss LFC in the last chapter on this page.
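To make the mode and interface combinations a bit more tangible, here is a minimal Python sketch that looks up the range from the table and checks whether a given framerate falls inside it. The names `FREESYNC_RANGES` and `in_freesync_range` are made up for this illustration, and the DisplayPort Ultimate entry uses the 72 Hz floor quoted in the text.

```python
# Refresh ranges in Hz, copied from the table above. The DisplayPort
# Ultimate entry uses the 72 Hz floor quoted in the text.
# The names below are hypothetical, chosen only for this illustration.
FREESYNC_RANGES = {
    ("Standard", "DisplayPort"): (120, 144),
    ("Standard", "HDMI"): (80, 100),
    ("Ultimate", "DisplayPort"): (72, 144),
    ("Ultimate", "HDMI"): (50, 100),
}


def in_freesync_range(mode, interface, fps):
    """Return True if fps falls inside the variable refresh window."""
    low, high = FREESYNC_RANGES[(mode, interface)]
    return low <= fps <= high


if __name__ == "__main__":
    for key in FREESYNC_RANGES:
        print(key, in_freesync_range(key[0], key[1], 90))
```

At 90 FPS only the two HDMI modes and DisplayPort Ultimate stay inside their window, which illustrates why the Standard mode on DisplayPort is the more limited choice.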

Update:

There have been a number of firmware updates for the Samsung monitors. Please make sure you upgrade the monitor firmware to 1019.1 for the 27" model and 1016.2 for the 32" model. With these updates we got most game titles working properly in HDR10. Also, as of the latest update the new FreeSync range in DP Ultimate mode is 48-144Hz (!)

Multi-Zone Local Dimming and HDR

Over the years we have seen many LCD technologies in relation to backlighting. Traditional lighting methods simply are not sufficient anymore for HDR, as the peak brightness (a bright LED) can and will cause side effects. If you just have a backlit LCD screen or edge LED lighting, the tremendous amount of brightness needed for HDR will create a lot of blooming. This is also referred to as 'inhomogeneous backlighting', and it is one of LCD technology's fundamental problems. Please have a look at the example photo below:

Above, you can see that blooming effect; you may as well call it a halo. With an edge-lit screen the LEDs light up portions of the screen they shouldn't and, as such, light 'bleeds' out a bit. OLED solved that, as each pixel is controlled and lit by itself; it does not use any form of LED (back/edge) lighting. That's also why black is black on OLED: pure black simply means turning the pixel off, creating excellent deep contrast levels. However, OLED as a technology is never going to make it into gaming monitors: even the best Ultra HD TVs are now starting to suffer from screen burn-in, and more and more reports are surfacing on the web. With a lot of static imagery on a console or PC, OLED simply is not an option.

While still not as optimal as OLED, the industry has been adopting a technology called 'Full-Array Local Dimming'. The methodology is very similar to the way LCD TVs were configured back in the day, but instead of a CCFL backlight it now uses LED backlights. The term 'full-array' means that there are LED zones spread out over the entire backside of the LCD panel. And here technology is advancing, combining Quantum Dot LCD screens with large arrays of backside LEDs. The big advantage is that the panel can light up just the portion of the screen that needs to be lit, keeping other parts of the scene switched off and thus totally dark. That creates deep contrast levels, and peak brightness where needed.

This technology is advancing rapidly in UHD/HDR televisions; the new Samsung Q9FN (2018 model) Ultra HD TV, for example, uses somewhere between 400 and 500 local dimming zones. I just tested such a telly, and yes... black is black, with very little blooming. It is a TV series that comes very close to OLED. Full-Array Local Dimming has now made its way to gaming PC monitors, and quite frankly we feel it is the future of HDR panels for gaming monitors. The Samsung C32HG70 has Full-Array Local Dimming, however with just 8 zones, which is not a lot compared to that Q9FN I just mentioned.

HDR10 Support for gaming

While HDR has nothing to do with FreeSync revision 1, it is now housed under the revision 2 tag. Better pixels, a wider color space and more contrast will result in more interesting content on that screen of yours. FreeSync 2 has HDR support built in; it is a requirement for any display panel with the label to offer full 10bpc support. High dynamic range reproduces a greater dynamic range of luminosity than is possible with standard digital imaging. We measure this in nits, and the number of nits for UHD screens and monitors is going up.

What's a nit?

The brightness of a candle spread over one square meter is 1 nit, also expressed as one candela per square meter (cd/m2). The sun is 1,600,000,000 nits, typical objects range from 1 to 250 nits, current PC displays offer 1 to 250 nits, and excellent non-HDR HDTVs offer 350 to 400 nits. An HDR OLED screen is capable of 500 to maybe 700 nits for the best models, and here it gets more important: HDR enabled screens will go towards 1000 nits with the latest LCD technologies. HDR allows high nit values to be used. HDR started to be implemented for PC gaming back in 2016; Hollywood, of course, already has end-to-end content ready. As consumers start to demand higher-quality monitors, HDR technology is emerging to set an excitingly high bar for overall display quality.

Good HDR capable panels are characterized by:

- A luminance between 600 and 1200 cd/m2; the industry goal is to reach 1000 to 2000

- Contrast ratios that closely mirror human visual sensitivity to contrast (SMPTE 2084)

- A DCI-P3 and/or Rec.2020 color gamut, which can produce over 1 billion colors at 10 bits per color channel
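Two of the figures in this list can be checked with a little arithmetic. The Python sketch below counts the colors at a given bit depth and implements the SMPTE ST 2084 (PQ) curve that maps absolute luminance in nits onto a 0..1 signal. The function names are our own choice for this illustration; the constants are the ones published in the standard.

```python
# SMPTE ST 2084 (PQ) constants, as published in the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0  # PQ is defined up to 10,000 nits


def color_count(bits_per_channel, channels=3):
    """Number of distinct colors for a given bit depth per channel."""
    return 2 ** (bits_per_channel * channels)


def pq_encode(nits):
    """Map absolute luminance in nits to a PQ signal value in 0..1."""
    y = max(nits, 0.0) / PQ_PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1 + C3 * y_m1)) ** M2


def pq_decode(signal):
    """Inverse of pq_encode: PQ signal value (0..1) back to nits."""
    e = signal ** (1 / M2)
    return PQ_PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


if __name__ == "__main__":
    print(color_count(8))   # 16777216
    print(color_count(10))  # 1073741824
    for nits in (100, 600, 1000):
        print(nits, "nits ->", round(pq_encode(nits), 3))
```

At 10 bits per channel you end up with a little over a billion colors, versus roughly 16.7 million at 8 bits. The PQ curve spends about half of its signal range on everything up to 100 nits, which is where the eye is most sensitive to small steps in brightness.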

HDR Tone Mapping at GPU level

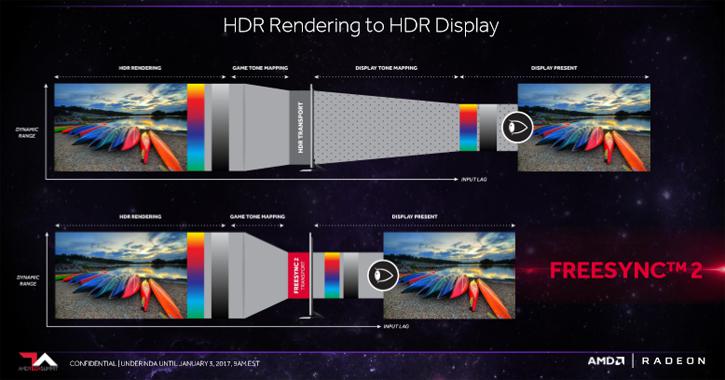

We're not 100% sure what the status of this is with the current build of Windows 10; however, FreeSync 2 has a few tricks up its sleeve in relation to HDR and reducing the input lag of HDR transport. What this embedded technology does is tone mapping on the graphics card side, something the display would normally do by itself on HDR content. This is now done at driver level, as part of the FreeSync 2 protocol. Since that happens before the image is sent to the monitor, it reduces tone mapping processing time in the display and thus input lag. So in short, proper HDR10 support with that massive color space and low-latency gaming is what this bit is about. Especially with HDR gaming in mind, this is a rather nice feature. This way FreeSync 2 offers over 2x the perceivable brightness and color volume of sRGB. This, however, is only attainable when using a FreeSync 2 API enabled game or video player, content that uses at least 2x the perceivable brightness and color range of sRGB, and a FreeSync 2 qualified monitor.
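To give an idea of what tone mapping actually does, here is a small Python sketch. Note that this is not AMD's algorithm, which is not described here; we swapped in the well-known extended Reinhard curve purely as an illustration, and the function name and the nit values are assumptions. The idea is simply that content mastered for a higher peak brightness gets compressed into what the display can show.

```python
def tone_map_nits(scene_nits, content_peak_nits, display_peak_nits):
    """Compress scene luminance into the display's range.

    Illustrative extended Reinhard curve, NOT the FreeSync 2 driver
    algorithm. The content peak maps exactly onto the display peak.
    """
    x = scene_nits / display_peak_nits
    white = content_peak_nits / display_peak_nits
    y = x * (1.0 + x / (white * white)) / (1.0 + x)
    return y * display_peak_nits


if __name__ == "__main__":
    # Hypothetical case: content mastered at 4000 nits, 600 nit monitor.
    for nits in (1, 100, 600, 2000, 4000):
        print(nits, "->", round(tone_map_nits(nits, 4000, 600), 1))
```

The curve leaves dark values almost untouched and squeezes the highlights, so the brightest pixel in the content lands on the brightest value the panel can produce. Doing this step on the GPU means the monitor receives values it can display directly.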

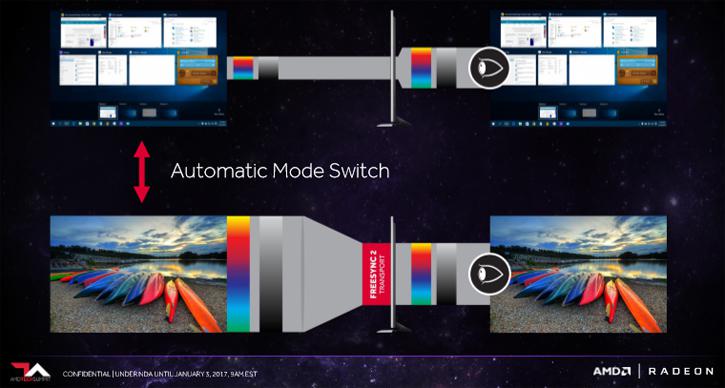

Switching seamlessly: thanks to an automatic mode switch, your graphics adapter and monitor can switch between SDR and HDR as well. So, when an HDR movie has ended or you are done playing an HDR game, you will be reverted to SDR mode automatically. Games are slowly starting to support 10-bit HDR gaming, also referred to as HDR10. With the latest drivers, AMD supports HDR gaming with both Dolby Vision and HDR10. This is, however, on select GPUs: basically anything Radeon RX 4xx and newer, as well as the latest Ryzen based APUs.

HDR video and gaming increase the vibrancy of colors, the detail in contrast and the range of luminosity. You will obviously need a monitor that supports it as well as a game title that supports it. HDR10 is an open standard supported by a wide variety of companies, which includes TV manufacturers such as LG, Samsung, Sharp, Sony, and Vizio, as well as Sony Interactive Entertainment and Microsoft, which support HDR10 on their PlayStation 4 and Xbox One video game console platforms (the latter exclusive to the Xbox One S and X). Dolby Vision is a competing HDR format that can be optionally supported on Ultra HD Blu-ray discs and by streaming services. Dolby Vision, as a technology, allows for a color depth of up to 12 bits and up to 10,000 nits of brightness, and can reproduce color spaces up to ITU-R Rec. 2020 using the SMPTE ST 2084 transfer function. Ultra HD (UHD) TVs that support Dolby Vision include models from LG, TCL, and Vizio, although their displays are only capable of 10-bit color and 800 to 1000 nits luminance.

Think big, and think a lot of bandwidth. Monitor resolutions are expanding. A problem with that is that the first 8K monitors needed multiple HDMI and/or DisplayPort connectors to be able to get a functional display. With DisplayPort HBR3, a thing or two has changed, as with the new feature level AMD can drive 4K at 120 Hz, 5K at 60 Hz and 8K at 30 Hz from a single DisplayPort cable. So with FreeSync 2 AMD added a new feature or two.
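A quick back-of-the-envelope calculation shows why those three modes fit on one cable. The Python sketch below compares the raw pixel data rate with the HBR3 payload rate (four lanes at 8.1 Gbit/s, minus the 8b/10b encoding overhead). As a simplification we assume 24 bits per pixel and ignore blanking intervals, so real-world numbers are somewhat higher; the function names are ours.

```python
HBR3_LANE_GBPS = 8.1          # raw link rate per lane
LANES = 4                     # a full DisplayPort link uses four lanes
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b line coding


def hbr3_payload_gbps():
    """Usable HBR3 bandwidth in Gbit/s after encoding overhead."""
    return HBR3_LANE_GBPS * LANES * ENCODING_EFFICIENCY


def video_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Raw pixel data rate in Gbit/s, ignoring blanking (assumption)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    modes = {
        "4K 120 Hz": (3840, 2160, 120),
        "5K 60 Hz": (5120, 2880, 60),
        "8K 30 Hz": (7680, 4320, 30),
    }
    limit = hbr3_payload_gbps()
    print("HBR3 payload:", round(limit, 2), "Gbit/s")
    for name, (w, h, hz) in modes.items():
        rate = video_gbps(w, h, hz)
        print(name, round(rate, 2), "Gbit/s, fits:", rate <= limit)
```

All three modes land between roughly 21 and 24 Gbit/s, just under the 25.92 Gbit/s that HBR3 can carry. Under the same assumptions, 4K at 120 Hz with 10 bits per channel (30 bits per pixel) comes to roughly 29.9 Gbit/s and would no longer fit uncompressed.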

Low Framerate Compensation (LFC)

LFC is not something new; what is new, however, is that all FreeSync 2 compatible monitors must support this low framerate compensation mode. Otherwise, they do not receive the FreeSync 2 badge and certification. LFC is a technology that addresses the minimum refresh rate concerns of most AMD FreeSync ready monitors. So when, with v-sync on, the display receives frames more slowly than its minimum refresh rate, LFC will prevent both tearing artifacts and motion judder. LFC pretty much always works if the maximum refresh rate is at least two times the lowest frequency of the FreeSync range (72 Hz here). For our Samsung panel in Ultimate FreeSync mode, 72 Hz x 2 = 144 Hz. This screen supports 144 Hz, so that means LFC will work. Really, that's all the logic behind it, as LFC doubles up on frames: 71 Hz, or FPS if you will, becomes 142 Hz, and that 142 Hz needs to fit into the highest possible refresh rate, which is 144 Hz in this case.
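The doubling logic described above can be sketched in a few lines of Python. The actual driver algorithm is adaptive and not public, so treat this purely as an illustration: the function names are made up, and the use of multipliers higher than two for very low framerates is our own assumption, extending the doubling example from the text.

```python
def lfc_supported(min_hz, max_hz):
    """LFC needs a maximum refresh rate of at least twice the minimum."""
    return max_hz >= 2 * min_hz


def lfc_refresh(fps, min_hz, max_hz):
    """Return (multiplier, refresh_hz) for a given framerate.

    Each frame is shown 'multiplier' times so that the refresh rate
    lands back inside the FreeSync range. Multipliers above 2 are an
    assumption of this sketch. Returns None if nothing fits.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    multiplier = 1
    while fps * multiplier < min_hz:
        multiplier += 1
    refresh_hz = fps * multiplier
    if refresh_hz > max_hz:
        return None
    return multiplier, refresh_hz


if __name__ == "__main__":
    print(lfc_supported(72, 144))     # True
    print(lfc_refresh(71, 72, 144))   # (2, 142)
    print(lfc_refresh(100, 72, 144))  # (1, 100)
```

With the 72 to 144 Hz range of the Ultimate mode on DisplayPort, 71 FPS is shown at 142 Hz, exactly the example from the text. With the Standard mode range of 120 to 144 Hz, `lfc_supported` returns False, as 144 Hz is less than twice 120 Hz.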

Basically, once the framerate drops out of the FreeSync range (below 72 Hz), the driver runs an adaptive algorithm on the GPU that monitors when frames are output and inserts extra refreshes in between them. Support for this feature is now mandatory for FreeSync 2 certified monitors. Alright, we've got the basics covered, let's move onwards into the review.