Synchronizing Monitor & Graphics Card

NVIDIA G-Sync

Synchronizing the monitor refresh rate to your graphics card

NVIDIA recently announced G-Sync, a technology that will eliminate some pretty hardcore stuff, things we have taken for granted for many years: screen tearing and VSYNC stutters. For that you will need to dig a bit into your wallet, though, as you need a compatible GeForce graphics card and a compatible monitor equipped with an NVIDIA G-Sync module. But once you have that combination, you'll be nothing other than impressed; that much we can guarantee! G-Sync is being billed as a game changer, and yes, it eliminates the problems that come with VSYNC (both on and off) in what is displayed on your monitor. See, in recent years we have all been driven by the knowledge that on a 60 Hz monitor you preferably need 60 FPS rendered by your graphics card. There is a very specific reason for that: you want the two as close to each other as possible, as that offers not only the best gaming experience but also the best visual experience. Running 35 FPS on a 60 Hz screen with VSYNC on would be fine, but you'd still see a hint of what I like to call "soft VSYNC stuttering".

Then there's the hardcore FPS gamer, who obviously wants extremely high FPS, and for these frag-masters the alternative is simply disabling VSYNC. However, with that same 35 FPS framerate on a 60 Hz screen, you'd see visible screen tearing. Heck, this is why framerate limiters are so popular: you try to keep each rendered frame in line with your monitor refresh rate. But yeah, these are the main reasons for screen anomalies, and ever since the start of the 3D graphics revolution (all hail 3dfx), we simply got used to these sync stutters and/or screen tearing. To compensate, we have been purchasing extremely fast dedicated graphics cards to match that screen refresh rate as closely as possible.

What Is Happening?

Okay, you just read the introduction; a hint geeky, I agree. But in order to understand G-Sync as a technology, I need to repeat and explain this at least two or three times. So let me try and explain once more what is happening. Very simply put, and you need to remember this: games do not deliver a consistent frame rate. The graphics card is always firing off frames towards your monitor as fast as it possibly can. As such, the frames per second rendered are dynamic and can bounce from, say, 30 to 80 FPS in a split second, depending on variables like the 3D scene, polygon count, user interaction, shader effects kicking in, etc. On the eye side of things you have the monitor, and it is a fixed device: it refreshes at, let's say, 60 Hz (the monitor refreshes and fires off 60 frames at you with each second that passes). Fixed and dynamic are two different things, and they collide with each other. So on one end we have the graphics card rendering at a varying framerate, while the monitor shows 60 images per second at a fixed, sequential interval. That causes a problem: with a slower (or faster) FPS than 60 and nothing forcing the two into step, you'll get multiple images displayed on the monitor within a single refresh. Graphics cards aren't that good at rendering at fixed speeds, so the industry tried to solve that with VSYNC on or, alternatively, off.
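To put the fixed-versus-dynamic mismatch in numbers, here is a small sketch. It assumes a 60 Hz panel and uses made-up per-frame render times (not measurements): a refresh happens every 16.67 ms, a 35 FPS frame takes about 28.57 ms, and frames with varying render times finish at arbitrary points inside a refresh cycle rather than on its boundary. The function names are illustrative only.

```python
# Sketch: compare a fixed monitor refresh interval with variable GPU frame times.
# The render times used below are hypothetical example values, not measurements.

def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between two monitor refreshes in milliseconds (60 Hz -> ~16.67 ms)."""
    return 1000.0 / refresh_hz


def frame_time_ms(fps: float) -> float:
    """Average time the GPU spends per frame at a given frame rate."""
    return 1000.0 / fps


def completion_offsets(render_times_ms, refresh_hz=60):
    """For each frame, return how far (in ms) into the current refresh cycle it finishes.

    An offset of 0 would mean the frame happens to finish exactly on a refresh
    boundary; anything else means it lands somewhere mid-cycle.
    """
    interval = refresh_interval_ms(refresh_hz)
    elapsed = 0.0
    offsets = []
    for render_time in render_times_ms:
        elapsed += render_time
        offsets.append(round(elapsed % interval, 2))
    return offsets


if __name__ == "__main__":
    print(round(refresh_interval_ms(60), 2))       # 16.67
    print(round(frame_time_ms(35), 2))             # 28.57
    print(completion_offsets([12.0, 20.0, 33.0]))  # [12.0, 15.33, 15.0]
```

None of the three example frames finishes on a refresh boundary, which is exactly the collision between a dynamic GPU and a fixed monitor.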

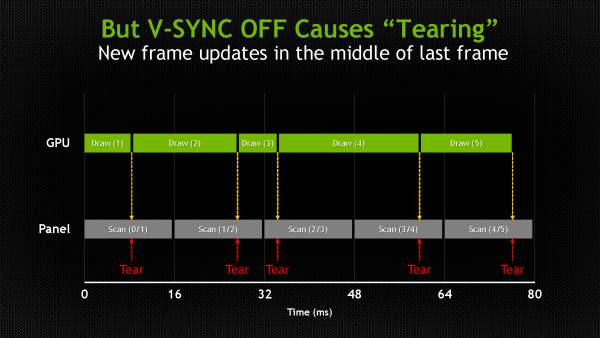

Up to now we have solved problems like VSYNC stutter or tearing in basically two ways. The first way is to simply ignore the refresh rate of the monitor altogether and update the image being scanned out to the display mid-cycle. This you guys all know as "VSync Off Mode", and it is the default way most FPS gamers play. If you freeze the display on a single refresh, however, this is what you will see: the epitome of graphics rendering evil, screen tearing.

[Screenshot: screen tearing in a single frozen refresh]

Look at the upper and lower part of the image: the break between them is screen tearing.

When a single refresh cycle (1/60th of a second on a 60 Hz monitor) shows two or more images, a very obvious tear line is visible at the break. Yup, we all refer to this as screen tearing, and you have all seen it many times. You can solve tearing by turning VSYNC on, but that creates a new problem.
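The VSYNC-off behavior can be modeled in a few lines. This is a simplified sketch, not how any particular monitor or driver is specified: it assumes the panel scans out top to bottom at constant speed over the whole refresh interval (vertical blanking ignored), that the GPU flips to a new frame the instant it finishes, and a hypothetical 1080-line screen. Each flip that lands mid-scan becomes a tear line at the row being drawn at that moment; a refresh with N tears shows N + 1 different images.

```python
from fractions import Fraction


def tear_lines(flip_times_ms, refresh_hz=60, height_px=1080):
    """Sketch of VSYNC off: map each buffer flip to the scanline being drawn at that moment.

    Simplifying assumptions (hypothetical model):
    - scanout sweeps top to bottom at constant speed over the whole refresh
      interval (vertical blanking time is ignored);
    - the GPU flips to a new frame the instant it finishes rendering.
    Returns {refresh_cycle_index: [tear row, ...]}.
    """
    interval = Fraction(1000) / refresh_hz  # exact ms per refresh, avoids float drift
    tears = {}
    for t in flip_times_ms:
        t = Fraction(t)
        cycle = int(t // interval)                    # which refresh is being scanned out
        progress = (t - cycle * interval) / interval  # 0 = top of screen, 1 = bottom
        row = int(progress * height_px)
        if row > 0:  # a flip exactly at the start of a refresh leaves no visible tear
            tears.setdefault(cycle, []).append(row)
    return tears


if __name__ == "__main__":
    # Hypothetical fast GPU finishing a frame every 5 ms: three tears in one refresh.
    print(tear_lines([5.0, 10.0, 15.0]))   # {0: [324, 648, 972]}
    # Hypothetical slower GPU: one flip per refresh, each tear at a different height.
    print(tear_lines([12.0, 32.0, 40.0]))  # {0: [777], 1: [993], 2: [432]}
```

Note that tearing is not limited to high frame rates: the second example flips only once per refresh, yet every refresh still shows a break because the flips never line up with the start of a scan.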

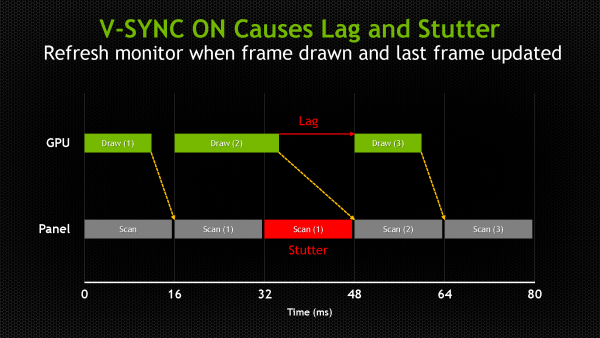

The solution to bypass tearing is to turn VSYNC on: here we basically force the GPU to delay screen updates until the monitor cycles to the start of a new refresh cycle. That delay causes stutter whenever the GPU frame rate is below the display refresh rate.

There's another nasty side effect. How many of you guys have been irritated by sluggish, laggy mouse input during gaming? Yup, VSYNC delays the GPU frames, and that creates the lag you are experiencing. It increases latency, which directly results in what you guys know and hate: input lag, the visible delay between a button being pressed and the result occurring on-screen.

In the image above we show how frames are delivered to the monitor with VSYNC enabled. As opposed to VSYNC off, we can see that Frame Draw 1 (Frame 1) is rendered in less than 16 ms, and instead of immediately displaying “Frame 2” and causing a tear, VSYNC makes the GPU wait to send “Frame 2” until the vertical blanking period of the monitor occurs and the prior frame has been completely displayed.

So when “Frame 2” takes longer than 16 ms to render, “Frame 1” is presented again (repeated) and “Frame 2” is not displayed until the next vertical blanking period. Displaying the same frame twice creates a delay that shows up as an animation stutter. This also produces input lag, because any new input from the gamer that went into “Frame 2” is now delayed as well. The gamer continues to see the result of the input from “Frame 1,” and won’t see the result of new input until “Frame 3.”
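The Frame 1 / Frame 2 / Frame 3 sequence above can be replayed with a minimal simulation. It assumes double-buffered VSYNC on a 60 Hz panel: a finished frame waits for the next refresh boundary, and the GPU starts the next frame only after that swap. "Latency" here is a simplification, measured from when the GPU starts a frame (assumed to be when input is read) to when that frame first reaches the screen. The render times (10 ms, 20 ms, 10 ms) are hypothetical values chosen to match the description.

```python
import math
from fractions import Fraction


def vsync_schedule(render_times_ms, refresh_hz=60):
    """Sketch of double-buffered VSYNC (a simplifying model, not a driver spec).

    Returns a list of (refresh_index_first_shown, refreshes_on_screen, latency_ms).
    refreshes_on_screen is None for the last frame because nothing replaces it.
    """
    interval = Fraction(1000) / refresh_hz  # exact ms per refresh
    start = Fraction(0)                     # when the GPU starts the current frame
    flips, latencies = [], []
    for render_time in render_times_ms:
        finish = start + Fraction(render_time)
        vblank = math.ceil(finish / interval)  # first refresh boundary at or after finish
        flips.append(vblank)
        latencies.append(round(float(vblank * interval - start), 2))
        start = vblank * interval  # GPU resumes rendering only after the buffer swap
    durations = [b - a for a, b in zip(flips, flips[1:])] + [None]
    return list(zip(flips, durations, latencies))


if __name__ == "__main__":
    # Frame 1 fast, Frame 2 slower than one refresh, Frame 3 fast (hypothetical times).
    print(vsync_schedule([10.0, 20.0, 10.0]))
    # [(1, 2, 16.67), (3, 1, 33.33), (4, None, 16.67)]
```

Frame 1 stays on screen for two refreshes (the repeat that reads as stutter), and Frame 2's start-to-screen time doubles to about 33 ms, which is the added input lag.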

- Enabling VSYNC helps a lot, but whenever the framerate varies and you pan from left to right in your 3D scene, you can typically see some pulsing (I hesitate to call it VSYNC stuttering, but that describes it best). So that is not perfect at all, and then there's that dreaded input lag.

- Alternatively, most people disable VSYNC, but here you run into a problem as well: multiple images per refresh cycle result in the phenomenon that is screen tearing, which we all hate.

If you put two and two together, it starts to make sense why we all want extremely fast graphics cards: most of you guys want to enable VSYNC and have a graphics card that runs faster than 60 FPS. Over the years we have accepted these two (well, three: stutter, tearing and input lag) problems and now take them for granted.
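A quick calculation shows why staying above 60 FPS matters so much with VSYNC on. Under a simplified double-buffered model (each frame occupies a whole number of refresh cycles and the GPU waits for the swap before starting the next frame), a GPU that could render at a steady 59 FPS or 35 FPS ends up displaying only 30 FPS on a 60 Hz screen. Triple buffering, which many games offer, behaves differently and is not modeled here; the function name is illustrative.

```python
import math
from fractions import Fraction


def effective_vsync_fps(render_fps, refresh_hz=60):
    """Displayed frame rate for a GPU that could render at a steady `render_fps`,
    under a simplified double-buffered VSYNC model: each frame is held for a whole
    number of refresh cycles, so the rate snaps to refresh_hz / n.
    """
    refreshes_per_frame = math.ceil(Fraction(refresh_hz) / Fraction(render_fps))
    return refresh_hz / refreshes_per_frame


if __name__ == "__main__":
    for fps in (100, 60, 59, 35, 25):
        print(fps, "->", effective_vsync_fps(fps))
    # 100 -> 60.0, 60 -> 60.0, 59 -> 30.0, 35 -> 30.0, 25 -> 20.0
```

Dropping just one frame below the refresh rate halves the displayed rate in this model, which is why a card that never dips under 60 FPS is so attractive.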

So where does NVIDIA G-Sync come in exactly? Well, let's head on over to the next page and we'll explain, as this technology can eliminate it all: lag, VSYNC stuttering and screen tearing. Sounds good, huh?